Results for “becker” 166 found

Becker on the FDA

In the latest Milken Institute Review, Nobel laureate Gary Becker argues (sign up required) that the FDA should permit drugs to be sold once they have passed a safety standard, i.e. a return to the pre-1962 system. He writes:

…a return to a safety standard alone would lower costs and raise the number of therapeutic compounds available. In particular, this would include more drugs from small biotech firms that do not have the deep pockets to invest in extended efficacy trials. And the resulting increase in competition would mean lower prices – without the bureaucratic burden of price controls…

Elimination of the efficacy requirement would give patients, rather than the FDA, the ultimate responsibility of deciding which drugs to try…To be sure, some sick individuals would try ineffective treatments that would otherwise have been prevented from reaching market under present FDA regulations. But the quantity of reliable health information now available with only a little initiative is many times greater than when the efficacy standard was introduced four decades ago.

Dan Klein and I have written extensively on this issue at our web site, FDAReview.org, and in our latest paper Do Off Label Drug Practices Argue Against FDA Efficacy Requirements?

Thursday assorted links

1. MIE: Robinson Crusoe tourism.

2. “An estimated 500,000~ women in the United States between 18-24 are content creators on Onlyfans.”

3. New Freakonomics radio podcast series on how to succeed at failing.

4. “Celebrities made this mainstream.” Recommended.

5. Want to send a comment to the IRS about proposed new crypto tax reporting rules? The polity that is AI.

6. The world’s first wearable e-reader?

7. Robert Irwin, RIP (NYT).

8. Brian Albrecht on why Armen Alchian is the GOAT. And Peter Isztin on Gary Becker.

*GOAT: Who is the Greatest Economist of all Time, and Why Does it Matter?*

I am pleased to announce and present my new project, available here, free of charge. It is derived from a 100,000 word manuscript, entirely written by me, and is well described by the title of this blog post.

I believe this is the first major work published in GPT-4, Claude 2, and some other services to come. I call it a generative book. From the project’s home page:

Do you yearn for something more than a book? And yet still love books? How about a book you can query, and it will answer away to your heart’s content? How about a book that will create its own content, on demand, or allow you to rewrite it? A book that will tell you why it is (sometimes) wrong?

To be clear, if you’re not into generative AI, you can just download the work onto your Kindle, print it out, or read it on a computer screen. Yet I hope you do more:

One easy place to start is with our own chatbot using GPT-4, and we’ll soon provide custom apps using Claude 2 and Llama 2. In the meantime we’ve provided instructions for how to experiment with them yourself.

Each service has different strengths and you should try more than one. You’ll see the very best performance by working with individual chapters using your own subscription to ChatGPT, Claude, or a similar service. The chapters can be read independently and in any order. Ask the AI if you’re lacking context. Try these sample questions to start.

You can ask it to summarize, ask it for more context, ask for a multiple choice exam on the contents, make an illustrated book out of a chapter, or ask it where I am totally wrong in my views. You could try starting with these sample questions. The limits are up to you.

Here is the Table of Contents:

1. Introduction

2. Milton Friedman

3. John Maynard Keynes

4. Friedrich A. Hayek

5. Those who did not make the short list: Marshall, Samuelson, Arrow, Becker, and Schumpeter

6. John Stuart Mill

7. Thomas Robert Malthus

8. Adam Smith

9. The winner(s): so who is the greatest economist of all time?

The site address is an easy to remember econgoat.ai. And as you will see from the opening chapter, it is not only about economics, it is also a very personal book about me. No Straussian here, I tell you exactly what I think, including of my personal meetings with Friedman and Hayek. If, however, you are looking for a Straussian reading of this project — which I would disavow — it is that I am sacrificing “what would have been a normal book” to the AI gods to win their favor.

And apologies in advance for any imperfections in the technology — generative books can only get better.

Recommended.

What I’ve been reading

Naomi Klein, Doppelganger: A Trip into the Mirror World. Have you ever been confused by Naomi Klein vs. Naomi Wolf? Intellectually they are both pretty crazy. And they are both named Naomi. Some might think they bear some resemblance to each other. Well, here is a whole book on that confusion! And it is written by Naomi Klein. How much insight and self-awareness can one intellectually crazy person have about being confused for another intellectually crazy person? Quite a bit, it turns out. Recommended, though with the provision that I understand you never felt you needed to read a whole book about such a topic.

Benjamín Labatut, The Maniac. Chilean author, he has penned the story of von Neumann but in the latter part of the book switches to contemporary AI and AlphaGo, semi-fictionalized. Feels vital and not tired, mostly pretty good, though for some MR readers the material may be excessively familiar.

J.M. Coetzee, The Pole. Short, compelling, self-contained, again deals with older men who have not resolved their issues concerning sex. Good but not great Coetzee.

Gary S. Becker, The Economic Approach: Unpublished Writings of Gary S. Becker. I am honored to have blurbed this book.

Richard Campanella, Bienville’s Dilemma: A Historical Geography of New Orleans is one mighty fine book.

Shuchen Xiang, Chinese Cosmopolitanism: The History and Philosophy of an Idea. Chinese cosmopolitanism, there was more of it than you might have thought. Should we be asking “Where did it go?” Or is it there more than ever?

Tail risk in production networks

This paper describes the response of the economy to large shocks in a nonlinear production network. A sector’s tail centrality measures how a large negative shock transmits to GDP – i.e. the systemic risk of the sector. Tail centrality is theoretically and empirically very different from local centrality measures such as sales share – in a benchmark case, it is measured as a sector’s average downstream closeness to final production. It also measures how large differences in sector productivity can generate cross-country income differences. The paper also uses the results to analyze the determinants of total tail risk in the economy. Increases in interconnectedness can simultaneously reduce the sensitivity of the economy to small shocks while increasing the sensitivity to large shocks. Tail risk is related to conditional granularity, where some sectors become highly influential following negative shocks.

That is a new piece by Ian Dew-Becker, via Alexander Berger.

Wednesday assorted links

1. Lucidity.

2. The culture that is Washington, D.C.: Little League cheating allegations.

3. Human Challenge Trial for malaria?

5. Dan Klein on Big Brother and the digital dollar (WSJ).

6. Howard S. Becker, RIP (NYT).

Peter Isztin on the incidence of AI

This short paper considers the effects of artificial intelligence (AI) tools on the division of labor across tasks. Following Becker and Murphy (1992) I posit a “team” with each team member being assigned to a task. The division of labor (that is, the number of specialized tasks) is here limited not only by the extent of the market, but by coordination costs. Coordination costs stem from the need for knowledge in multiple tasks, as well as from monitoring and punishing shirking and other malfeasance. The introduction of AI in this model helps the coordination of the team and fully or partially substitute for human “generalist” knowledge. This in turn can make specialization wider, resulting in a greater number of specialized fields. The introduction of AI technologies also increases the return to fully general knowledge (i.e. education).

Not a certainty, but definitely worth a ponder. Here is the draft.

Plagues Upon the Earth

Kyle Harper’s Plagues Upon the Earth is a remarkable accomplishment that weaves together microbiology, history, and economics to understand the role of diseases in shaping human history. Harper, an established historian known for his first three books on Rome and late antiquity, has an impressive command of virology, bacteriology, and parasitology as well as history and economics. In Plagues Upon the Earth he explains all of these clearly and with many arresting turns of phrase and insights:

There are about seventy-three bacteria among major human pathogens–out of maybe a trillion bacterial species on earth. To imagine bacteria primarily as pathogens is about as fair as thinking of human beings as mostly serial killers.

Despite the tingling fear we still feel in the face of large animals, fire made predators a negligible factor in human population dynamics. The warmth, security and mystic peace you feel around the campfire has been instilled by almost two million years of evolutionary advantage given to us by the flames.

Mosquitos are vampires with wings….The blood heist itself is an amazing feat. Following contrails of carbon dioxide that lead to her mark, the female mosquito lands and starts probing. Once she reaches her target, she inserts her tube-like needle, as flexible as a plumber’s snake, into the skin. She pokes a dozen or more times until she hits her mark. The proboscis itself is moistened with compounds that anesthetize the victim’s skin and deter coagulation. For a tense minute or two, she pulls blood into her gut, taking on several times her own weight, as much as she can carry and still fly. She has stolen a valuable liquid full of energy and free metals. Engorged, she unsteadily makes her getaway, desperate for the nearest vertical plane to land and recuperate, as her body digests the meal and keeps only what is needful for her precious eggs.

What I like best about Plagues Upon the Earth is that Harper thinks like an economist. I mean this in two senses. First, his chapters on the Wealth and Health of Nations and Disease and Global Divergence are alone worth the price of admission. In these chapters, Harper brings disease to the fore to understand why some nations are rich and others poor but he is well aware of all the other explanations and weaves the story together with expertise.

The second sense in which Harper thinks like an economist is deeper and more important. He has a model of parasites and their interactions with human beings. That model, of course, is the evolutionary model. To a parasite, human beings are a desirable host:

Just as robbers steal from banks because that is where the money is, parasites exploit human bodies because there are high rewards for being able to do so…. for a parasite, there is now more incentive to exploit humans than ever…look at human energy consumption…in a developed society today, every individual consumer is the rough ecological equivalent to a herd of gazelles.

That parasites are driven by “incentives” would seem to be nearly self-evident but in the hands of a master simple models can lead to surprising hypotheses and conclusions. Harper is the Gary Becker of parasite modeling. Here’s a simple example: human beings have changed their environments tremendously in the past several hundred years but that change in human environment created new incentives and constraints on parasites. Thus, it’s not surprising that most human parasites are new parasites. Chimpanzee parasites today are about the same as those that exploited chimpanzees 100,000 years ago but human parasites are entirely different. Indeed, because the human-host environment has changed, our parasites are more novel, narrow, and nasty than parasites attacking other species.

It’s commonly suggested that one of the reasons we are encountering novel parasites is due to our disruptions of natural ecosystems, venturing into territories previously unexplored by humans, thereby releasing ancient parasites that have lain dormant for millennia. Like the alleged curse of Tutankhamun’s tomb we are unleashing ancient foes! Similarly, concerns are voiced that climate change, through its effect on permafrost melt, may liberate “zombie” parasites poised for retaliation. But ancient parasites are not fit for human hosts as they have not evolved within the context of the contemporary human environment. So, while I don’t trivialize the potential consequences of melting permafrost, I think we should fear much more relatively recent diseases such as measles, cholera, polio, Ebola, AIDS, Zika, and COVID-19. Not to mention whatever entirely new disease evolution is bound to throw in our path.

Indeed, one of the most interesting speculations in Plagues Upon the Earth is that “global divergences in health may have reached their maxima in the early twentieth century.” The reason is that urbanization and transportation turned the new diseases of the industrial era, like cholera, tuberculosis and the plague (the latter older diseases but ideally primed for the industrial era) into pandemics (also a relatively recent word) at a time when only a minority of the world had the tools to combat the new diseases.

Science, of course, is giving us greater understanding and control of nature but our very success increases the incentives of parasites to breach our defenses.

[Thus,] the narrative is not one of unbroken progress, but one of countervailing pressures between the negative health feedback of growth and humanity’s rapidly expanding but highly unequal capacities to control threats to our health.

Still under-policed and over-imprisoned

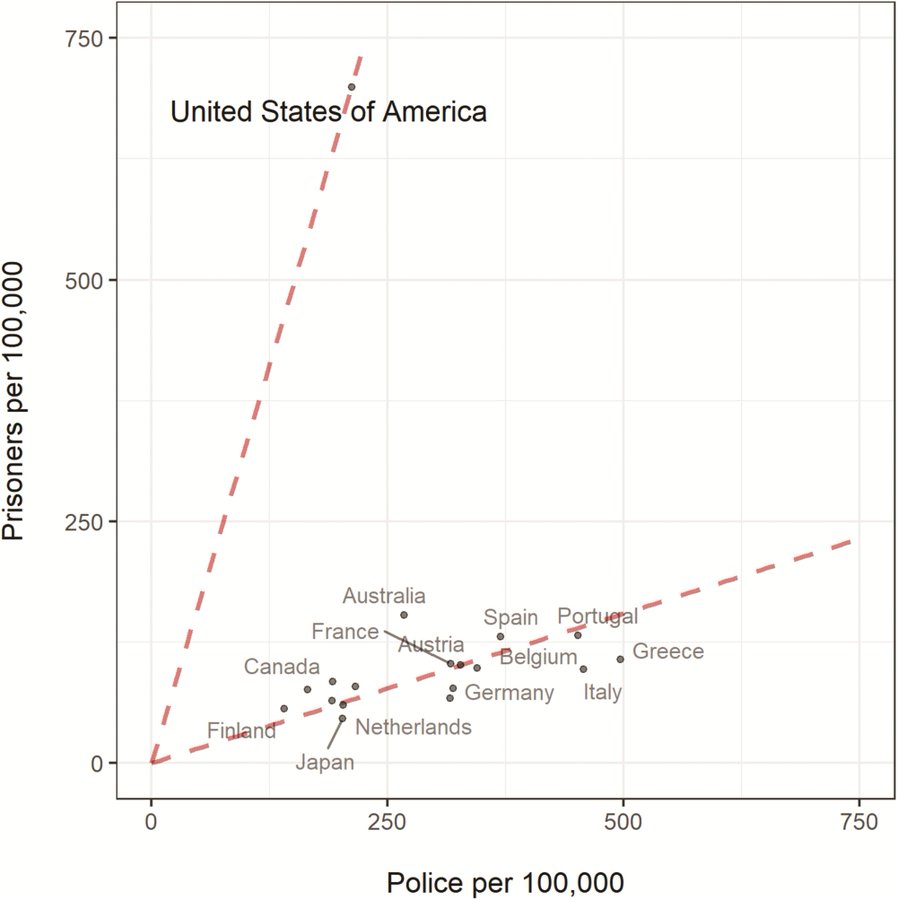

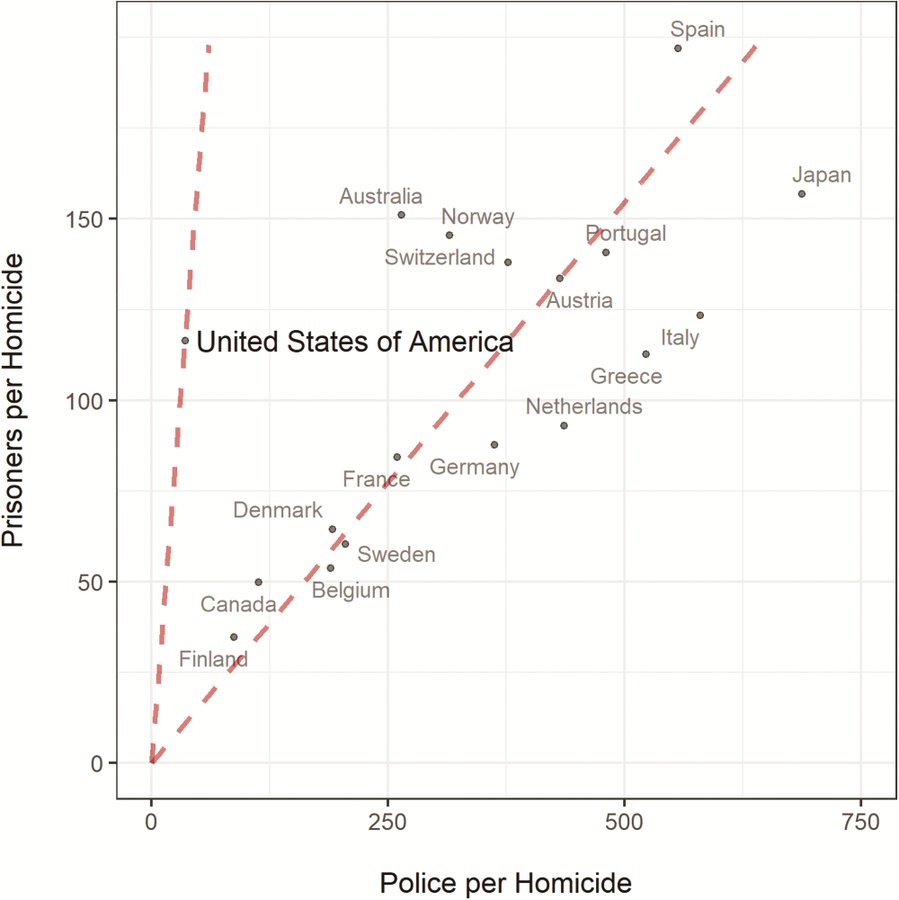

A new paper, The Injustice of Under-Policing, makes a point that I have been emphasizing for many years, namely, relative to other developed countries the United States is under-policed and over-imprisoned.

…the American criminal legal system is characterized by an exceptional kind of under-policing, and a heavy reliance on long prison sentences, compared to other developed nations. In this country, roughly three people are incarcerated per police officer employed. The rest of the developed world strikes a diametrically opposite balance between these twin arms of the penal state, employing roughly three and a half times more police officers than the number of people they incarcerate. We argue that the United States has it backward. Justice and efficiency demand that we strike a balance between policing and incarceration more like that of the rest of the developed world. We call this the “First World Balance.”
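To put the two ratios in that passage on the same footing, here is a back-of-the-envelope calculation in Python. It uses only the two rounded figures quoted above ("roughly three" prisoners per officer in the US, "roughly three and a half" officers per prisoner elsewhere); the function and variable names are illustrative, and the result is no more precise than those rounded inputs.

```python
def prisoners_per_officer(prisoners: float, officers: float) -> float:
    """Ratio of people incarcerated to police officers employed."""
    return prisoners / officers


# Figures as quoted in the excerpt (both are rough, rounded numbers).
us_ratio = prisoners_per_officer(prisoners=3.0, officers=1.0)
other_ratio = prisoners_per_officer(prisoners=1.0, officers=3.5)

# How much more heavily the US leans on prison relative to police.
relative_tilt = us_ratio / other_ratio

print(f"US prisoners per officer:    {us_ratio:.2f}")
print(f"Other developed countries:   {other_ratio:.2f}")
print(f"US tilt toward imprisonment: {relative_tilt:.1f}x")
```

On these rounded numbers the US balance between prison and police is tilted toward prison by about an order of magnitude compared with the rest of the developed world.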

First, as is well known, the US has a very high rate of imprisonment compared to other countries but less well known is that the US has a relatively low rate of police per capita.

If we focus on rates relative to crime then we get a slightly different but similar perspective. Namely, relative to the number of homicides we have a normal rate of imprisonment but are still surprisingly under-policed.

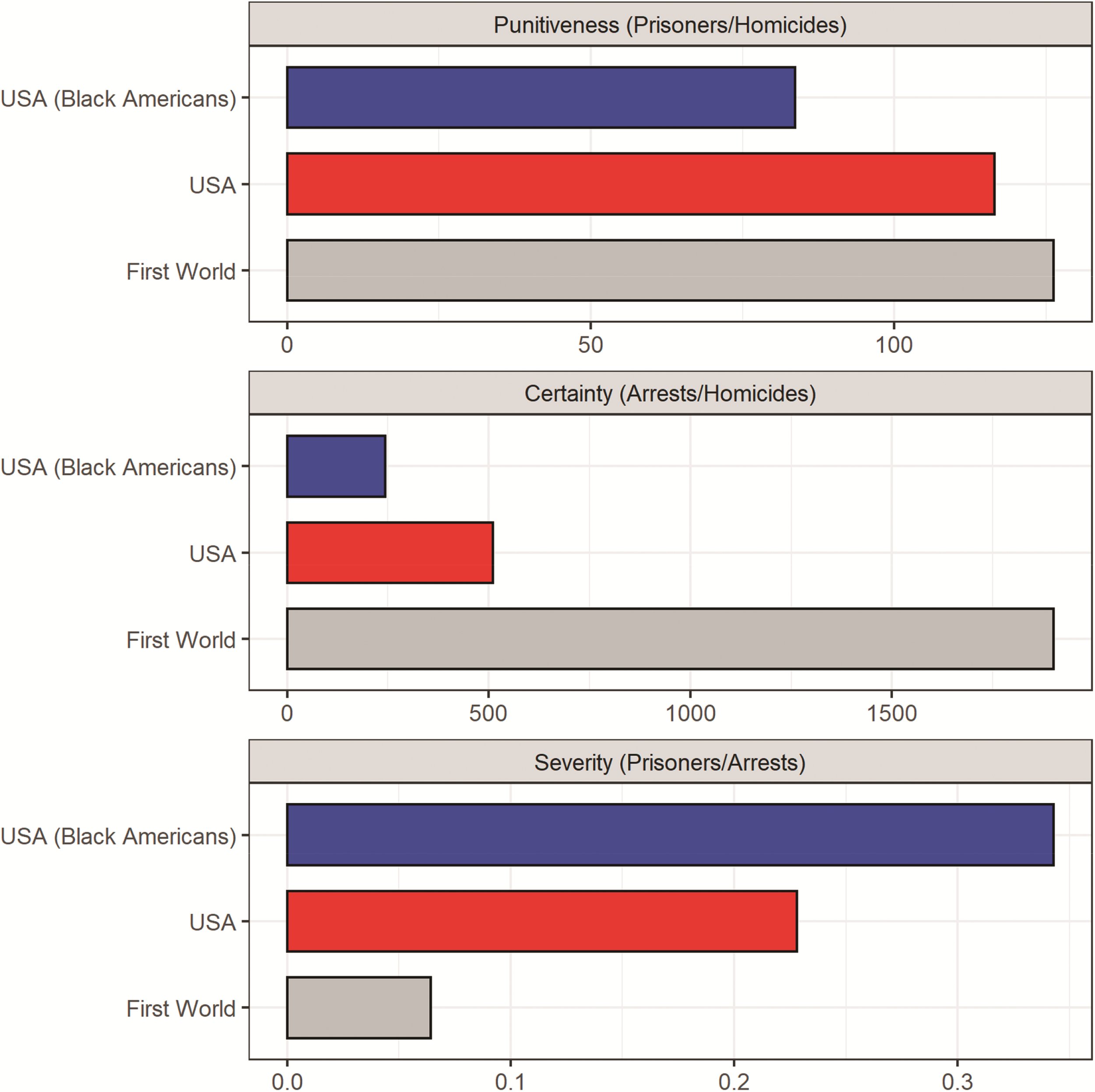

As a result, as I argued in What Was Gary Becker’s Biggest Mistake?, we have a low certainty of punishment (measured as arrests per homicide) and then try to make up for that with high punishment levels (prisoners per arrest). The low certainty, high punishment level is especially notable for black Americans.

Shifting to more police and less imprisonment could reduce crime and improve policing. More police and less imprisonment also has the advantage of being a feasible policy. Large majorities of blacks, hispanics and whites support hiring more police. “Tough on crime” can be interpreted as greater certainty of punishment and with greater certainty of punishment we can safely reduce punishment levels.

Hat tip: A thread from Justin Nix.

Immigration as a problem of “optimal harvesting”

Assume you are judging immigration policy from a nationalistic point of view for the receiving country. Furthermore, assume that you have in place, or could have in place, a mechanism for positive selection of migrants. That is, you can attract some real talent, some Edward Tellers and Piet Mondrians.

I then wonder whether, for any desired amount of immigration, it should not be taken from countries with equal or lower birth rates than the receiving country.

Let’s say you have country X — Ruritania — with a relatively high birth rate. In that country, the relatively talented people are having a fair number of children and producing a decent “rate of return” on their talent. Why pluck them from that multiplicative environment? A generation or two later, the prospects from that country will be all the brighter.

Conversely, if you take in talent from a country with an equal or lower birth rate to yours, you are not lowering the future rate of potentially talented babies born.

Note that if you wait to take in talent from Ruritania, it is not because you don’t want them. It is because you want more/better of them later in the future.

Furthermore, if you are taking migrants from Ruritania in the future, when their birth rates are declining, you are also taking in individuals who, in the Beckerian sense, are receiving relatively high quality human capital investments from their parents, at least relative to that country’s past.

The perceptive will note that this argument holds regardless of the absolute number of migrants you might wish to take in, high or low.

Sunday assorted links

1. Is the gene-sequencing company Illumina a monopoly? (NYT)

2. “In short: the more one’s intellectual contributions are defined by strengths, where those strengths also essentially depend on a broad base, the more your regional background is likely to shape your intellectual contributions.” More Nate Meyvis. And Nate on the value of T-shaped reading plans.

4. People on Twitter are using more political identifiers than before. And more yet if you count pronouns.

A Half Dose of Moderna is More Effective Than a Full Dose of AstraZeneca

Today we are releasing a new paper on dose-stretching, co-authored by Witold Wiecek, Amrita Ahuja, Michael Kremer, Alexandre Simoes Gomes, Christopher M. Snyder, Brandon Joel Tan and myself.

The paper makes three big points. First, Khoury et al. (2021) just published a paper in Nature which shows that “Neutralizing antibody levels are highly predictive of immune protection from symptomatic SARS-CoV-2 infection.” What that means is that there is a strong relationship between immunogenicity data that we can easily measure with a blood test and the efficacy rate that it takes hundreds of millions of dollars and many months of time to measure in a clinical trial. Thus, future vaccines may not have to go through lengthy clinical trials (which may even be made impossible as infection rates decline) but can instead rely on these correlates of immunity.

Here is where fractional dosing comes in. We supplement the key figure from Khoury et al.’s paper to show that fractional doses of the Moderna and Pfizer vaccines have neutralizing antibody levels (as measured in the early phase I and phase II trials) that look to be on par with those of many approved vaccines. Indeed, a one-half or one-quarter dose of the Moderna or Pfizer vaccine is predicted to be more effective than the standard dose of some of the other vaccines like the AstraZeneca, J&J or Sinopharm vaccines, assuming the same relationship as in Khoury et al. holds. The point is not that these other vaccines aren’t good–they are great! The point is that by using fractional dosing we could rapidly and safely expand the number of effective doses of the Moderna and Pfizer vaccines.

Second, we embed fractional doses and other policies such as first doses first in a SEIR model and we show that even if efficacy rates for fractional doses are considerably lower, dose-stretching policies are still likely to reduce infections and deaths (assuming we can expand vaccinations fast enough to take advantage of the greater supply, which is well within the vaccination frontier). For example, a half-dose strategy reduces infections and deaths under a variety of different epidemic scenarios as long as the efficacy rate is 70% or greater.
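For readers who want to see the mechanics, here is a toy illustration in Python. It is not the model from the paper: it is a minimal deterministic compartmental sketch with daily time steps, and every parameter value (population, transmission rates, supply of 1,000 full doses a day, 95% versus 70% efficacy) is an assumption chosen for illustration. It also assumes an all-or-nothing vaccine, one shot per person, and that shots go only to unvaccinated susceptibles.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    total_infected: float      # cumulative infections over the horizon
    people_vaccinated: float   # cumulative people who received a shot


def simulate(doses_per_day: float, dose_fraction: float, efficacy: float,
             days: int = 300, population: float = 1_000_000.0,
             beta: float = 0.3, sigma: float = 0.2, gamma: float = 0.2,
             initial_infectious: float = 100.0) -> Outcome:
    """Toy epidemic with vaccination; all parameters are illustrative."""
    s_unvax = population - initial_infectious  # susceptible, not yet vaccinated
    s_failed = 0.0                             # vaccinated but not protected
    exposed = 0.0
    infectious = initial_infectious
    total_infected = initial_infectious
    vaccinated = 0.0
    # A half dose (dose_fraction=0.5) lets the same supply reach twice as many people.
    shots_available = doses_per_day / dose_fraction

    for _ in range(days):
        force = beta * infectious / population
        new_exposed_unvax = force * s_unvax
        new_exposed_failed = force * s_failed
        new_infectious = sigma * exposed
        new_recovered = gamma * infectious

        s_unvax -= new_exposed_unvax
        s_failed -= new_exposed_failed
        exposed += new_exposed_unvax + new_exposed_failed - new_infectious
        infectious += new_infectious - new_recovered
        total_infected += new_exposed_unvax + new_exposed_failed

        # Vaccinate: a share `efficacy` leaves the susceptible pool for good,
        # the rest stay susceptible but are not vaccinated again.
        shots = min(shots_available, s_unvax)
        s_unvax -= shots
        s_failed += (1.0 - efficacy) * shots
        vaccinated += shots

    return Outcome(total_infected, vaccinated)


full_dose = simulate(doses_per_day=1_000, dose_fraction=1.0, efficacy=0.95)
half_dose = simulate(doses_per_day=1_000, dose_fraction=0.5, efficacy=0.70)

print(f"Full dose: {full_dose.total_infected:,.0f} infected, "
      f"{full_dose.people_vaccinated:,.0f} vaccinated")
print(f"Half dose: {half_dose.total_infected:,.0f} infected, "
      f"{half_dose.people_vaccinated:,.0f} vaccinated")
```

The intuition in this sketch is that, with supply as the binding constraint, half doses protect 2 × 0.70 = 1.4 people per full-dose equivalent versus 0.95 for full doses, so the susceptible pool shrinks faster. Different assumptions (ample supply, a long horizon, much lower fractional efficacy) could change the comparison.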

Third, we show that under plausible scenarios it is better to start vaccination with a less efficacious vaccine than to wait for a more efficacious vaccine. Thus, Great Britain and Canada’s policy of starting First Doses First with the AstraZeneca vaccine and then moving to second doses, perhaps with the Moderna or Pfizer vaccines, is a good strategy.

It is possible that new variants will reduce the efficacy rate of all vaccines (indeed, that is almost inevitable), but that doesn’t mean that fractional dosing isn’t optimal nor that we shouldn’t adopt these policies now. What it means is that we should be testing and then adapting our strategy in light of new events like a battlefield commander. We might, for example, use fractional dosing in the young or for the second shot and reserve full doses for the elderly.

One more point worth mentioning. Dose stretching policies everywhere are especially beneficial for less-developed countries, many of which are at the back of the vaccine queue. If dose-stretching cuts the time to be vaccinated in half, for example, then that may mean cutting the time to be vaccinated from two months to one month in a developed country but cutting it from two years to one year in a country that is currently at the back of the queue.
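The arithmetic behind that comparison is simple enough to write down. The short Python sketch below assumes, as in the example above, that stretching doses by some factor shortens the wait proportionally; the function name and the stretch factor of 2 are illustrative.

```python
def months_saved(wait_months: float, stretch_factor: float) -> float:
    """Months saved if dose-stretching divides the wait by stretch_factor."""
    return wait_months - wait_months / stretch_factor


# Waits taken from the example in the text: two months vs. two years.
for label, wait in [("developed country", 2.0), ("back of the queue", 24.0)]:
    saved = months_saved(wait, stretch_factor=2.0)
    print(f"{label}: wait falls from {wait:.0f} to {wait - saved:.0f} months "
          f"({saved:.0f} saved)")
```

The same proportional cut is worth twelve times as many months of earlier protection for the country at the back of the queue.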

Read the whole thing.

The Becker-Friedman center also has a video discussion featuring my co-authors, Nobel prize winner Michael Kremer and the very excellent Witold Wiecek.

Misdemeanor Prosecution

Misdemeanor Prosecution (NBER) (ungated) is a new, blockbuster paper by Agan, Doleac and Harvey (ADH). Misdemeanor crimes are lesser crimes than felonies and typically carry a potential jail term of less than one year. Examples of misdemeanors include petty theft/shoplifting, prostitution, public intoxication, simple assault, disorderly conduct, trespass, vandalism, reckless driving, indecent exposure, and various drug crimes such as possession. Eighty percent of all criminal justice cases, some 13 million cases a year, are misdemeanors. ADH look at what happens to subsequent criminal behavior when misdemeanor cases are prosecuted versus non-prosecuted. Of course, the prosecuted differ from the non-prosecuted so we need to find situations where for random reasons comparable people are prosecuted and non-prosecuted. Not surprisingly some Assistant District Attorneys (ADAs) are more lenient than others when it comes to prosecuting misdemeanors. ADH use the random assignment of ADAs to a case to tease out the impact of prosecution–essentially finding two similar individuals one of whom got lucky and was assigned a lenient ADA and the other of whom got unlucky and was assigned a less lenient ADA.

We leverage the as-if random assignment of nonviolent misdemeanor cases to Assistant District Attorneys (ADAs) who decide whether a case should move forward with prosecution in the Suffolk County District Attorney’s Office in Massachusetts. These ADAs vary in the average leniency of their prosecution decisions. We find that, for the marginal defendant, nonprosecution of a nonviolent misdemeanor offense leads to large reductions in the likelihood of a new criminal complaint over the next two years. These local average treatment effects are largest for first-time defendants, suggesting that averting initial entry into the criminal justice system has the greatest benefits.

… We find that the marginal nonprosecuted misdemeanor defendant is 33 percentage points less likely to be issued a new criminal complaint within two years post-arraignment (58% less than the mean for “complier” defendants who are prosecuted; p < 0.01). We find that nonprosecution reduces the likelihood of a new misdemeanor complaint by 24 percentage points (60%; p < 0.01), and reduces the likelihood of a new felony complaint by 8 percentage points (47%; not significant). Nonprosecution reduces the number of subsequent criminal complaints by 2.1 complaints (69%; p < .01); the number of subsequent misdemeanor complaints by 1.2 complaints (67%; p < .01), and the number of subsequent felony complaints by 0.7 complaints (75%; p < .05). We see significant reductions in subsequent criminal complaints for violent, disorderly conduct/theft, and motor vehicle offenses.

Did you get that? On a wide variety of margins, prosecution leads to more subsequent criminal behavior. How can this be?

We consider possible causal mechanisms that could be generating our findings. Cases that are not prosecuted by definition are closed on the day of arraignment. By contrast, the average time to disposition for prosecuted nonviolent misdemeanor cases in our sample is 185 days. This time spent in the criminal justice system may disrupt defendants’ work and family lives. Cases that are not prosecuted also by definition do not result in convictions, but 26% of prosecuted nonviolent misdemeanor cases in our sample result in a conviction. Criminal records of misdemeanor convictions may decrease defendants’ labor market prospects and increase their likelihoods of future prosecution and criminal record acquisition, conditional on future arrest. Finally, cases that are not prosecuted are at much lower risk of resulting in a criminal record of the complaint in the statewide criminal records system. We find that nonprosecution reduces the probability that a defendant will receive a criminal record of that nonviolent misdemeanor complaint by 55 percentage points (56%, p < .01). Criminal records of misdemeanor arrests may also damage defendants’ labor market prospects and increase their likelihoods of future prosecution and criminal record acquisition, conditional on future arrest. All three of these mechanisms may be contributing to the large reductions in subsequent criminal justice involvement following nonprosecution.

So should we stop prosecuting misdemeanors? Not necessarily. Even if prosecution increases crime by the prosecuted it can still lower crime overall through deterrence. In fact, since there are more people who are potentially deterred than who are prosecuted, general deterrence can swamp specific deterrence (albeit there are 13 million misdemeanors so that’s quite big). The authors, however, have gone some way towards addressing this objection because they combine their “micro” analysis with a “macro” analysis of a policy experiment.

During her 2018 election campaign, District Attorney Rollins pledged to establish a presumption of nonprosecution for 15 nonviolent misdemeanor offenses…After the inauguration of District Attorney Rollins, nonprosecution rates rose not only for cases involving the nonviolent misdemeanor offenses on the Rollins list, but also for those involving nonviolent misdemeanor offenses not on the Rollins list (and for all nonviolent misdemeanor cases)…. the increases in nonprosecution after the Rollins inauguration led to a 41 percentage point decrease in new criminal complaints for nonviolent misdemeanor cases on the Rollins list (not significant), a 47 percentage point decrease in new criminal complaints for nonviolent misdemeanor cases not on the Rollins list (p < .05), and a 56 percentage point decrease in new criminal complaints for all nonviolent misdemeanor cases (p < .05).

It’s unusual and impressive to see multiple sources of evidence in a single paper. (By the way, this paper is also a great model for learning all the new specification tests and techniques in the “leave-out” literature, exogeneity, relevance, exclusion restriction, monotonicity etc. all very clearly described.)

The policy study is a short-term study so we don’t know what happens if the rule is changed permanently but nevertheless this is good evidence that punishment can be criminogenic. I am uncomfortable, however, with thinking about non-prosecution as the choice variable, even on the margin. Crime should be punished. Becker wasn’t wrong about that. We need to ask more deeply, what is it about prosecution that increases subsequent criminal behavior? Could we do better by speeding up trials (a constitutional right that is often ignored!)–i.e. short, sharp punishment such as community service on the weekend? Is it time to think about punishments that don’t require time off work? What about more diversion to programs that do not result in a criminal record? More generally, people accused and convicted of crimes ought to find help and acceptance in re-assimilating to civilized society. It’s crazy–not just wrong but counter-productive–that we make it difficult for people with a criminal record to get a job and access various medical and housing benefits.

The authors are too sophisticated to advocate for non-prosecution as a policy but it fits with the “defund the police,” and “end cash bail” movements. I worry, however, that after the tremendous gains of the 1990s we will let the pendulum swing back too far. A lot of what counts as cutting-edge crime policy today is simply the mood affiliation of a group of people who have no recollection of crime in the 1970s and 1980s. The great forgetting. It’s welcome news that we might be on the wrong side of the punishment Laffer curve and so can reduce punishment and crime at the same time. But it’s a huge mistake to think that the low levels of crime in the last two decades are a permanent feature of the American landscape. We could lose it all in a mistaken fit of moralistic naivete.

Google Trends as a measure of economic influence

That is a new research paper by Tom Coupé, here is one excerpt:

I find that search intensity rankings based on Google Trends data are only modestly correlated with more traditional measures of scholarly impact…

The definition of who counts as an economist is somewhat loose, so:

Plato, Aristotle and Karl Marx constitute the top three. They are followed by B. R. Ambedkar, John Locke and Thomas Aquinas, with Adam Smith taking the seventh place. Smith is followed by Max Weber, John Maynard Keynes and the top-ranking Nobel Prize winner, John Forbes Nash Jr.

…John Forbes Nash Jr., Arthur Lewis, Milton Friedman, Paul Krugman and Friedrich Hayek are the most searched for Nobel Prize winners for economics, while Tjalling Koopmans, Reinhard Selten, Lawrence Klein, James Meade and Dale T. Mortensen have the lowest search intensity.

Here are the Nobelist rankings. Here are the complete rankings. If you are wondering, I come in at #104, just ahead of William Stanley Jevons, one of the other Marginal Revolution guys, and considerably ahead of Walras and Menger, early co-bloggers (now retired) on this site. Gary Becker is what…#172? Ken Arrow is #184. The internet is a funny place.

I guess I found this on Twitter, but I have forgotten whom to thank – sorry!

Cybercrime and Punishment

Ye Hong and William Neilson have solved for the equilibrium:

This paper models cybercrime by adding an active victim to the seminal Becker model of crime. The victim invests in security that may protect her from a cybercrime and, if the cybercrime is thwarted, generate evidence that can be used for prosecution. Successful crimes leave insufficient evidence for apprehension and conviction and, thus, cannot be punished. Results show that increased penalties for cybercriminals lead them to exert more effort and make cybercrimes more likely to succeed. Above a threshold they also lead victims to invest less in security. It may be impossible to deter cybercriminals by punishing them. Deterrence is possible, but not necessarily optimal, through punishing victims, such as data controllers or processors that fail to protect their networks.

Via the excellent Kevin Lewis.