Results for “zmp” 73 found

Google searches for inequality (from my email)

From Jared Sleeper:

I was surprised to see this magnitude of effect. Google searches for “inequality” are twice as high during the academic year as they are during the summer. They double in September vs. August and then collapse by 50% in June vs. May.

New issue of Econ Journal Watch

This is now twenty years of Econ Journal Watch, congratulations to Dan Klein! Here is the table of contents:

Volume 20, Number 2, September 2023

Screening the 1915 film The Birth of a Nation: In an article in the American Economic Review, Desmond Ang purports to show causal impact of screenings of the film between 1915 and 1919 on lynchings, on the formation and growth of Ku Klux Klan chapters between 1920 and 1925, and on hate crimes in the early 2000s. Here, concurring that the film itself reeks of racism, Robert Kaestner scrutinizes Ang’s data and analyses, and challenges the claims of causal evidence of effects from 1915–1919 screenings of the film. (Note: Professor Ang was not invited to reply for concurrent publication because Kaestner’s piece was finalized at too late a date. Professor Ang is invited to reply in a future issue.)

Temperature-economic growth claims tested again: Having tested temperature-economic growth claims previously in this journal (here and here), David Barker now reports on his investigation into much-cited articles by Melissa Dell, Benjamin Jones, and Benjamin Olken, published in the American Economic Review in 2009 and the American Economic Journal: Macroeconomics in 2012. As with the two previous pieces by Barker, the commented-on authors have declined to reply (the invitation remains open).

Debating the causes of the Ukraine famine of the early 1930s: Two scholars interpret the complex causes of a tragedy that caused the loss of perhaps three million souls. Natalya Naumenko’s research on the causes of the Ukraine famine is discussed by Mark Tauger, and Naumenko replies.

Ergodicity economics, debated: A number of scholars have advanced an approach to decision making under uncertainty called ergodicity economics. A critique is provided here by Matthew Ford and John Kay, who maintain that psychology is fundamental to any general theory of decision making under uncertainty. Eleven proponents of ergodicity economics have coauthored a reply. They suggest that the critique is based on an incomplete understanding of ergodicity economics, and point to two sources of misunderstanding. The replying authors are Oliver Hulme, Arne Vanhoyweghen, Colm Connaughton, Ole Peters, Simon Steinkamp, Alexander Adamou, Dominik Baumann, Vincent Ginis, Bert Verbruggen, James Price, and Benjamin Skjold.

Dispute resolution on hospitals, communication, and dispute resolution? Previously, Florence R. LeCraw, Daniel Montanera, and Thomas A. Mroz (LMM) criticized the statistical methods of a 2018 article in Health Affairs. Here, Maayan Yitshak-Sade, Allen Kachalia, Victor Novack, and Michelle M. Mello provide a reply to LMM, and LMM provide a rejoinder to them.

Aaron Gamino rejoins on health insurance mandates and the marriage of young adults: Previously, Aaron Gamino commented on the statistical modeling in a 2022 Journal of Human Resources article, whose authors, Scott Barkowski and Joanne Song McLaughlin, replied. Here now Gamino provides a rejoinder.

A History of Classical Liberalism in the Netherlands: Edwin van de Haar narrates the classical liberal movements in the Netherlands, from the Dutch Golden Age, through the 18th, 19th, and 20th centuries, and down to today. The article extends the series on Classical Liberalism in Econ, by Country.

To Russia with love: The conservative liberal Boris Chicherin (1828–1904) addressed his fellow Russians in an 1857 essay “Contemporary Tasks of Russian Life.” Here, the essay is republished by permission of Yale University Press, with a Foreword by the translator Gary Hamburg.

Pierre de Boisguilbert: Prime Extracts and Some Correspondence: The first great exponent of liberal economics in France was Pierre de Boisguilbert (1646–1714). Here, Benoît Malbranque provides English-language readers with a taste of Boisguilbert, and for the first time.

SSRN and medRxiv Censor Counter-narrative Science: Jay Bhattacharya and Steve Hanke detail the experience of three research teams being censored by SSRN and medRxiv. The article also points to a website (link) where scholars can report their experiences of being censored by SSRN, medRxiv, or other preprint servers.

Journal of Accounting Research’s Report on Its Own Research-Misconduct Investigation of an Article It Published: Dan Klein reports and rebukes the journal.

What are your most underappreciated works? Previously, 18 scholars with 4k+ Google Scholar cites pointed to a decade-or-more old paper with cite count below his or her h-index. Now, they are joined by Andrew Gelman, Robert Kaestner, Robert A. Lawson, George Selgin, Ilya Somin, and Alex Tabarrok.

EJW Audio:

Edwin van de Haar on Classical Liberalism in the Netherlands

Paul Robinson on Russian Liberalism

Vlad Tarko and Radu Nechita on Liberalism in Romania, 1829 to 2023

Nathan Labenz on AI pricing

I won’t double indent, these are all his words:

“I agree with your general take on pricing and expect prices to continue to fall, ultimately approaching marginal costs for common use cases over the next couple years.

A few recent data points to establish the trend, and why we should expect it to continue for at least a couple years…

- OpenAI reduced core LLM pricing by 2/3rds last year.

- StabilityAI has recently reduced prices on Stable Diffusion down to a base of $0.002 / image – now you get 500 images / dollar. This is a >90% reduction from OpenAI’s original DALLE2 pricing.

- OpenAI has also recently reduced their embeddings price by 99.8% – not a typo! You can now index all 200M+ papers on Semantic Scholar for $500K-2M, depending on your approach.

- Emad from StabilityAI projects ~1M fold cost improvement over next 10 years – responding to Chamath who had predicted 1000X improvement

Looking ahead…

- continued application of RLHF and similar techniques – these techniques create 100X parameter advantage (already in use in force at OpenAI, Anthropic, and Google – but limited use elsewhere)

- the CarperAI “Open Instruct” project – also affiliated with (part of?) StabilityAI, aims to match OpenAI’s current production models with an open source model, expected in 2023

- 8-bit and maybe even 4-bit inference – simply by rounding weights off to fewer significant digits, you save memory requirements and inference compute costs with minimal performance loss

- pruning for sparsity – turns out some LLMs work just as well if you set 60% of the weights to zero (though this likely isn’t true if you’re using Chinchilla-optimal training)

- mixture of experts techniques – another take on sparsity, allows you to compute only certain dedicated sub-blocks of the overall network, improving speed and cost

- distillation – a technique by which larger, more capable models can be used to train smaller models to similar performance within certain domains – Replit has a great writeup on how they created their first release codegen model in just a few weeks this way!

- distributed training techniques, including approaches that work on consumer devices, and “reincarnation” techniques that allow you to re-use compute rather than constantly re-training from scratch

And this is all assuming that the weights from a leading model never leak – that would be another way things could quickly get much cheaper… ”

TC again: All worth a ponder, I do not have personal views on these specific issues, of course we will see. And here is Nathan on Twitter.
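To make Nathan's point about 8-bit and 4-bit inference concrete, here is a minimal sketch of weight rounding. It assumes symmetric uniform quantization with a single scale for the whole weight list; the function names and the weight values are made up for illustration, and production systems typically use more careful schemes (per-channel scales, calibration data).

```python
def quantize(weights, bits=8):
    """Map floats to signed integer codes using one shared scale (an assumed, simplified scheme)."""
    levels = 2 ** (bits - 1) - 1              # 127 for 8-bit, 7 for 4-bit
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / levels if max_abs > 0 else 1.0
    codes = [round(w / scale) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float weights from the integer codes."""
    return [c * scale for c in codes]


# Hypothetical weights, chosen only to illustrate the rounding error.
weights = [0.82, -0.41, 0.05, -1.27, 0.33]

for bits in (8, 4):
    codes, scale = quantize(weights, bits)
    restored = dequantize(codes, scale)
    max_err = max(abs(w - r) for w, r in zip(weights, restored))
    print(f"{bits}-bit codes: {codes}, max rounding error: {max_err:.4f}")
```

Each weight is stored as a small integer plus one shared scale, which is where the memory saving comes from. The rounding error per weight is bounded by half the scale, so it grows as the bit width shrinks.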

Emergent Ventures Africa and African diaspora, first cohort

Saron Berhane, Australian-Ethiopian living in Ghana, for research in synthetic biology (bio-leather produced using microbes). Previously she was the co-founder of an agriculture technology startup that specializes in real-time airborne disease detection.

Emmanuel Nnadi, Nigerian microbiologist and lecturer at Plateau State University in Bokkos, for research on phage therapies and the development of a phage bank in Nigeria.

Dithapelo Medupe, Botswana medical doctor and Anthropology PhD candidate at UPenn, to support her research on statistical approaches to multilinear evolution in African development and for general career development.

Mercy Muwanguzi, Ugandan high school senior, to support her research on robotics design and development for medical purposes in Kampala. She was jointly awarded the President’s Innovation Award for Science and Innovation.

Nseabasi Akpan, a professional photographer from Ibadan, for promoting photography education to young people in Nigeria.

Colin Clarke, an Astrophysics undergrad in Ireland, for travel to Nairobi to assist in providing astronomy education to rural schools in Kenya with a non-profit called the Travelling Telescope.

Geraud Neema, Congolese policy analyst living in Mauritius, for in-depth research on the domestic policy impact of Africans educated in China and for general career development.

I thank Rasheed Griffith for leading the selection process behind our new branch of Emergent Ventures Africa and African diaspora.

AI for Emergent Ventures

I am pleased to announce the initiation of a new, special tranche of the Emergent Ventures fund to identify and foster artificial intelligence researchers and talent in emerging economies. This tranche is thanks to a special gift from Schmidt Futures.

Several factors — including credential requirements, long review times, and ageism — deter AI talent from seeking support for their work. EV will use its usual active approach — a super-simple application form, quick turnaround time, and its existing global network — to find both credentialed and uncredentialed talent across the world, especially in emerging economies.

Click the “Apply Now” at the normal Emergent Ventures site and follow the super-simple instructions and note how your project relates to AI as you answer the questionnaire. There is no application deadline for EV-AI, but applicants are encouraged to apply quickly, as funds are limited.

Those unfamiliar with Emergent Ventures can learn more here and here. Here is the announcement.

If you are interested in supporting the AI tranche of Emergent Ventures, please write to me or to Shruti Rajagopalan at [email protected].

I am still unsure how to title this post

Here is the punchline:

“The Department of Chemistry and Biochemistry at UCLA seeks applications for an Assistant Adjunct Professor on a without salary basis. Applicants must understand there will be no compensation for this position.”

At first I was pondering “These wages are not sticky downwards,” and then “These wages are sticky downwards.” Or how about “Tax them anyway”? “Solve for the ZMP equilibrium”?

“One of the few jobs where your payments are inflation linked!”?

What else? Here is the ad, here are some possible explanations, I am not sure if those make it better or worse.

I thank several loyal MR readers for the pointer.

Monday assorted links

1. DeFi research associate job at Mercatus.

2. On decentralization, Vitalik responds to Moxie. And Suzuha responds to Moxie.

3. Paul Brody on that same debate. He says Web 3.0 is too complicated.

4. In Quebec, alcohol store customers need to be vaccinated, but not the employees.

Structural adjustment for thee but not allowed for me

The economy has not bounced back to prepandemic employment levels, even as G.D.P. effectively has.

Some blame unemployment benefits for keeping workers at home, while others claim that it is the virus still holding back customers and therefore employers from adding jobs. Yet there is a third factor that is likely the labor market’s primary challenge: We are undergoing an enormous reallocation of people and jobs. People need time to find their new position in the labor market.

The early hope among policymakers and economists was that the pandemic aid offered to businesses and families would mean that once we recovered from the pandemic, workers would simply return to their old jobs, sending millions back to work each month and closing the employment gap quickly.

The problem is that old jobs are long gone for the vast majority of those who remain unemployed.

That is from Betsey Stevenson (NYT), and I am not taking issue with her arguments. Note that if you look at the debate over 2021 more broadly, pretty much everyone agrees there might be too much AD rather than too little. And yet these matching problems are still around? Hmm….once you are in a mess, supply-side labor adjustment problems just cannot be fixed so easily by nominal demand and nominal demand only. See my earlier recent post on this point, namely that business cycle recoveries tend to look the same on the labor side for supply-side reasons. During recoveries a lot of people just don’t want to go back to work or even look for a job! That was true in the last recession as well, read this paper, or this research. People hate the idea if you call them ZMP, but it’s right there in the numbers…how can someone be MP > 0 if they won’t even show up for an interview?

You might notice, by the way, I am not a huge fan of the NAIRU concept and you won’t see me cite it very often (occasionally it is useful shorthand for a less controversial concept). The following notion, however, is well-defined: “What the rate of unemployment would be if there were no major negative shocks for a decade and people had seven, eight, or even more years to search for the right job match.” Yes that is indeed a well-defined number, and that number is pretty low. I’m just not sure that is very “natural.” What would John Gray say? The Marquis de Sade?

Thursday assorted links

1. One of these people is an abysmal reasoner. Kudos to you if you can avoid those more general fallacies across a broader range of settings.

3. James Brown concert Paris 1968, starts a bit slow keeps on getting better.

4. Thwarted markets in everything: “Smuggler found with nearly 1,000 cacti and succulents strapped to her body.” New Zealand, of course.

5. New Senate bill would punish Big Tech for not snitching, and would attempt to monitor communications.

6. The more successful vaccinating states are keeping it simple. I wonder if above-average use of digital technology correlates with the more negative results.

7. “We need to think about the real world of ZMP, not the imaginary world of neoclassical equilibrium.”

8. Minnesota budget forecast is for $641 million surplus. And Pearlstein tells the truth about the stimulus.

Wednesday assorted links

1. Don’t let them tell you that ZMP workers are not for real.

2. Why was vaccination so much faster in 1947?

3. A new mRNA vaccine for multiple sclerosis?

4. “Business is the only institution that is now perceived as being both ethical and competent enough to solve the world’s problems.” Full study here.

5. Attempted Quebec regulatory arbitrage: “Quebec Woman Fined For Putting Leash on Her Partner, Taking Him for a Walk.”

The YIMBYs win one, in the UK

The government is determined all the same, in keeping with the prime minister’s desire to “build, build, build”, to loosen our restrictive planning system. His proposed reforms will curb the ability of local politicians to slow down plans that have received initial approval. The requirements for developers to include cheaper housing on their sites will be relaxed. Land will be split into the three categories of growth, renewal, and preservation. Any school, shop or office which meets local design standards will be given an assumed permission to develop in the first two of these three categories. The aim will be for each area to agree a local plan in 30 months rather than the current average of seven years.

Here is more from Philip Collins at the London Times (gated). Do any of you know of a good ungated link on this? Here is The Guardian, in unsurprising fashion, siding with NIMBY. So far the BBC just doesn’t seem that interested. Anywhere else to look?

Addendum: From Conor, here are some links:

– Via CapX, which is a great aggregator plus original commentary: https://capx.co/these-profound-reforms-offer-a-chance-to-build-more-and-build-more-beautifully/

– Via Conservative Home, here is the housing lead at Policy Exchange: https://www.conservativehome.com/platform/2020/08/ben-southwood-yes-the-current-planning-system-really-is-at-the-root-of-britains-housing-crisis.html

– Twitter thread from Adam Smith Institute’s Matthew Lesh: https://twitter.com/matthewlesh/status/1291368548590379008

– A summary and link to the full report itself: https://www.gov.uk/government/news/launch-of-planning-for-the-future-consultation-to-reform-the-planning-system

And longer reads, here are a couple of policy papers from the past that helped inform this report:

– Policy Exchange paper: https://policyexchange.org.uk/wp-content/uploads/Rethinking-the-Planning-System-for-the-21st-Century.pdf

– The Roger Scruton chaired Building Better, Building Beautiful Commission: https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/861832/Living_with_beauty_BBBBC_report.pdf

Ocean Grove, New Jersey travel notes

Having not visited the New Jersey shore since I was a kid (and then a very regular visitor), I realized you cannot actually swim there with any great facility. Nor is there much to do, nor should one look forward to the food.

Nonetheless Ocean Grove is one of America’s finest collections of Victorian homes, and the town style is remarkably consistent and intact. Most of all, it is an “only in America” kind of place:

Ocean Grove was founded in 1869 as an outgrowth of the camp meeting movement in the United States, when a group of Methodist clergymen, led by William B. Osborn and Ellwood H. Stokes, formed the Ocean Grove Camp Meeting Association to develop and operate a summer camp meeting site on the New Jersey seashore. By the early 20th century, the popular Christian meeting ground became known as the “Queen of Religious Resorts.” The community’s land is still owned by the camp meeting association and leased to individual homeowners and businesses. Ocean Grove remains the longest-active camp meeting site in the United States.

The pipe organ in the 19th century Auditorium is still one of the world’s twenty largest.

The Auditorium is closed at the moment, but they still sing gospel music on the boardwalk several times a night.

The police department building is merged together with a Methodist church, separate entrances but both under the same roof.

Ocean Grove remains a fully dry city, for the purpose of “keeping the riff-raff out,” as one waitress explained to me. To walk up the Ocean Grove boardwalk into nearby Asbury Park (Cuban and Puerto Rican and Haitian in addition to American black) remains a lesson in the economics of sudden segregation, deliberate and otherwise.

Based on my experience as a kid, I recall quite distinct “personae” for the adjacent beach towns of Asbury Park, Ocean Grove, Bradley Beach, Seaside Heights, Lavallette, Belmar, Spring Lake, and Point Pleasant. This time around I did not see much cultural convergence. That said, Ocean Grove now seems less the province of the elderly and more of a quiet upscale haunt, including for gay couples. As an eight-year-old, it was my least favorite beach town on the strip. Fifty years later, it is now striking to me how much the United States is refusing to be all smoothed over and homogenized.

The demise of the happy two-parent family

Here is new work by Rachel Sheffield and Scott Winship, I will not impose further indentation:

“– We argue, against conventional wisdom on the right, that the decades of research on the effects of single parenthood on children amounts to fairly weak evidence that kids would do better if their actual parents got or stayed married. That is not to say that we think single parenthood isn’t important–it’s a claim about how persuasive we ought to find the research on a question that is extremely difficult to answer persuasively. But even if it’s hard to determine whether kids would do better if their unhappy parents stay together, it is close to self-evident (and uncontroversial?) that kids do better being raised by two parents, happily married.

– We spend some time exploring the question of whether men have become less “marriageable” over time. We argue that the case they have is also weak. The pay of young men fell over the 1970s, 1980s, and early 1990s. But it has fully recovered since. You can come up with other criteria for marriageability–and we show several trends using different criteria–but the story has to be more complicated to work. Plus, if cultural change has caused men to feel less pressure to provide for their kids, then we’d expect that to CAUSE worse outcomes in the labor market for men over time. The direction of causality could go the other way.

– Rather than economic problems causing the increase in family instability, we argue that rising affluence is a better explanation. Our story is about declining co-dependence, increasing individualism and self-fulfillment, technological advances, expanded opportunities, and the loosening of moral constraints. We discuss the paradox that associational and family life has been more resilient among the more affluent. It’s an argument we advance admittedly speculatively, but it has the virtue of being a consistent explanation for broader associational declines too. We hope it inspires research hypotheses that will garner the kind of attention that marriageability has received.

– The explanation section closes with a look at whether the expansion of the federal safety net has affected family instability. We acknowledge that the research on select safety net program generosity doesn’t really support a link. But we also show that focusing on this or that program (typically AFDC or TANF) misses the forest. We present new estimates showing that the increase in safety net generosity has been on the same order of magnitude as the increase in nonmarital birth rates.

– Finally, we describe a variety of policy approaches to address the increase in family instability. These fall into four broad buckets: messaging, social programs, financial incentives, and other approaches. We discuss 16 and Pregnant, marriage promotion programs, marriage penalties, safety net reforms, child support enforcement, Career Academies, and other ideas. We try to be hard-headed about the evidence for these proposals, which often is not encouraging. But the issue is so important that policymakers should keep trying to find effective solutions.”

A highly qualified reader emails me on heterogeneity

I won’t indent further, all the rest is from the reader:

“Some thoughts on your heterogeneity post. I agree this is still bafflingly under-discussed in “the discourse” & people are grasping onto policy arguments but ignoring the medical/bio aspects since ignorance of those is higher.

Nobody knows the answer right now, obviously, but I did want to call out two hypotheses that remain underrated:

1) Genetic variation

This means variation in the genetics of people (not the virus). We already know that (a) mutation in single genes can lead to extreme susceptibility to other infections, e.g. Epstein–Barr (usually harmless but sometimes severe), tuberculosis; (b) mutation in many genes can cause disease susceptibility to vary: diabetes (WHO link) and heart disease are two examples, which is why when you go to the doctor you are asked if you have a family history of these.

It is unlikely that COVID is type (a), but it’s quite likely that COVID is type (b). In other words, I expect that there is a certain set of genes which (if you have the “wrong” variants) predispose you to have a severe case of COVID, another set of genes which (if you have the “wrong” variants) predispose you to have a mild case, and if you’re lucky enough to have the right variants of these you are most likely going to get a mild or asymptomatic case.

There has been some good preliminary work on this which was also under-discussed:

- NEJM paper studied Italy and Spain and found that genes controlling blood type predicted disease severity. https://www.nejm.org/doi/full/10.1056/NEJMoa2020283 (NIH director had a blog post about it with layperson’s explanation here https://directorsblog.nih.gov/2020/06/18/genes-blood-type-tied-to-covid-19-risk-of-severe-disease/)

- NYTimes covered a follow-up paper showing that this varies by ethnicity, and in particular ~60% of South Asians carry these genes compared to only 4% of East Asians and almost no Africans. https://www.nytimes.com/2020/07/04/health/coronavirus-neanderthals.html

You will note that the majority of doctors/nurses who died of COVID in the UK were South Asian. This is quite striking. https://www.nytimes.com/2020/04/08/world/europe/coronavirus-doctors-immigrants.html — Goldacre et al’s excellent paper also found this on a broader scale (https://www.medrxiv.org/content/10.1101/2020.05.06.20092999v1). From a probability point of view, this alone should make one suspect a genetic component.

There is plenty of other anecdotal evidence to suggest that this hypothesis is likely as well (e.g. entire families all getting severe cases of the disease suggesting a genetic component), happy to elaborate more but you get the idea.

Why don’t we know the answer yet? We unfortunately don’t have a great answer yet for lack of sufficient data, i.e. you need a dataset that has patient clinical outcomes + sequenced genomes, for a significant number of patients; with this dataset, you could then correlate the presence of genes {a,b,c} with severe disease outcomes and draw some tentative conclusions. These are known as GWAS (genome-wide association studies), as you probably know.

The dataset needs to be global in order to be representative. No such dataset exists, because of the healthcare data-sharing problem.

2) Strain

It’s now mostly accepted that there are two “strains” of COVID, that the second arose in late January and contains a spike protein variant that wasn’t present in the original ancestral strain, and that this new strain (“D614G”) now represents ~97% of new isolates. The Sabeti lab (Harvard) paper from a couple of days ago is a good summary of the evidence. https://www.biorxiv.org/content/10.1101/2020.07.04.187757v1 — note that in cell cultures it is 3-9x more infective than the ancestral strain. Unlikely to be that big of a difference in humans for various reasons, but still striking/interesting.

Almost nobody was talking about this for months, and only recently was there any mainstream coverage of this. You’ve already covered it, so I won’t belabor the point.

So could this explain Asia/heterogeneities? We don’t know the answer, and indeed it is extremely hard to figure out the answer (because as you note each country had different policies, chance plays a role, there are simply too many factors overall).

I will, however, note that the distribution of each strain by geography is very easy to look up, and the results are at least suggestive:

- Visit Nextstrain (Trevor Bedford’s project)

- Select the most significant variant locus on the spike protein (614)

- This gives you a global map of the balance between the more infective variant (G) and the less infective one (D) https://nextstrain.org/ncov/global?c=gt-S_614

- The “G” strain has grown and dominated global cases everywhere, suggesting that it really is more infective

- A cursory look here suggests that East Asia mostly has the less infective strain (in blue) whereas the rest of the world is dominated by the more infective strain:

– Compare Western Europe, dominated by the “yellow” (more infective) strain:

You can do a similar analysis of West Coast/East Coast in February/March on Nextstrain and you will find a similar scenario there (NYC had the G variant, Seattle/SF had the D).

Again, the point of this email is not that I (or anyone!) knows the answers at this point, but I do think the above two hypotheses are not being discussed enough, largely because nobody feels qualified to reason about them. So everyone talks about mask-wearing or lockdowns instead. The parable of the streetlight effect comes to mind.”
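For readers who want to see what the reader's first hypothesis would look like as a calculation, here is a minimal sketch of a single-variant association test: tally carriers and non-carriers against severe and mild outcomes, then compute an odds ratio and a chi-square statistic. The counts are invented for illustration and are not taken from any of the papers above. The function names are hypothetical, and a real GWAS would test a very large number of variants, correct for multiple comparisons, and adjust for ancestry.

```python
def odds_ratio(carrier_severe, carrier_mild, noncarrier_severe, noncarrier_mild):
    """Odds of a severe outcome among carriers relative to non-carriers."""
    return (carrier_severe * noncarrier_mild) / (carrier_mild * noncarrier_severe)


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square statistic (no continuity correction) for the 2x2 table [[a, b], [c, d]]."""
    n = a + b + c + d
    numerator = n * (a * d - b * c) ** 2
    denominator = (a + b) * (c + d) * (a + c) * (b + d)
    return numerator / denominator


# Made-up counts for one hypothetical variant:
# rows are carriers / non-carriers, columns are severe / mild cases.
carrier_severe, carrier_mild = 30, 70
noncarrier_severe, noncarrier_mild = 15, 185

ratio = odds_ratio(carrier_severe, carrier_mild, noncarrier_severe, noncarrier_mild)
statistic = chi_square_2x2(carrier_severe, carrier_mild, noncarrier_severe, noncarrier_mild)

print(f"odds ratio: {ratio:.2f}")
print(f"chi-square (1 degree of freedom): {statistic:.2f}")
```

An odds ratio well above 1 with a large chi-square statistic would flag the variant for follow-up. With many variants tested at once, the bar for calling any single result significant has to be much stricter than the usual single-test threshold.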

The Lesson of the Spoons

In a story beloved by economists it’s said that Milton Friedman was once visiting China when he was shocked to see that, instead of modern tractors and earth movers, thousands of workers were toiling away building a canal with shovels. He asked his host, a government bureaucrat, why more machines weren’t being used. The bureaucrat replied, “You don’t understand. This is a jobs program.” To which Milton responded, “Oh, I thought you were trying to build a canal. If it’s jobs you want, you should give these workers spoons, not shovels!”

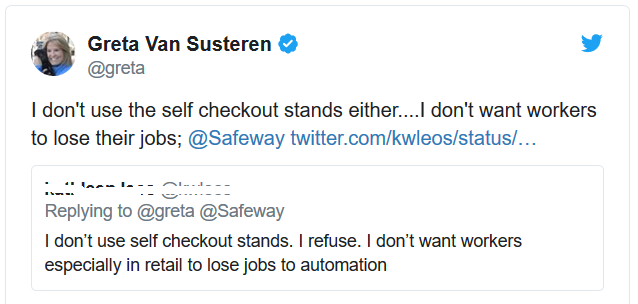

A funny story but one I was reminded of by Greta van Susteren’s not so funny tweet.

Bear in mind that Van Susteren has 1.2 million followers and, according to Forbes, is the 94th most powerful woman in the world.

Of course, there is something odd about using advanced technology to do a job that could be done by millions of immigrants who would be quite happy for the work, but Van Susteren is also against immigration.

Is there anything to be said for banning automation in low-skilled work? Let’s be charitable and assume that there is a problem with not enough work for low-skill workers. It’s unlikely that the best way to address this problem is by banning improvements in productivity. Which sectors are to be artificially restrained and by how much? Should fast checkout workers be banned? Should we prevent customers from walking the aisles and filling their own shopping carts? Remember, self-selection of goods was also once an innovation. As Friedman pointed out, it’s all too easy to reduce productivity.

To the extent that low-skill workers can’t find work (i.e. ZMP workers) the appropriate policy is a wage subsidy as Nobelist Edmund Phelps has suggested (see also the MRU video and Oren Cass on wage subsidies). A wage subsidy is better targeted than the Luddite smashing of machines and because it doesn’t prevent productivity from growing it makes for greater wealth to support the subsidy.