The Google-Trolley Problem

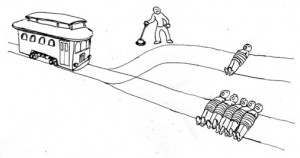

As you probably recall, the trolley problem concerns a moral dilemma. You observe an out-of-control trolley hurtling towards five people who will surely die if it hits them. You can throw a switch and divert the trolley down a side track, saving the five but certainly killing an innocent bystander. There is no opportunity to warn anyone or otherwise avoid the disaster. Do you throw the switch?

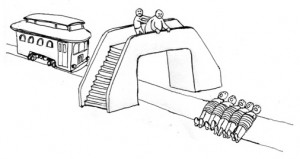

In a second version, you stand on a bridge next to a fat man. The only way to stop the trolley from killing the five is to push the fat man in front of it. Do you do so? Some people say no to both, and many say yes to throwing the switch but no to pushing, appealing to the distinction between omission and commission. You can read about the moral psychology here.

I want to ask a different question. Suppose that you are a programmer at Google and you are tasked with writing the code for the Google-trolley. What code do you write? Should the trolley divert itself to the side track? Should the trolley run itself into a fat man to save five? If the Google-trolley does run itself into the fat man to save five, should Sergey Brin be charged? Do your intuitions about the trolley problem change when we switch from the near view to the far (programming) view?

I think these questions are very important. Notice that the trolley problem is a thought experiment, but the Google-trolley problem is a decision that must now be made.