Why Do Experiments Make People Uneasy?

People were outraged in 2014 when Facebook revealed that it had run “psychological experiments” on its users. Yet Facebook changes the way it operates on a daily basis and few complain. Indeed, every change in the way that Facebook operates is an A/B test in which one arm is never run, yet people object to A/B tests but not to either A or B for everyone. Why?

In an important and sad new paper, Meyer et al. show in a series of 16 tests that unease with experiments is replicable and general. The authors, for example, ask 679 people in a survey to rate the appropriateness of three interventions designed to reduce hospital infections. The three interventions are:

- Badge (A): The director decides that all doctors who perform this procedure will have the standard safety precautions printed on the back of their hospital ID badges.

- Poster (B): The director decides that all rooms where this procedure is done will have a poster displaying the standard safety precautions.

- A/B: The director decides to run an experiment by randomly assigning patients to be treated by a doctor wearing the badge or in a room with the poster. After a year, the director will have all patients treated in whichever way turns out to have the highest survival rate.

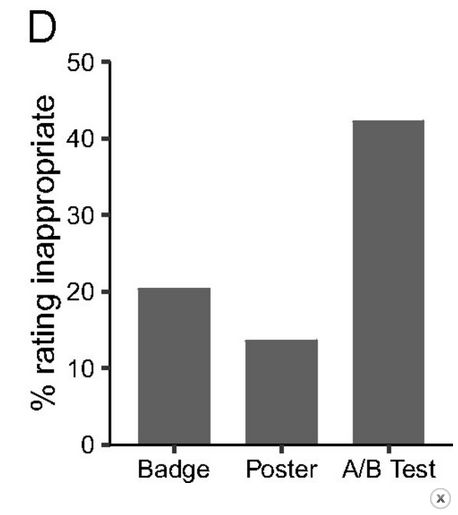

It’s obvious to me that the A/B test is much better than either A or B, and indeed the authors even put their thumb on the scale a bit, because the A/B scenario specifically mentions the positive goal of learning. Yet, in multiple samples, people consistently rate the A/B scenario as more inappropriate than either A or B (see figure above).
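To make that intuition concrete, here is a minimal Python sketch with entirely made-up numbers (none of them come from Meyer et al.). It assumes, hypothetically, that Poster truly has a 92% survival rate and Badge 90%, that the director has no idea which is better, that 2,000 patients are treated per year, and that the planning horizon is ten years. The constant and function names are illustrative inventions. Committing blindly to A or B yields the average of the two rates in expectation; the A/B policy gives the same expected survival in the experimental year and then, most of the time, locks in the better arm.

```python
import random

# Hypothetical true survival rates, unknown to the director (illustrative assumption).
P_BADGE = 0.90
P_POSTER = 0.92
PATIENTS_PER_YEAR = 2000  # hypothetical
YEARS = 10                # hypothetical planning horizon


def blind_commit_value() -> float:
    """Expected survival if the director, with no information, picks Badge or
    Poster (a 50/50 guess) and uses it for every patient in every year."""
    return (P_BADGE + P_POSTER) / 2


def ab_then_commit_value(trials: int = 200, seed: int = 0) -> float:
    """Expected survival of the A/B policy, averaged over simulated trials:
    randomize patients between the arms in year 1, then use whichever arm
    had the higher observed survival rate for all remaining years."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        treated = {"badge": 0, "poster": 0}
        survived = {"badge": 0, "poster": 0}
        # Year 1: each patient is randomly assigned to one arm.
        for _ in range(PATIENTS_PER_YEAR):
            arm = rng.choice(["badge", "poster"])
            p = P_BADGE if arm == "badge" else P_POSTER
            treated[arm] += 1
            survived[arm] += rng.random() < p  # True counts as 1
        # Pick the arm with the higher observed survival rate.
        rate = {arm: survived[arm] / max(treated[arm], 1) for arm in treated}
        winner = max(rate, key=rate.get)
        p_winner = P_BADGE if winner == "badge" else P_POSTER
        # In expectation, the randomized year is no worse than a blind guess.
        year1 = (P_BADGE + P_POSTER) / 2
        total += (year1 + (YEARS - 1) * p_winner) / YEARS
    return total / trials


if __name__ == "__main__":
    print(f"blind A-or-B: {blind_commit_value():.4f}")
    print(f"A/B then commit: {ab_then_commit_value():.4f}")
```

Under these assumptions the A/B policy comes out ahead: the year of randomization costs nothing in expectation relative to a blind guess, while the information it produces pays off in every later year.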


Why do people do this? One possibility is that survey respondents have some prejudgment about whether the Badge or the Poster is the better approach, so those who think Badge is better rate the A/B test as inappropriate, as do those who think Poster is better. To examine this possibility, the authors ask about a doctor who prescribes Drug A to all of his patients, or Drug B to all of them, or who randomizes between A and B for a year and then chooses. Why anyone would think Drug A is better than Drug B or vice versa is a mystery, but once again the A/B experiment is judged more inappropriate than prescribing Drug A or Drug B to everyone.

Maybe people don’t like the idea that someone is rolling dice to decide on medical treatment. In another experiment, the authors describe a situation where some doctors prescribe Drug A and others prescribe Drug B, and which drug a patient receives depends on which doctor is available when the patient walks into the clinic. Here no one is rolling dice and the effect is smaller, but respondents continue to rate the A/B experiment as more inappropriate.

The lack of consent does bother people, but only in the explicit A/B experiment and hardly ever in the implicit all-A or all-B experiments. The authors also show that the effect persists in non-medical settings.

One factor that emerges from respondents’ comments is that an experiment forces people to reckon with the idea that even experts don’t know the right thing to do, and that this confession of ignorance bothers people. (This is also one reason why people may prefer pundits who always “know” the right thing to do even when they manifestly do not.)

Surprisingly and depressingly, having a science degree does not solve the problem. In one sad experiment the authors run the test at an American HMO. Earlier surveys had found huge support for the idea that the HMO should engage in “continuous learning” and that “a learning health system is necessary to provide safe, effective, and beneficial patient-centered care”. Yet when push came to shove, exactly the same pattern of accepting A or B but not an A/B test was prevalent.

Unease with experiments appears to be general and deep. Widespread random experiments are a relatively new phenomenon, and the authors speculate that the unease reflects a lack of familiarity. But why is the widespread use of random experiments new? In an earlier post, I wrote about ideas behind their time, ideas that could have come much earlier but didn’t. Random experiments could have come thousands of years earlier but didn’t. Thus, I think the authors have the story backward. Random experiments do not generate unease because they are new; they are new because they generate unease.

Our reluctance to conduct experiments burdens us with ignorance. Understanding and overcoming experiment-unease is an important area for experimental research. If we can overcome our unease.