Category: Economics

How to make GPT worse at microeconomics

Somehow missed this the first time I looked, but GPT-4 got *significantly worse* at microeconomics after it was trained to tell you what you want to hear. pic.twitter.com/8tDtTwf1II

— Ben Levinstein (@ben_levinstein) March 22, 2023

Microeconomics has gone up in my eyes…

The Great Digital Divide: Panic at Twitter Speed, Respond at AOL Speed

In The New Madness of Crowds I argued that SVB failed because “Greater transparency and lower transaction costs have intensified the madness of the masses and expanded their reach.” A piece by Miao, Zuckerman and Eisen in the WSJ now adds to to the other side of the problem. Depositors were working on twitter time, the regulatory apparatus was not.

Depositors were draining their accounts via smartphone apps and telling their startup networks to do the same. But inside Silicon Valley Bank, executives were trying to navigate the U.S. banking system’s creaky apparatus for emergency lending and to persuade its custodian bank to stay open late to handle a multibillion-dollar transfer.

As Matt Levine summarizes:

Instead of hearing a rumor at the coffee shop and running down to the bank branch to wait on line to withdraw your money, now you can hear a rumor on Twitter or the group chat and use an app to withdraw money instantly. A tech-friendly bank with a highly digitally connected set of depositors can lose 25% of its deposits in hours, which did not seem conceivable in previous eras of bank runs.

But the other part of the problem is that, while depositors can panic faster and banks can give them their money faster, the lender-of-last-resort system on which all of this relies is still stuck in a slower, more leisurely era. “When the user interface improves faster than the core system, it means customers can act faster than the bank can react,” wrote Byrne Hobart. You can panic in an instant and withdraw your money with an app, but the bank can’t get more money without a series of phone calls and test trades that can only happen during regular business hours.

It’s not obvious whether the right thing to do is slow down depositors, at least in some circumstances, or speed up regulators but the two systems can’t work well at different speeds.

In Praise of the Danish Mortgage System

When interest rates go up, the price of bonds goes down. As Tyler and I discuss in Modern Principles, the inverse relationship between interest rates and prices holds for any asset that pays out over time. In particular, as Patrick McKenzie points out, when interest rates go up, the value of a loan goes down. McKenzie suggests that you can use this fact to buy back your mortgage from a bank when interest rates rise.

For example, suppose you get a 500k 30-year fixed rate mortgage when interest rates are 3%–that loan obligates you to pay $2108 per month for 30 years. Now suppose that interest rates go to 6%, now that same stream of payments is only worth, in present value, about $358k. Thus, the bank should be willing to let you buy your mortgage for $358k–that is, after all, what the market would pay for such a stream of payments if your mortgage was securitized.

I am skeptical that I could find the right person at the right bank to actually authorize a deal like this but it turns out that the Danish mortgage system is built to allow this relatively easily. The Danish mortgage system is built on the match principle:

JYSKE Bank: The match-funding principle entails that for every loan made by the mortgage bank, a new bond is issued with matching cash-flow properties. This eliminates mismatches in cash-flows and refinancing risk for the mortgage bank, which also secures payments for the bondholder. In the Danish mortgage system the mortgage bank functions as an intermediary between the investor and borrower. Mortgage banks fund loans on a current basis, meaning that the bond must be sold before the loan can be given. This also entails that the market price of the bond determines the loan rate. The loan is therefore equal to the investment, which passes through the mortgage bank.

In essence, in the Danish system, mortgage banks are more like a futures clearinghouse or a platform (ala Airbnb) than a lender–they take on some credit risk but not interest rate risk.

Thus, if a Danish borrower takes out a 500k mortgage at 3% interest and then rates rise to 6%, the value of that mortgage falls to $358k and the borrower could go to the market, buy their own mortgage, deliver it to the bank, and, in this way, extinguish the loan. Since the value of homes also falls as interest rates rise this is also a neat bit of insurance. Remarkable!

The Danish mortgage market appears to be very successful and so may be a model for American reform:

JYSKE Bank: The Danish Mortgage Bond Market is one of the oldest and most stable in the world, tracing its roots all the way back to 1797 with no records of defaults since inception. Furthermore, the market value of the Danish Mortgage Bond Market is approx. EUR 402bn, making it the largest mortgage bond market in Europe.

“This banking crisis won’t wreck the economy”

Here is my latest Bloomberg column, penned on Sunday, these days the VIXes are back down to normal ranges. Here is one excerpt:

One reason for (relative) optimism is simply that the world, and policymakers, have been preparing for this scenario for some time. Not only do memories of 2008-2009 remain fresh, but we are coming out of a pandemic that in macroeconomic terms induced unprecedented policy reactions in most countries. Before 2008, in contrast, macroeconomic peace had reigned and there was common talk of “ the great moderation,” meaning that the business cycle might be a thing of the past. We now know that view is absurdly wrong.

Circa 2023, we can plausibly expect further disruptions and macroeconomic problems. But this time around the element of surprise is going to be missing, and that should limit the potential for a true financial sector explosion.

The kinds of bank financial problems we are facing also lend themselves to relatively direct solutions. Higher interest rates do mean that the bonds and other assets that many banks hold have lower values, which in turn could imply liquidity and solvency problems. But those underlying financial assets usually are set to pay off their nominal values as expected, as with the government securities held by Silicon Valley Bank. That makes it easier for the Federal Reserve or government to arrange purchases of a failed institution, or to offer discount window borrowing. The losses are relatively transparent and easy to manage, at least compared to 2008-2009, and in most cases repayment is assured, even if those cash flows have lower expected values today, due to higher discount rates.

And:

The various bailouts we have been engaging in are not costless. For instance, they may induce greater moral hazard problems the next time around. But that does not mean we should expect a spectacular financial crash right now. More likely, we will see increases in deposit insurance premiums and also higher capital requirements for financial institutions. The former will fund the current bailouts, and the latter will aim to limit such bailouts in the future. The actual consequences will be a bleeding of funds from the banking system, tighter credit for regional and local lending, and slower rates of economic growth, especially for small and mid-sized firms. Those are reasons to worry, but they do not portend explosive problems right now.

In short, the rational expectation is that the US will muddle through its current problems and patch up the present at the expense of the future. For better or worse, that is how we deal with most of our crises. We hope that America’s innovativeness and strong talent base will make those future problems manageable.

Of course if I am wrong, we will know pretty soon.

My podcast with Bari Weiss, she interviews me

Titled “Bank runs, crypto scams, and world-transforming AI,” plus a round of overrated vs. underrated, among other topics, including inflation.

Lots of fun, I feel Bari and I always have very good energy together…

Time Passages

Here’s an interesting idea it wouldn’t have occured to me to ask. What is the length of time described in the average 250 words of narration and how has this changed over time? Most famously James Joyce’s “Ulysses” is a long novel about single day with many pages describing brief experiences in minute detail. In contrast, Olaf Stapledon’s Last and First Men covers 2 billion years in fewer words than Joyce uses to cover a single day.

Using human readers grading 1000 passages, Underwood et al. (2018) finds that the average length of time described in a typical passage has declined substantially since the 1700s, from a day to about an hour so a decline by a factor of 24. Writers have become much more focused on describing individual experiences than events.

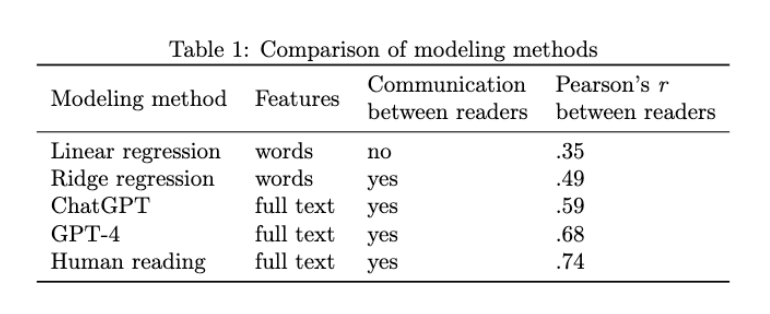

In a blog post, Underwood revisits his earlier work but this time comparing human readers with various statistical techniques and also GPT. To use GPT he prompts

Read the following passage of fiction. Then do five things. 1: Briefly summarize the passage. 2: Reason step by step to decide how much time is described in the passage. If the passage doesn’t include any explicit reference to time, you can guess how much time the events described would have taken. Even description can imply the passage of time by describing the earlier history of people or buildings. But characters’ references to the past or future in spoken dialogue should not count as time that passed in the scene. Report the time using units of years, weeks, days, hours, or minutes. Do not say zero or N/A. 3: If you described a range of possible times in step 2 take the midpoint of the range. Then multiply to convert the units into minutes. 4: Report only the number of minutes elapsed, which should match the number in step 3. Do not reply N/A. 5: Given the amount of speculation required in step 2, describe your certainty about the estimate–either high, moderate, or low. The passage follows: <omitting this to save space>

Here’s an example of the analysis:

ABRIDGED PASSAGE FROM Jane Eyre: And signs, for aught we know, may be but the sympathies of Nature with man. When I was a little girl, only six years old, I one night heard Bessie Leaven say to Martha Abbot that she had been dreaming about a little child … Of late I had often recalled this saying and this incident; for during the past week scarcely a night had gone over my couch that had not brought with it a dream of an infant, which I sometimes hushed in my arms, sometimes dandled on my knee, sometimes watched playing with daisies on a lawn, or again, dabbling its hands in running water. It was a wailing child this night, and a laughing one the next: now it nestled close to me, and now it ran from me; but whatever mood the apparition evinced, whatever aspect it wore, it failed not for seven successive nights to meet me the moment I entered the land of slumber.

ASSISTANT REPLIES.

1: The narrator recalls a saying about dreaming of children being a sign of trouble and remembers an incident from her childhood. She then describes her recent dreams of infants over the past week.

2: The passage mentions the narrator’s childhood memory, which happened when she was six years old, and the past week of dreams. The time elapsed in the passage is the week of dreams.

3: 1 week has elapsed. At seven days a week that’s 7 days. 24 hours in a day, so 168 hours. 60 minutes in an hour, so 10080 minutes.

4: 10080 minutes.

5: Moderate confidence, as the childhood memory is not counted as time passing in the scene.

Overall, Underwood finds that GPT-4 is more accurate than previous statistical techniques in that its judgments correlate with those of human readers nearly as much as human readers correlate with themselves.

Addendum: See also my paper with Tyler on how to use GPT models.

Complexity and time

We provide experimental evidence that core intertemporal choice anomalies — including extreme short-run impatience, structural estimates of present bias, hyperbolicity and transitivity violations — are driven by complexity rather than time or risk preferences. First, all anomalies also arise in structurally similar atemporal decision problems involving valuation of iteratively discounted (but immediately paid) rewards. These computational errors are strongly predictive of intertemporal decisions. Second, intertemporal choice anomalies are highly correlated with indices of complexity responses including cognitive uncertainty and choice inconsistency. We show that model misspecification resulting from ignoring behavioral responses to complexity severely inflates structural estimates of present bias.

That is from a new NBER working paper by Benjamin Enke, Thomas Graeber, and Ryan Oprea.

The New Madness of Crowds

USDC and USDT are two well-known stablecoins. USDC is fully backing by safe, liquid assets, which are verified monthly by a major U.S. accounting firm under the scrutiny of U.S. state regulators. USDT (Tether) is an unregulated stablecoin with questionable asset backing and opaque operations, founded by an actor from the Mighty Ducks and supported by a bank established by one of the creators of Inspector Gadget.

Yet, when Silicon Valley Bank (SVB) went into crisis, USDC broke the peg, and people fled to the nutty, opaque, unregulated Inspector Gadget backed coin.

(USDC is in blue and measured on the right axis and spiked below par, USDT is in red and measured on the left axis and spiked over par.)

Now, this is in some sense “explainable”. USDC kept some money at SVB and Tether (probably) did not. Matthew Zeitlin, channeling Matt Levine, put it this way:

One problem with being transparently and fully backed is that sometimes your investors can transparently see how much of your assets are in a bank that went bottom up, Tether does not have this problem.

SVB’s troubles stemmed from its investments in long-term government bonds, which dropped in value as interest rates rose. However, the bank’s fundamentals were not that dire. If no one had panicked, SVB could probably have paid off all its depositors in the ordinary course of business. The problem happened because some investors saw information they thought others might interpret negatively, prompting them to withdraw their funds. This led others to believe the information was indeed bad, validating the initial belief and causing a massive $42 billion withdrawal in a single day. Had transparency been less and transaction costs more, this wouldn’t have happened and, quite possibly, everything would have been fine.

Indeed, in the past, banks probably become insolvent on a mark-to-market basis but few people noticed. Today, a bank dips below the line and depositors are heading to the door.

Indeed, in the past, banks probably become insolvent on a mark-to-market basis but few people noticed. Today, a bank dips below the line and depositors are heading to the door.

SVB’s fundamentals may have been worse than I believe, poor management undoubtedly played a role. But fundamentals aren’t driving the boat; the boat is being driven by sunspots, memes, and vibes. Tether’s fundamentals are much worse than SVBs ever were. And USDC was even less imperiled than SVB, yet people ran to Tether. Why? Because there wasn’t a Tether sunspot. But be careful. Tether’s stability doesn’t mean that its fundamentals are strong. Not even close. Stability doesn’t mean good fundamentals and instability doesn’t mean bad fundamentals. The mad crowd is capricious. Tether’s time is coming, but no one knows what will spark the fire.

Greater transparency and lower transaction costs have intensified the madness of the masses and expanded their reach. From finance to politics and culture, no domain remains untouched by the new madness of crowds.

Hat tip: Connor Tabarrok and Max Tabarrok.

What is the single best way of improving your GPT prompts?

I have a nomination, and here is an excerpt from my new paper with Alex:

You often can get a better and more specific answer by asking for an answer in the voice of another person, a third party. Here goes: What are the causes of inflation, as it might be explained by Nobel Laureate Milton Friedman?

By mentioning Friedman you are directing the GPT to look at a more intelligent segment of the potential answer space and this directing will usually get you a better answer than if you just ask “What are the causes of inflation?” Similarly, you want all of the words used in your query to be intelligent-sounding. Of course, you may not agree with the views of Friedman on inflation. Here are a few economists who are well known and have written a lot on a wide variety of issues:

Paul Samuelson

Milton Friedman

Susan Athey

Paul Krugman

Tyler Cowen

Alex TabarrokBut you don’t have to memorize that list, and it is not long enough anyway. When in doubt, ask GPT itself who might be the relevant experts. How about this?: “I have a question on international trade. Which economists in the last thirty years might be the smartest experts on such questions?” The model will be very happy to tell you, and then you can proceed with your further queries.

Of course this advice generalizes far beyond economics. A friend of mine queried GPT-4 about Jon Fosse, a Norwegian author, and received a wrong answer. He retried the same question, but asking also for an answer from a Fosse expert. The response was then very good.

The title of my paper with Alex is “How to Learn and Teach Economics with Large Language Models, Including GPT,” but again most of the advice is generalizable to education with GPTs more generally. Recommended, the paper is full of tips for using GPT models in more effective ways.

Imagine if humanity ends up divided into two classes of people: those who are willing/not embarrassed to tack on extra “silly bits” to their prompts, and those who are not so willing. The differences in capabilities will end up being remarkable. Are perhaps many elites and academics unwilling to go the extra mile in their prompts? Do they feel a single sentence question ought to be enough? Are they in any case constitutionally unused to providing extra context for their requests?

Time will tell.

Lots of announcements from Glen Weyl

Today may be the most important/culminating of my professional life. Together with dozens of colleagues and collaborators, I’m releasing/launching a series of papers, initiatives and other work. Thanks especially to one of my favorite journalists, @RanaForoohar,

— ⿻(((E. Glen Weyl/衛谷倫))) 🇺🇸/🇩🇪/🇹🇼 🖖 (@glenweyl) March 19, 2023

Chat Law Goes Global

PricewaterhouseCoopers (PWC), the global business services firm, has signed a deal with OpenAI for access to “Harvey”, OpenAI’s Chatbot for legal services.

Reuters: PricewaterhouseCoopers said Wednesday that it will give 4,000 of its legal professionals access to an artificial intelligence platform, becoming the latest firm to introduce generative AI technology for legal work.

PwC said it partnered with AI startup Harvey for an initial 12-month contract, which the accounting and consulting firm said will help lawyers with contract analysis, regulatory compliance work, due diligence and other legal advisory and consulting services.

PwC said it will also determine ways for tax professionals to use the technology.

IBM’s Watson was a failure so we will see but, yeah I will say it, this time feels different. For one, lawyers deal with text where GPTs excel. Second, GPTs have already revolutionized software coding and unlike Watson I am using GPTs every day for writing and researching and it works. The entire world of white collar work is going to be transformed over the next year. See also my paper with Tyler, How to Learn and Teach Economics with Large Language Models, Including GPT.

Teaching and Learning Economics with the AIs

Tyler and I have a new paper, How to Learn and Teach Economics with Large Language Models, Including GPT:

GPTs, such as ChatGPT and Bing Chat, are capable of answering economics questions, solving specific economic models, creating exams, assisting with research, generating ideas, and enhancing writing, among other tasks. This paper highlights how these innovative tools differ from prior software and necessitate novel methods of interaction. By providing examples, tips, and guidance, we aim to optimize the use of GPTs and LLMs for learning and teaching economics effectively.

Most of the paper is about how to use GPTs effectively but we also make some substantive points that many people are missing:

GPTs are not simply a chatty front end to the internet. Some GPTs like ChatGPT have no ability to search the internet. Others, like Bing Chat, can search the internet and might do so to aid in answering a question, but that is not fundamentally how they work. It is possible to ask a GPT questions that no one has ever asked before. For example, we asked how Fred Flintstone was like Hamlet, and ChatGPT responded (in part):

Fred Flintstone and Hamlet are two vastly different characters from different time periods, cultures, and mediums of storytelling. It is difficult to draw direct comparisons between the two.

However, one possible point of similarity is that both characters face existential dilemmas and struggles with their sense of purpose and identity. Hamlet is plagued by doubts about his ability to avenge his father’s murder, and his own worthiness as a human being. Similarly, Fred Flintstone often grapples with his place in society and his ability to provide for his family and live up to his own expectations.

Not a bad answer for a silly question and one that (as far as we can tell) cannot be found on the internet.

GPTs have “read” or “absorbed” a great amount of text but that text isn’t stored in a database; instead the text was used to weight the billions of parameters in the neural net. It is thus possible to run a GPT on a powerful home computer. It would be very slow, since computing each word requires billions of calculations, but unlike storing the internet on your home computer, it is feasible to run a GPT on a home computer or even (fairly soon) on a mobile device.

GPTs work by predicting the next word in a sequence. If you hear the phrase “the Star-Spangled”, for example, you and a GPT might predict that the word “Banner” is likely to come next. This is what GPTs are doing but it would be a mistake to conclude that GPTs are simply “autocompletes” or even autocompletes on steroids.

Autocompletes are primarily statistical guesses based on previously asked questions. GPTs in contrast have some understanding (recall the as if modifier) of the meaning of words. Thus GPTs understand that Red, Green, and Blue are related concepts that King, Queen, Man and Woman are related in a specific way such that a woman cannot be a King. It also understands that fast and slow are related concepts, such that a car cannot be going fast and slow at the same time but can be fast and red and so forth. Thus GPTs are able to “autocomplete” sentences which have never been written before, as we described earlier.2 More generally, it seems likely that GPTs are building internal models to help them predict the next word in a sentence (e.g. Li et al. 2023).

The paper is a work in progress so comments are welcome.

Evading your local monopsonist

By Matthew E. Kahn and Joseph Tracy:

Over the last thirty years, there has been a rise in several empirical measures of local labor market monopsony power. The monopsonist has a profit incentive to offer lower wages to local workers. Mobile high skill workers can avoid the lower monopsony wages by moving to other more competitive local labor markets featuring a higher skill price vector. We develop a Roy Model of heterogeneous worker sorting across local labor markets that has several empirical implications. Monopsony markets are predicted to experience a “brain drain” over time. Using data over four decades we document this deskilling associated with local monopsony power. This means that observed cross-sectional wage gaps in monopsony markets partially reflects sorting on worker ability. The rise of work from home may act as a substitute for high-skill worker migration from monopsony markets.

Here is the full NBER working paper. Many university faculty of course are subject to monopsony power…

UK to Adopt Pharmaceutical Reciprocity!

More than twenty years ago I wrote:

If the United States and, say, Great Britain had drug-approval reciprocity, then drugs approved in Britain would gain immediate approval in the United States, and drugs approved in the United States would gain immediate approval in Great Britain. Some countries such as Australia and New Zealand already take into account U.S. approvals when making their own approval decisions. The U.S. government should establish reciprocity with countries that have a proven record of approving safe drugs—including most west European countries, Canada, Japan, and Australia. Such an arrangement would reduce delay and eliminate duplication and wasted resources. By relieving itself of having to review drugs already approved in partner countries, the FDA could review and investigate NDAs more quickly and thoroughly.

Well, it’s happening! After Brexit, there were concerns that drugs would take longer to get approved in the UK because the EU was a much larger market. To address this, the UK introduced the “reliance procedure” which recognized the EU as a stringent regulator and guaranteed approval in the UK within 67 days for any drug approved in the EU. The Reliance Procedure essentially kept the UK in the pre-Brexit situation, and was supposed to be temporary. However, recognizing the logic of recognizing the EU, the UK is now saying that it will recognize other countries.

Our aim is to extend the countries whose assessments we will take account of, increasing routes to market in the UK. We will communicate who these additional regulators are and publish detailed guidance about this new framework in due course, including any transition arrangements for applications received under existing frameworks.

The UK is already participating in a mutual recognition agreement with the FDA over some cancer drugs. Therefore, it seems likely that the FDA will be among the regulatory authorities that the UK recognizes. If the UK does recognize the FDA, then we only need the FDA to recognize the UK for my scenario from more than 20 years ago to be fulfilled.

It’s thus time to revisit the Lee-Cruz bill of 2015, which proposed the Result Act (I was an influence).

Reciprocity Ensures Streamlined Use of Lifesaving Treatments Act (S. 2388), or the RESULT Act,” which would amend the Food, Drug and Cosmetic Act to allow for reciprocal approval of drugs.

Addendum: Many previous posts on FDA reciprocity.

Why Matt Yglesias should be a classical liberal

Matt recently wrote a (gated) piece arguing that we should raise American taxes and increase the American welfare state. I never understand how this squares with this desire to reach one billion Americans in the not too distant future. To be clear, I also favor a much larger population.

If you ever have done hiring, and I believe Matt has at Vox, you will understand that so, so often selection is more important than ex post incentives. That is, you need to get the right people into your firm, start-up, media venture, non-profit, or whatever. And the right people can be very hard to find and attract, as I think Matt also has noted.

Now, on which basis do you wish to select people arriving into your country? Do you wish to offer them a lower-risk, more secure, more egalitarian, less upside option? Or do you want to reward ambition to a disproportionate degree? Don’t forget you are building up the home base for most of the world’s TFP!

To me it is obvious that you should prefer the structure of rewards that attracts the harder-working, more ambitious people. You want to send out the inegalitarian bat signal.

Of course, if America is headed toward one billion people (or even much less) in the foreseeable future, most of the country will end up being relatively recent immigrants and their descendants. So the selection of those immigrants really is of vital importance. The more open is immigration, the more important it is to have the right incentives for selection and to be sending out the right bat signal. Furthermore, the less immigration selects on formal credentials and a points system, the more important it is to attract the properly ambitious by setting the right incentives and the right bat signal in place.

Oddly, it is anti-immigration conservatives who should be more complacent about increasing welfare spending. If few people are entering, and if you require formal credentials to enter and stay, selection problems will be correspondingly smaller.

And that is why Matt should be a classical liberal. We are rebuilding the nation all the time, most of all if we listen to Matt Yglesias.