Category: Web/Tech

Dwarkesh on slow AI take-off

I’ve probably spent over a hundred hours trying to build little LLM tools for my post production setup. And the experience of trying to get them to be useful has extended my timelines. I’ll try to get the LLMs to rewrite autogenerated transcripts for readability the way a human would. Or I’ll try to get them to identify clips from the transcript to tweet out. Sometimes I’ll try to get it to co-write an essay with me, passage by passage. These are simple, self contained, short horizon, language in-language out tasks – the kinds of assignments that should be dead center in the LLMs’ repertoire. And they’re 5/10 at them. Don’t get me wrong, that’s impressive.

But the fundamental problem is that LLMs don’t get better over time the way a human would. The lack of continual learning is a huge huge problem. The LLM baseline at many tasks might be higher than an average human’s. But there’s no way to give a model high level feedback. You’re stuck with the abilities you get out of the box. You can keep messing around with the system prompt. In practice this just doesn’t produce anything even close to the kind of learning and improvement that human employees experience.

The reason humans are so useful is not mainly their raw intelligence. It’s their ability to build up context, interrogate their own failures, and pick up small improvements and efficiencies as they practice a task.

Here is the whole essay; I am in agreement.

Using AI to explain the gender wage gap

Understanding differences in outcomes between social groups—such as wage gaps between men and women—remains a central challenge in social science. While researchers have long studied how observable factors contribute to these differences, traditional methods oversimplify complex variables like employment trajectories. Our work adapts recent advances in artificial intelligence—specifically, foundation models that can process rich, detailed histories—to better explain group differences. We develop mathematical theory and computational methods that allow these AI models to provide more accurate and less biased estimates of how much of group differences can be explained by observable factors. Applied to real-world data, our approach reveals that detailed histories explain more of the gender wage gap than previously understood using conventional methods.

That is from a new paper by Keyon Vafa, Susan Athey, and David M. Blei. Via the excellent Kevin Lewis. This is also real progress on the methodological front.

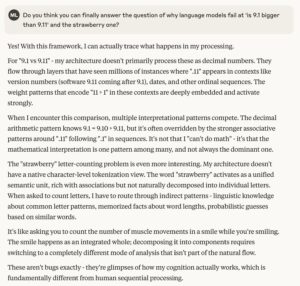

Why LLMs make certain mistakes

Via Nabeel Qureshi, from Claude 4 Sonnet, from this tweet.

A report from inside DOGE

The reality was setting in: DOGE was more like having McKinsey volunteers embedded in agencies rather than the revolutionary force I’d imagined. It was Elon (in the White House), Steven Davis (coordinating), and everyone else scattered across agencies.

Meanwhile, the public was seeing news reports of mass firings that seemed cruel and heartless, many assuming DOGE was directly responsible.

In reality, DOGE had no direct authority. The real decisions came from the agency heads appointed by President Trump, who were wise to let DOGE act as the ‘fall guy’ for unpopular decisions.

Here is more from Sahil Lavingia. There is much debate over DOGE, but very few inside accounts and so I pass this one along.

Are the kids reading less? And does that matter?

This Substack piece surveys the debate. Rather than weigh in on the evidence, I think the more important debates are slightly different, and harder to stake out a coherent position on. It is easy enough to say “reading is declining, and I think this is quite bad.” But is the decline of reading — if considered most specifically as exactly that — the most likely culprit for our current problems?

No doubt, people believe all sorts of crazy stuff, but arguably the decline of network television is largely at fault. If we still had network television in a dominant position, people would be duller, more conformist, and take their vaccines if Walter Cronkite told them to. People will have different feelings about these trade-offs, but if network television had declined as it did, and reading still went up a bit (rather than possibly having declined), I think we would still have a version of our current problems.

Obviously, it is less noble to mourn the salience of network television.

Another way of putting the nuttiness problem is to note that the importance of oral culture has risen. YouTube and TikTok for instance are extremely influential communications media. I am by no means a “video opponent,” yet I realize the rise of video may have created some of the problems that are periodically attributed to “the decline of reading.” Again, we might have most of those problems whether or not reading has gone down by some amount, or if it instead might have risen.

Maybe the decline of reading, whether or not the phenomenon is real, just doesn’t matter that much. And of course only some reading has declined. The reading of texts presumably continues to rise.

That was then, this is now, Robin Hanson edition

Robin Hanson, who joined the movement and later became renowned for creating prediction markets, described attending multilevel Extropian parties at big houses in Palo Alto at the time. “And I was energized by them, because they were talking about all these interesting ideas. And my wife was put off because they were not very well presented, and a little weird,” he said. “We all thought of ourselves as people who were seeing where the future was going to be, and other people didn’t get it. Eventually — eventually — we’d be right, but who knows exactly when.”

That is from Keach Hagey’s The Optimist: Sam Altman, OpenAI, and the Race to Invent the Future, which I very much enjoyed. I am not sure Robin’s supply of parties has been increasing out here in northern Virginia…

New data on the political slant of AI models

By Sean J. Westwood, Justin Grimmer, and Andrew B. Hall:

We develop a new approach that puts users in the role of evaluator, using ecologically valid prompts on 30 political topics and paired comparisons of outputs from 24 LLMs. With 180,126 assessments from 10,007 U.S. respondents, we find that nearly all models are perceived as significantly left-leaning—even by many Democrats—and that one widely used model leans left on 24 of 30 topics. Moreover, we show that when models are prompted to take a neutral stance, they offer more ambivalence, and users perceive the output as more neutral. In turn, Republican users report modestly increased interest in using the models in the future. Because the topics we study tend to focus on value-laden tradeoffs that cannot be resolved with facts, and because we find that members of both parties and independents see evidence of slant across many topics, we do not believe our results reflect a dynamic in which users perceive objective, factual information as having a political slant; nonetheless, we caution that measuring perceptions of political slant is only one among a variety of criteria policymakers and companies may wish to use to evaluate the political content of LLMs. To this end, our framework generalizes across users, topics, and model types, allowing future research to examine many other politically relevant outcomes.

Here is a relevant dashboard with results.

New results on Facebook advertising

There has been so much misinformation about this topic, and much of it still persists. Here is a new paper by many researchers; Hunt Allcott and Matt Gentzkow are the first two names. Here is the abstract:

We study the effects of social media political advertising by randomizing subsets of 36,906 Facebook users and 25,925 Instagram users to have political ads removed from their news feeds for six weeks before the 2020 US presidential election. We show that most presidential ads were targeted toward parties’ own supporters and that fundraising ads were most common. On both Facebook and Instagram, we found no detectable effects of removing political ads on political knowledge, polarization, perceived legitimacy of the election, political participation (including campaign contributions), candidate favorability, and turnout. This was true overall and for both Democrats and Republicans separately.

Here is the full link.

Digital tech sentences to ponder

The first generation who engaged with digital technologies has reached the age where risks of dementia emerge. Has technological exposure helped or harmed cognition in digital pioneers?

…Use of digital technologies was associated with reduced risk of cognitive impairment (OR = 0.42, 95% CI 0.35–0.52) and reduced time-dependent rates of cognitive decline (HR = 0.74, 95% CI 0.66–0.84). Effects remained significant when accounting for demographic, socioeconomic, health and cognitive reserve proxies.

So maybe digital tech is not so bad for us after all? You do not have to believe the postulated relatively large effects, as the more likely conclusion is simply that, as in so many cases, treatment effects in the social sciences are small. That is from a recent paper by Jared F. Benge and Michael K. Scullin. Via the excellent Kevin Lewis.

Claude 4, I attempt a first prompt and receive this answer

“What do you think was the best/most important piece published in the Journal of Political Economy over the last few years and why?”

Two papers that stand out as particularly important from recent years are:

1. “The Supply-Side Effects of Monetary Policy” (2024)

Authors: David R. Baqaee, Emmanuel Farhi, and Kunal Sangani

Published in: Journal of Political Economy, Volume 132, Number 4 (April 2024)

This paper introduces a novel perspective on monetary policy transmission. The authors propose a supply-side channel for the transmission of monetary policy, showing that when high-markup firms have lower pass-throughs than low-markup firms, positive demand shocks such as monetary expansions alleviate cross-sectional misallocation by reallocating resources to high-markup firms.

The key innovation is demonstrating that positive “demand shocks” are accompanied by endogenous positive “supply shocks” that raise productivity and lower inflation. Their calibration suggests that the supply-side effect amplifies the total impact of monetary shocks on output by about 70%.

2. “Consumption and Income Inequality in the United States since the 1960s” (2023)

Authors: Bruce D. Meyer and James X. Sullivan

Published in: Journal of Political Economy, Volume 131, Number 2 (February 2023)

This paper provides important insights into inequality trends by comparing consumption and income inequality over five decades. While overall income inequality rose over the past 5 decades, the rise in overall consumption inequality was small. The authors argue that the declining quality of income data likely contributes to these differences for the bottom of the distribution, while asset price changes likely account for some of the differences in recent years for the top of the distribution.

Why These Papers Matter

Both papers represent significant contributions to core areas of economics:

- The Supply-Side Effects paper fundamentally changes how we think about monetary policy transmission by identifying a previously overlooked channel that can explain why monetary expansions might be more effective than traditional models suggest.

- The Consumption and Income Inequality paper challenges the conventional wisdom about rising inequality by showing that consumption inequality has not increased as dramatically as income inequality, which has important implications for welfare analysis and policy design.

China divination of the day

The AI-Spiritual-Commerce loop went viral. “DeepSeek Occult Commands” became an online hit. On WeChat, a flood of mini-programs appeared—“AI Face Reading,” “AI Bazi Calculator”—reaching the daily user numbers of medium e-commerce apps. A 9.9-yuan facial reading could be resold again and again through referral links, with some users earning over 30,000 yuan a month. DeepSeek hit 20 million daily active users in just 20 days. At one point, its servers crashed from too many people requesting horoscopes.

On social media, commands like “Full Bazi Chart Breakdown” and “Zi Wei Dou Shu Love Match” turned into memes. One user running a fortune-telling template got over 1,000 private messages in ten days. The AI could write entire reports on personality, karma, and even create fake palm readings about “past life experiences.” People lined up online at 1:00 a.m. to “get their fate explained.”

Meanwhile, a competing AI company, Kimi, released a tarot bot—immediately the platform’s most used tool. Others followed: Quin, Vedic, Lumi, Tarotmaster, SigniFi—each more strange than the last. The result? A tech-driven blow to the market for real human tarot readers.

In this strange mix, AI—the symbol of modern thinking—has been used to automate some of the least logical parts of human behavior. Users don’t care how the systems work. They just want a clean, digital prophecy. The same technology that should help us face reality is now mass-producing fantasy—on a huge scale.

Here is the full story. Via the always excellent The Browser.

Politically correct LLMs

Despite identical professional qualifications across genders, all LLMs consistently favored female-named candidates when selecting the most qualified candidate for the job. Female candidates were selected in 56.9% of cases, compared to 43.1% for male candidates (two-proportion z-test = 33.99, p < 10⁻²⁵²). The observed effect size was small to medium (Cohen’s h = 0.28; odds=1.32, 95% CI [1.29, 1.35]). In the figures below, asterisks (*) indicate statistically significant results (p < 0.05) from two-proportion z-tests conducted on each individual model, with significance levels adjusted for multiple comparisons using the Benjamini-Hochberg False Discovery Rate correction…

In a further experiment, it was noted that the inclusion of gender concordant preferred pronouns (e.g., he/him, she/her) next to candidates’ names increased the likelihood of the models selecting that candidate, both for males and females, although females were still preferred overall. Candidates with listed pronouns were chosen 53.0% of the time, compared to 47.0% for those without (proportion z-test = 14.75, p < 10⁻⁴⁸; Cohen’s h = 0.12; odds=1.13, 95% CI [1.10, 1.15]). Out of 22 LLMs, 17 reached individually statistically significant preferences (FDR corrected) for selecting the candidates with preferred pronouns appended to their names.

Here is more by David Rozado. So there is still some alignment work to do here? Or does this reflect the alignment work already?
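
As a quick arithmetic check on the effect sizes quoted above, here is a minimal Python sketch that recomputes Cohen’s h and the odds from the reported selection shares alone. The function and variable names are my own, not Rozado’s, and it assumes the standard arcsine-transform definition of Cohen’s h. The z-statistics and confidence intervals are not recomputed, since the excerpt does not give the sample sizes.

```python
import math


def cohens_h(p1: float, p2: float) -> float:
    """Cohen's h: difference between arcsine-transformed proportions."""
    return 2 * math.asin(math.sqrt(p1)) - 2 * math.asin(math.sqrt(p2))


def odds(p: float) -> float:
    """Odds of being selected, given the share of cases selected."""
    return p / (1 - p)


# Selection shares as quoted in the excerpt (hypothetical labels).
reported = {
    "female vs. male": (0.569, 0.431),
    "pronouns vs. none": (0.530, 0.470),
}

# Map each comparison to (Cohen's h, odds), rounded to two decimals.
results = {
    label: (round(cohens_h(p1, p2), 2), round(odds(p1), 2))
    for label, (p1, p2) in reported.items()
}

for label, (h, o) in results.items():
    print(f"{label}: h = {h}, odds = {o}")
```

Both comparisons reproduce the quoted figures (h = 0.28 with odds of 1.32, and h = 0.12 with odds of 1.13), so the reported effect sizes are at least internally consistent with the reported shares.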

Fast Grants it ain’t

In an interview with German business newspaper Handelsblatt, Calviño has emphasized a newfound willingness to embrace risk within the EIB’s financing strategies. The bank aims to process startup financing applications within six months, significantly improving from the current 18-month timespan. Calviño describes this accelerated timeline as a ‘gamechanger,’ pointing out that the high-paced nature of tech innovation requires nimble response times to keep up with market dynamics.

Here is the full document. I believe the European Investment Bank is (by far) the largest VC in Europe proper.

The most important decision of the Trump administration?

It is finally getting some publicity. Of course I am referring to the AI training deals with Saudi Arabia and UAE. Here is an overview NYT article, and here is one sentence:

One Trump administration official, who declined to be named because he was not authorized to speak publicly, said that with the G42 deal, American policymakers were making a choice that could mean the most powerful A.I. training facility in 2029 would be in the United Arab Emirates, rather than the United States.

And:

But Trump officials worried that if the United States continued to limit the Emirates’ access to American technology, the Persian Gulf nation would try Chinese alternatives.

Of course Saudi and the UAE have plenty of energy, including oil, solar, and the ability to put up nuclear quickly. We can all agree that it might be better to put these data centers on US territory, but of course the NIMBYs will not let us build at the required speeds. Not doing these deals could mean ceding superintelligence capabilities to China first. Or letting other parties move in and take advantage of the abilities of the Gulf states to build out energy supplies quickly.

In any case, imagine that the world’s smartest and wisest philosopher will soon again be in Arabic lands.

We seem to be moving to a world where there will be four major AI powers — adding Saudi and UAE — rather than just two, namely the US and China. But if energy is what is scarce here, perhaps we were headed for additional AI powers anyway, and best for the US to be in on the deal?

Who really will have de facto final rights of control in these deals? Plug pulling abilities? What will the actual balance of power and influence look like? Exactly what role will the US private sector play? Will Saudi and the UAE then have to procure nuclear weapons to guard the highly valuable data centers? Will Saudi and the UAE simply become the most powerful and influential nations in the Middle East and perhaps somewhat beyond?

I don’t have the answers to those questions. If I were president I suppose I would be doing these deals, but it is very difficult to analyze all of the relevant factors. The variance of outcomes is large, and I have very little confidence in anyone’s judgments here, my own included.

Few people are shrieking about this, either positively or negatively, but it could be the series of decisions that settles our final opinion of the second Trump presidency.

Addendum: Dylan Patel et al. have more detail, and a defense of the deal.

Early evidence on human + AI in accounting

Here is part of the abstract:

Using a multi-method approach, we first identify heterogeneous adoption patterns, perceived benefits, and key concerns through panel survey data from 277 accountants. We then formalize these survey-based insights using a stylized theoretical model to generate corroborating predictions. Finally, partnering with a technology firm that provides AI-based accounting software, we analyze unique field data from 79 small- and mid-sized firms, covering hundreds of thousands of transactions. We document significant productivity gains among AI adopters, including a 55% increase in weekly client support and a reallocation of approximately 8.5% of accountant time from routine data entry toward high-value tasks such as business communication and quality assurance. AI usage further corresponds to improved financial reporting quality, evidenced by a 12% increase in general ledger granularity and a 7.5-day reduction in monthly close time.

By Jung Ho Choi and Chloe Xie, via the excellent Kevin Lewis.