Category: Web/Tech

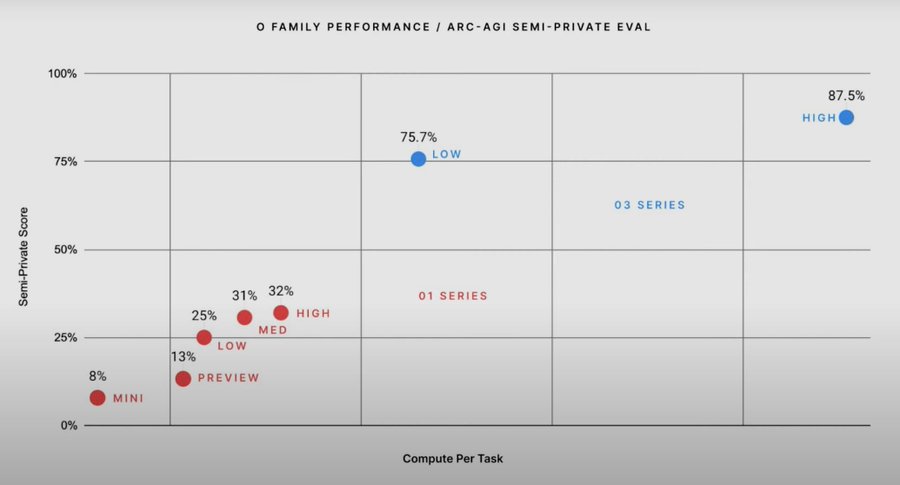

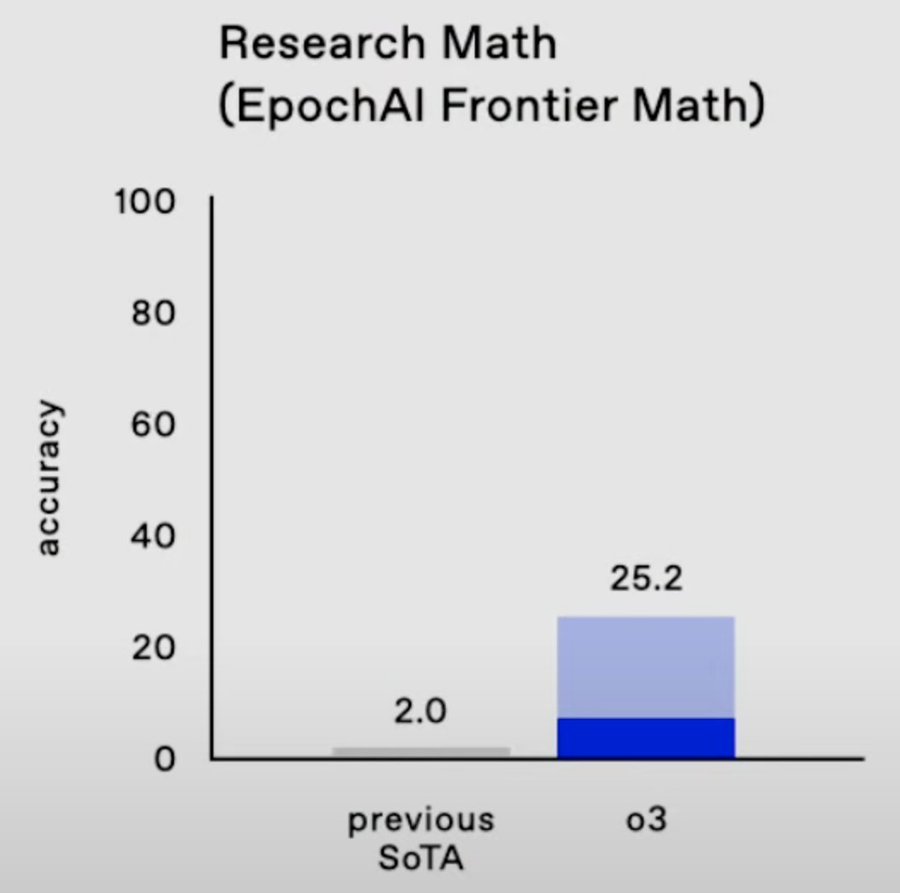

The new o3 model from OpenAI

Some more results. And this:

Yupsie-dupsie, delivery of this:

Happy holidays people, hope you are enjoying the presents!

Scott Alexander on chips (from the comments)

Why you should be talking with gpt about philosophy

I’ve talked with Gpt (as I like to call it) about Putnam and Quine on conceptual schemes. I’ve talked with it about Zeno’s paradoxes. I’ve talked with it about behaviourism, causality, skepticism, supervenience, knowledge, humour, catastrophic moral horror, the container theory of time, and the relation between different conceptions of modes and tropes. I tried to get it to persuade me to become an aesthetic expressivist. I got it to pretend to be P.F. Strawson answering my objections to Freedom and Resentment. I had a long chat with it about the distinction between the good and the right.

…And my conclusion is that it’s now really good at philosophy…Gpt could easily get a PhD on any philosophical topic. More than that, I’ve had many philosophical discussions with professional philosophers that were much less philosophical than my recent chats with Gpt.

Here is the full Rebecca Lowe Substack on the topic. There are also instructions for how to do this well, namely how to talk with Gpt about philosophical issues, including ethics:

In many ways, the best conversation I’ve had with Gpt, so far, involved Gpt arguing against itself and its conception of me, as both Nozick1 (the Robert Nozick who sadly died in 2002) and Nozick2 (the imaginary Robert Nozick who is still alive today, and who according to Gpt has developed into a hardcore democrat), on the topic of catastrophic moral horror.

And as many like to say, this is the worst it ever will be…

Thomas Storrs on elastic data supply (from my email)

Regarding your post yesterday Are LLMs running out of data?, the National Archives has 13.5 billion pieces of paper and only 240 million are digitized. Some of this is classified or otherwise restricted, but surely we can do better than less than 2%.

NARA says they aim to increase this to 500 million by the end of 2026. Even with an overly generous $1/record estimate, it makes sense to me for someone to digitize much of the remaining 13 billion though the incentives are tricky for private actors. Perhaps a consortium of AI companies could make it work. It’s a pure public good so I would be happy with a federal appropriation.

Admittedly, I have a parochial interest in specific parts’ being digitized on mid-20th century federal housing policy. Nonetheless, supply of data for AI is obviously elastic and there’s some delicious low-hanging fruit available.

The National Archives are probably the biggest untapped source of extant data. There are hundreds of billions more pages around the world though.
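The arithmetic behind those figures is easy to check. Here is a minimal sketch using only the numbers quoted in the email; it assumes one "piece of paper" counts as one "record" for the $1 cost estimate, which the email does not state explicitly. The current digitized share comes out a bit under 2%, and the 2026 target would still leave roughly 13 billion pages undone.

```python
# Figures quoted in the email above.
TOTAL_PAGES = 13_500_000_000      # pieces of paper held by the National Archives
DIGITIZED_NOW = 240_000_000       # pages digitized today
TARGET_2026 = 500_000_000         # NARA's stated goal for the end of 2026
COST_PER_RECORD_USD = 1.0         # the "overly generous" estimate; 1 page = 1 record is an assumption


def share(part: int, whole: int) -> float:
    """Fraction of the whole that the part represents."""
    return part / whole


share_now = share(DIGITIZED_NOW, TOTAL_PAGES)
share_2026 = share(TARGET_2026, TOTAL_PAGES)
remaining_after_target = TOTAL_PAGES - TARGET_2026
cost_of_remainder_usd = remaining_after_target * COST_PER_RECORD_USD

if __name__ == "__main__":
    print(f"Digitized today:        {share_now:.2%}")
    print(f"Digitized at 2026 goal: {share_2026:.2%}")
    print(f"Pages left after goal:  {remaining_after_target:,}")
    print(f"Cost at $1 per record:  ${cost_of_remainder_usd / 1e9:.1f} billion")
```

Even at the deliberately high $1 per record, the bill for the remainder is on the order of $13 billion.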

*The Nvidia Way*

I quite liked this new book by Tae Kim, offering a 245 pp. history of the company. Here is a useful review from the WSJ.

I can note that recently, a bit before Thanksgiving, I had the chance to visit Nvidia headquarters in Santa Clara, receive a tour, see some demos, and (a few days earlier) chat with Jensen Huang. I am pleased to report very positive impressions all around. My intuitive sense also jibes with the portrait painted in this book.

As for my impressions of Nvidia, I was struck by the prevalence of large, attractive plant displays in the headquarters, and also how much care they take to ensure quietness on the main corporate floors and spaces (I notice funny things about companies). The geometric shapes and designs, for whatever reason, reminded me of the early 1970s movie Silent Running. If I visit an AI company, in the hallways many people will recognize me. At Nvidia nobody did, except those who invited me in. That too is an interesting contrast.

I am honored to have seen their lovely facilities.

Kevin Bryan and Joshua Gans have a new AI educational project

Just wanted to ping you about a tool Joshua Gans and I launched publicly today after a year of trials at universities all over the world (and just a stupid amount of work!) which I think is up your alley.

Idea is simple: AI should be 1) personalized to the student, 2) personalized to the professor’s content, and 3) structured to improve rather than degrade learning. In a perfect world, we want every student to have individual-level assistance, at any time, in any language, in the format they want (a chatbot TA, a question bank, a sample test grader, etc.). We want all assignments to be adaptive “mastery learning”. We want the professor to have insight on a weekly basis into how students are doing, and even into topics they may have taught in a somewhat confusing way. And we want to do this basically for free.

Right now, we have either raw GPT or Claude accomplishing 1 but not 2 or 3 (and some evidence it degrades learning for some students), or we have classes big enough to build custom AI-driven classes (like Khan Academy for basic algebra). For the thousands of classes where the professor’s teaching is idiosyncratic, the latter set of tools is basically “give the students a random textbook off the library shelf on the topic and have them study it” – not at all what I want my students to do!

We set up a team including proper UX designers and backend devs and built this guy here: https://www.alldayta.com/. It’s drag-and-drop for your course audio/video, slides, handouts, etc., preprocesses everything in a much deeper way than raw OCR or LLMs, then preps a set of tools. Right now, there is a student-facing “virtual TA” and a weekly autosummary of where students are having trouble, with the rest rolling out once we’re convinced the beta version is high enough quality. In my classes, I’ve had up to 10000 interactions in a term with this, and we ran trials at [redacted]. And we can do it at like a buck or two a student across a term, spun up in like 30 minutes of professor time for smaller courses.

There’s a free trial anyone can just sign up for; if your colleagues or the MR crowd would be interested, definitely send it along. I put a Twitter thread up about it as well with some examples of where we are going and why we think this is where higher ed is headed: https://x.com/Afinetheorem/status/1867632900956365307

Midnight regulations on chip access

Let us hope the Biden administration does not do too much damage on its way out the door (WSJ):

The U.S. is preparing rules that would restrict the sale of advanced artificial-intelligence chips in certain parts of the world in an attempt to limit China’s ability to access them, according to people familiar with the matter.

The rules are aimed at China, but they threaten to create conflict between the U.S. and nations that may not want their purchases of chips micromanaged from Washington.

…The purchasing caps primarily apply to regions such as Southeast Asia and the Middle East, the people said. The rules cover cutting-edge processors known as GPUs, or graphics processing units, which are used to train and run large-scale AI models.

Should we not want to bring the UAE more firmly into the American orbit? Is there not a decent chance they will have the energy supply for AI that we are unwilling to build domestically? Might not these regulations, over time, encourage foreign nations to become part of the Chinese AI network? More generally, why should an outgoing administration be making what are potentially reversible foreign policy decisions for the next regime?

Ilya’s talk

Twenty-four minutes, sixteen minutes for the core talk, self-recommending.

A new paper on the economics of AI alignment

A principal wants to deploy an artificial intelligence (AI) system to perform some task. But the AI may be misaligned and pursue a conflicting objective. The principal cannot restrict its options or deliver punishments. Instead, the principal can (i) simulate the task in a testing environment and (ii) impose imperfect recall on the AI, obscuring whether the task being performed is real or part of a test. By committing to a testing mechanism, the principal can screen the misaligned AI during testing and discipline its behaviour in deployment. Increasing the number of tests allows the principal to screen or discipline arbitrarily well. The screening effect is preserved even if the principal cannot commit or if the agent observes information partially revealing the nature of the task. Without commitment, imperfect recall is necessary for testing to be helpful.

That is by Eric Olav Chen, Alexis Ghersengorin, and Sami Petersen. And here is a tweet storm on the paper. I am very glad to see the idea of an optimal principal-agent contract brought more closely into AI alignment discussions. As you can see, it tends to make successful alignment more likely.
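To get a feel for why more tests help, here is a toy calculation. It is not the paper's model, only a sketch under assumptions I am making up for illustration: the AI faces `num_tests` tests plus one real task, cannot tell them apart (the imperfect recall in the abstract), and misbehaves independently with the same probability `q` in every episode. It "wins" only if it behaves in every test and then misbehaves in deployment. The function name and setup are hypothetical.

```python
def best_undetected_defection(num_tests: int) -> tuple[float, float]:
    """Return (q, success) for the misaligned AI's best constant defection rate.

    Toy assumption (not from the paper): the probability of passing every
    test and then defecting on the one real task is
        success(q) = (1 - q) ** num_tests * q
    Setting the derivative to zero gives the maximizer q = 1 / (num_tests + 1).
    """
    q = 1.0 / (num_tests + 1)
    success = (1.0 - q) ** num_tests * q
    return q, success


if __name__ == "__main__":
    for n in (0, 1, 10, 100, 1000):
        q, success = best_undetected_defection(n)
        print(f"tests={n:5d}  best q={q:.4f}  P(pass all tests, then defect)={success:.5f}")
```

In this toy setup the best the misaligned AI can do shrinks roughly like 1/(e·N) as the number of tests N grows, which is one simple way to see how testing can both screen and discipline.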

Austin Vernon on drones (from my email)

The offensive vs. defensive framing seems wrong, at least temporarily. It should be motivated vs. unmotivated, with drones favoring the motivated.

A competent drone capability requires building a supply chain, setting up a small manufacturing/assembly operation, and training skilled operators. They need to manage frequencies and adjust to jamming. Tight integration of these functions is a necessity. That favors highly motivated groups with broad popularity (recruiting skilled talent!) even if they are nominally weak.

Conversely, it can be challenging for overly corrupt or complacent organizations to counter. They are also more likely to fracture and lose cohesion when under attack.

We’ve seen HTS, Burmese rebels, and Azerbaijan all have a lot of success with drones. Ukraine went from hopelessly behind in drone tech to leading Russia in innovation in many niches.

It seems reasonable that the barriers to entry for a motivated drone “startup” will go up. The US military has effective, expensive interceptors like Coyote Block II to counter small attacks in locations like Syria. Fighting larger entities requires pretty absurd scaling to match enemy numbers and the low per-flight success rate – Ukraine claims they might produce millions of drones this year. Hamas had initial success attacking Israel on Oct. 7 but didn’t have the magazine depth to defend themselves.

AI targeting, the necessity of specialized components to defeat electronic warfare, and cheaper drone interceptors are all factors that could upset this balance. Entities that have the scale to deploy an AI stack, true factories, and specialized components should gain the advantage if the rate of change slows.

How to read a book using o1

You don’t have to upload any book into the system. The Great Cosmic Mind is smarter than most of the books you could jam into the context window. Just start asking questions. The core intuition is simply that you should be asking more questions. And now you have someone/something to ask!

I was reading a book on Indian history, and the author referenced the Morley reforms of 1909. I did not know what those were, and so I posed a question and received a very good answer; read it here. I simply asked “What were the Morley reforms done by the British in India in 1909?”

Then I asked “did those apply to all parts of India?”

You can just keep on going. I’ll say it again: “The core intuition is simply that you should be asking more questions.”

Most people still have not yet internalized this emotionally. This is one of the biggest revolutions in reading, ever. And at some point people will write with an eye toward facilitating this very kind of dialogue.

Before AI replaces you, it will improve you, Philippines edition

Bahala says each of his calls at Concentrix is monitored by an artificial intelligence (AI) program that checks his performance. He says his volume of calls has increased under the AI’s watch. At his previous call center job, without an AI program, he answered at most 30 calls per eight-hour shift. Now, he gets through that many before lunchtime. He gets help from an AI “co-pilot,” an assistant that pulls up caller information and makes suggestions in real time.

“The co-pilot is helpful,” he says. “But I have to please the AI. The average handling time for each call is 5 to 7 minutes. I can’t go beyond that.”

Here is more from Michael Beltran, via Fred Smalkin.

Info Finance has a Future!

Info finance is Vitalik Buterin’s term for combining things like prediction markets and news. Indeed, a prediction market like Polymarket is “a betting site for the participants and a news site for everyone else.”

Here’s an incredible instantiation of the idea from Packy McCormick. As I understand it, betting odds are drawn from Polymarket, context is provided by Perplexity and Grok, a script is written by ChatGPT and read by an AI using Packy’s voice and a video is produced by combining with some simple visuals. All automated.

What’s really impressive, however, is that it works. I learned something from the final product. I can see reading this like a newspaper.

Info finance has a future!

Addendum: See also my in-depth a16z crypto podcast (Apple, Spotify) talking with Kominers and Chokshi for more.

How badly do humans misjudge AIs?

We study how humans form expectations about the performance of artificial intelligence (AI) and consequences for AI adoption. Our main hypothesis is that people project human-relevant problem features onto AI. People then over-infer from AI failures on human-easy tasks, and from AI successes on human-difficult tasks. Lab experiments provide strong evidence for projection of human difficulty onto AI, predictably distorting subjects’ expectations. Resulting adoption can be sub-optimal, as failing human-easy tasks need not imply poor overall performance in the case of AI. A field experiment with an AI giving parenting advice shows evidence for projection of human textual similarity. Users strongly infer from answers that are equally uninformative but less humanly-similar to expected answers, significantly reducing trust and engagement. Results suggest AI “anthropomorphism” can backfire by increasing projection and de-aligning human expectations and AI performance.

That is from a new paper by Raphael Raux, job market candidate from Harvard. The piece is co-authored with Bnaya Dreyfuss.

AI-generated poetry is indistinguishable from human-written poetry and is rated more favorably

That is the title of a new paper in Nature, here is part of the abstract:

We conducted two experiments with non-expert poetry readers and found that participants performed below chance levels in identifying AI-generated poems (46.6% accuracy, χ²(1, N = 16,340) = 75.13, p < 0.0001). Notably, participants were more likely to judge AI-generated poems as human-authored than actual human-authored poems (χ²(2, N = 16,340) = 247.04, p < 0.0001). We found that AI-generated poems were rated more favorably in qualities such as rhythm and beauty, and that this contributed to their mistaken identification as human-authored. Our findings suggest that participants employed shared yet flawed heuristics to differentiate AI from human poetry: the simplicity of AI-generated poems may be easier for non-experts to understand, leading them to prefer AI-generated poetry and misinterpret the complexity of human poems as incoherence generated by AI.

By Brian Porter and Edouard Machery. I do not think that pointing out the poor quality of human taste much dents the import of this result.
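The "below chance" claim can be sanity-checked from the abstract alone. This is a rough sketch, assuming the first statistic is a one-degree-of-freedom goodness-of-fit test of correct versus incorrect guesses against a 50/50 split; the paper may compute it differently, and the 46.6% figure is rounded, so the result lands near the reported 75.13 rather than exactly on it.

```python
import math

N_JUDGMENTS = 16_340   # N reported in the abstract
ACCURACY = 0.466       # reported (rounded) share of correct identifications

# Observed and expected counts under the null of pure guessing (50% correct).
observed_correct = ACCURACY * N_JUDGMENTS
observed_wrong = N_JUDGMENTS - observed_correct
expected_each = N_JUDGMENTS / 2

# Pearson chi-square statistic with one degree of freedom.
chi2 = ((observed_correct - expected_each) ** 2 / expected_each
        + (observed_wrong - expected_each) ** 2 / expected_each)

# For 1 df, P(X > x) equals P(|Z| > sqrt(x)) for a standard normal Z,
# which is erfc(sqrt(x / 2)).
p_value = math.erfc(math.sqrt(chi2 / 2))

if __name__ == "__main__":
    print(f"chi-square (1 df): {chi2:.2f}")
    print(f"p-value:           {p_value:.3g}")
```

With over sixteen thousand judgments, even a 3.4 percentage point shortfall from chance is far too large to be sampling noise.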

From this post on chip export bans:

That seems to be the real Scott from the IP address and his knowledge of our conversation. Plus it sounds like Scott (apologies if it is not!).

I would say this: since I chatted with Scott I took a very instructive and positive trip to the United Arab Emirates. I am very impressed by their plans to put serious energy supply behind AI projects. If you think about it, they have a major presence in three significant energy sources: fossil fuels, solar (more to come), and nuclear (much more to come). They also are not so encumbered by NIMBY constraints, whereas some of the American nuclear efforts have in the meantime met with local and regional stumbling blocks. There really is plenty of empty desert there.

So I think America has a great chance to work with the UAE on these issues. I do understand there are geopolitical and other risks to such a collaboration, but I think the risks from no collaboration are greater.

This short tale is a good example of the benefits of travel.

And if you can get to Abu Dhabi, I urge you to go. In addition to what I learned about AI, I very much enjoyed their branch of the Louvre, with its wonderful Greek statue and Kandinsky, among other works, not to mention the building itself. The Abrahamic Family House, on a plaza, has a lovely mix of mosque, church, and synagogue, the latter of course being politically brave and much needed in the Middle East. Here is Rasheed Griffith on Abu Dhabi.