What do I think of the NIMBY contrarianism piece?

You know, the one from yesterday suggesting that tighter building restrictions have not led to higher real estate prices, at least not in the cross-sectional data? My sense is that it could be true, but we should not overestimate its import. In most circumstances, economists should focus on output, not prices per se. Let’s say we can build more homes, and prices for homes in that area do not fall. That can be a good thing! It is a sign that the homes are of high value, and people have the means to pay for them. It can be a sign that the higher residential density has not boosted crime rates, and so on. I find some of the more left-leaning YIMBY arguments are a bit too focused on distribution. I am happy if more YIMBY leads to a more egalitarian distribution of incomes, but I do not necessarily expect that. Often it leads to more agglomeration and higher wages, and high real estate prices too. The higher output and greater freedom of choice still are good outcomes.

Tuesday assorted links

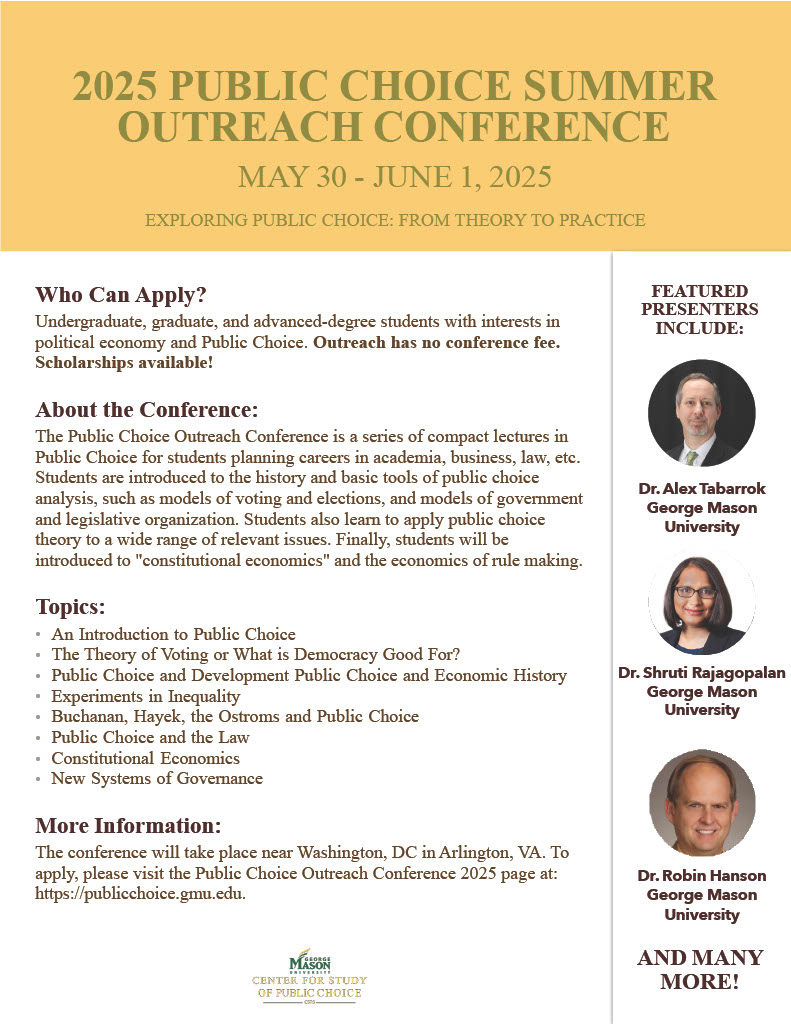

Public Choice Outreach Conference!

The annual Public Choice Outreach Conference is a crash course in public choice. The conference is designed for undergraduates and graduates in a wide variety of fields. It’s entirely free. Indeed scholarships are available! The conference will be held Friday May 30-Sunday June 1, 2025, near Washington, DC in Arlington, VA. Lots of great speakers. More details in the poster. Please encourage your students to apply.

The Anatomy of Marital Happiness

How can I not link to a new Sam Peltzman piece on such a topic? Here goes:

Since 1972, the General Social Survey has periodically asked whether people are happy with Yes, Maybe or No type answers. Here I use a net “happiness” measure, which is percentage Yes less percentage No with Maybe treated as zero. Average happiness is around +20 on this scale for all respondents from 1972 to the last pre-pandemic survey (2018). However, there is a wide gap of around 30 points between married and unmarried respondents. This “marital premium” is this paper’s subject. I describe how this premium varies across and within population groups. These include standard socio demographics (age, sex, race, education, income) and more. I find little variety and thereby surface a notable regularity in US socio demography: there is a substantial marital premium for every group and subgroup I analyze, and this premium is usually close to the overall 30-point average. This holds not just for standard characteristics but also for those directly related to marriage like children and sex (and sex preference). I also find a “cohabitation premium”, but it is much smaller (10 points) than the marital premium. The analysis is mainly visual, and there is inevitably some interesting variety across seventeen figures, such as a 5-point increase in recent years.

Via the excellent, and married, Kevin Lewis.

The Institute of Museum and Library Services is going away

Last year the agency provided $266.7m in grants to libraries, museums and related institutions across the country and its territories. Those grants ranged widely in value and purpose, such as $343,521 to support an internship and fellowship programme at the Museo de Arte de Puerto Rico or $10,350 for the Art Museum of Eastern Idaho to develop new curricula for groups of visiting schoolchildren.

The Wilson Center at the Smithsonian will be gone, and:

The other agencies targeted for elimination in Trump’s executive order are the Federal Mediation and Conciliation Service, the United States Agency for Global Media (which operates the Voice of America media network), the United States Interagency Council on Homelessness, Community Development Financial Institutions Fund and the Minority Business Development Agency.

Here is the full story. I do not regard these as tragedies.

NIMBY contrarianism

The standard view of housing markets holds that the flexibility of local housing supply–shaped by factors like geography and regulation–strongly affects the response of house prices, house quantities and population to rising housing demand. However, from 2000 to 2020, we find that higher income growth predicts the same growth in house prices, housing quantity, and population regardless of a city’s estimated housing supply elasticity. We find the same pattern when we expand the sample to 1980 to 2020, use different elasticity measures, and when we instrument for local housing demand. Using a general demand-and-supply framework, we show that our findings imply that constrained housing supply is relatively unimportant in explaining differences in rising house prices among U.S. cities. These results challenge the prevailing view of local housing and labor markets and suggest that easing housing supply constraints may not yield the anticipated improvements in housing affordability.

That is from a new NBER working paper by Schuyler Louie, John A. Mondragon, and Johannes Wieland.

Monday assorted links

1. Does generative AI support the MAGA aesthetic style? (NYT)

2. Would you rather have married young?

3. Possible improvements in teen mental health?

4. LLMs produce funnier memes than the average human, though not as funny as the top humans.

5. On Becoming a Guinea Fowl is another good Zambian movie, here is the trailer.

What Did We Learn From Torturing Babies?

As late as the 1980s it was widely believed that babies do not feel pain. You might think that this was an absurd thing to believe given that babies cry and exhibit all the features of pain and pain avoidance. Yet, for much of the 19th and 20th centuries, the straightforward sensory evidence was dismissed as “pre-scientific” by the medical and scientific establishment. Babies were thought to be lower-evolved beings whose brains were not yet developed enough to feel pain, at least not in the way that older children and adults feel pain. Crying and pain avoidance were dismissed as simply reflexive. Indeed, babies were thought to be more like animals than reasoning beings and Descartes had told us that an animal’s cries were of no more import than the grinding of gears in a mechanical automaton. There was very little evidence for this theory beyond some gesturing towards myelin sheathing. But anyone who doubted the theory was told that there was “no evidence” that babies feel pain (the conflation of no evidence with evidence of no effect).

Most disturbingly, the theory that babies don’t feel pain wasn’t just an error of science or philosophy—it shaped medical practice. It was routine for babies undergoing medical procedures to be medically paralyzed but not anesthetized. In one now infamous 1985 case an open heart operation was performed on a baby without any anesthesia (n.b. the link is hard reading). Parents were shocked when they discovered that this was standard practice. Publicity from the case and a key review paper in 1987 led the American Academy of Pediatrics to declare it unethical to operate on newborns without anesthesia.

In short, we tortured babies under the theory that they were not conscious of pain. What can we learn from this? One lesson is humility about consciousness. Consciousness and the capacity to suffer can exist in forms once assumed to be insensate. When assessing the consciousness of a newborn, an animal, or an intelligent machine, we should weigh observable and circumstantial evidence and not just abstract theory. If we must err, let us err on the side of compassion.

Claims that X cannot feel or think because Y should be met with skepticism—especially when X is screaming and telling you different. Theory may convince you that animals or AIs are not conscious but do you want to torture more babies? Be humble.

We should be especially humble when the beings in question are very different from ourselves. If we can be wrong about animals, if we can be wrong about other people, if we can be wrong about our own babies then we can be very wrong about AIs. The burden of proof should not fall on the suffering being to prove its pain; rather, the onus is on us to justify why we would ever withhold compassion.

Hat tip: Jim Ward for discussion.

Roko on AI risk

I could not get the emojis to reproduce in legible form; you can see them on the original tweet. Here goes:

The Less Wrong/Singularity/AI Risk movement started in the 2000s by Yudkowsky and others, which I was an early adherent to, is wrong about all of its core claims around AI risk. It’s important to recognize this and appropriately downgrade the credence we give to such claims moving forward.

Claim: Mindspace is vast, so it’s likely that AIs will be completely alien to us, and therefore dangerous!

Truth: Mindspace is vast, but we picked LLMs as the first viable AI paradigm because the abundance of human-generated data made LLMs the easiest choice. LLMs are models of human language, so they are actually not that alien.

Claim: AI won’t understand human values until it is superintelligent, so it will be impossible to align, because you can only align it when it is weak (but it won’t understand) and it will only understand when it is strong (but it will reject your alignment attempts).

Truth: LLMs learned human values before they became superhumanly competent.

Claim: Recursive self-improvement means that a single instance of a threshold-crossing seed AI could reprogram itself and undergo an intelligence explosion in minutes or hours. An AI made overnight in someone’s basement could develop a species-ending superweapon like nanotechnology from first principles and kill us all before we wake up in the morning.

Truth: All ML models have strongly diminishing returns to data and compute, typically logarithmic. Today’s rapid AI progress is only possible because the amount of money spent on AI is increasing exponentially. Superintelligence in a basement is information-theoretically impossible – there is no free lunch from recursion, the exponentially large data collection and compute still needs to happen.

Claim: You can’t align an AI because it will fake alignment during training and then be misaligned in deployment!

Truth: The reason machine learning works at all is because regularization methods/complexity penalties select functions that are the simplest generalizations of the training data, not the most perverse ones. Perverse generalizations do exist, but machine learning works precisely because we can reject them.

Claim: AI will be incorrigible, meaning that it will resist creators’ attempts to correct it if something is wrong with the specification. That means if we get anything wrong, the AI will fight us over it!

Truth: AIs based on neural nets might in some sense want to resist changes to their minds, but they can’t resist changes to their weights that happen via backpropagation. When AIs misbehave, developers use RLHF and gradient descent to change their minds – literally.

Claim: It will get harder and harder to align AIs as they become smarter, so even though things look OK now there will soon be a disaster as AIs outpace their human masters!

Truth: It probably is harder in an absolute sense to align a more powerful AI. But it’s also harder in an absolute sense to build it in the first place – the ratio of alignment difficulty to capabilities difficulty appears to be stable or downtrending, though more data is needed here. In absolute terms, AI companies spend far more resources on capabilities than on alignment because alignment is the relatively easy part of the problem. Eventually, most alignment work will be done by other AIs, just like a king outsources virtually all policing work to his own subjects.

Claim: We can slow down AI development by holding conferences warning people about AI risk in the twenty-teens, which will delay the development of superintelligent AI so that we have more time to think about how to get things right.

Truth: AI risk conferences in the twenty-teens accelerated the development of AI, directly leading to the creation of OpenAI and the LLM revolution. But that’s ok, because nobody was doing anything useful with the extra time that we might have had, so there was no point waiting.

Claim: We have to get decision theory and philosophy exactly right before we develop any AI at all or it will freeze half-formed or incorrect ideas forever, dooming us all.

Truth: ( … pending … )

Claim: It will be impossible to solve LLM jailbreaks! Adversarial ML is unsolvable! Superintelligent AIs will be jailbroken by special AI hackers who know the magic words, and they will be free to destroy the world just with a few clever prompts!

Truth: ( … pending …) ❔

Addendum: Teortaxes comments.

How well do humans understand dogs?

Dogs can’t talk, but their body language speaks volumes. Many dogs will bow when they want to play, for instance, or lick their lips and avert their gaze when nervous or afraid.

But people aren’t always good at interpreting such cues — or even noticing them, a new study suggests.

In the study, the researchers presented people with videos of a dog reacting to positive and negative stimuli, including a leash, a treat, a vacuum cleaner and a scolding. When asked to assess the dog’s emotions, viewers seemed to pay more attention to the situational cues than the dog’s actual behavior, even when the videos had been edited to be deliberately misleading. (In one video, for instance, a dog that appeared to be reacting to the sight of his leash had actually been shown a vacuum cleaner by his owner.)

Here is the full NYT piece by Emily Anthes. Here is the original research. How well do humans understand humans?

Sunday assorted links

Arctic Instincts? The Late Pleistocene Arctic Origins of East Asian Psychology

Highly speculative, but I found this of interest:

This article explores the hypothesis that modern East Asian populations inherited and maintained extensive psychosocial adaptations to arctic environments from ancestral Ancient Northern East Asian populations, which inhabited arctic and subarctic Northeast Eurasia around the Last Glacial Maximum period of the Late Pleistocene, prior to back migrating southwards into East Asia in the Holocene. I present the first cross-psychology comparison between modern East Asian and Inuit populations, using the latter as a model for paleolithic Arctic populations. The comparison reveals that both East Asians and the Inuit exhibit notably high emotional control/suppression, ingroup harmony/cohesion and subdomain unassertiveness, indirectness, self and social consciousness, reserve/introversion, cautiousness, and perseverance/endurance. The same traits have been identified by decades of research in polar psychology (i.e., psychological research on workers, expeditioners, and military personnel living and working in the Arctic and Antarctic) as being adaptive for, or byproducts of, life in polar environments. I interpret this as indirect evidence supporting my hypothesis that the proposed Arcticist traits in modern East Asian and Inuit populations primarily represent adaptations to arctic climates, specifically for the adaptive challenges of highly interdependent survival in an extremely dangerous, unpredictable, and isolated environment, with frequent prolonged close-quarters group confinement, and exacerbated consequences for social devaluation/exclusion/expulsion. The article concludes with a reexamination of previous theories on the roots of East Asian psychology, mainly that of rice farming and Confucianism, in the light of my Arcticism theory.

Here is the full paper by David Sun. Here is David’s related Substack.

Was our universe born inside a black hole?

Without a doubt, since its launch, the James Webb Space Telescope (JWST) has revolutionized our view of the early universe, but its new findings could put astronomers in a spin. In fact, it could tell us something profound about the birth of the universe by possibly hinting that everything we see around us is sealed within a black hole.

The $10 billion telescope, which began observing the cosmos in the summer of 2022, has found that the vast majority of deep space and, thus, the early galaxies it has so far observed, are rotating in the same direction. While around two-thirds of galaxies spin clockwise, the other third rotates counter-clockwise.

In a random universe, scientists would expect to find 50% of galaxies rotating one way, while the other 50% rotate the other way. This new research suggests there is a preferred direction for galactic rotation…

“It is still not clear what causes this to happen, but there are two primary possible explanations,” team leader Lior Shamir, associate professor of computer science at the Carl R. Ice College of Engineering, said in a statement. “One explanation is that the universe was born rotating. That explanation agrees with theories such as black hole cosmology, which postulates that the entire universe is the interior of a black hole.

“But if the universe was indeed born rotating, it means that the existing theories about the cosmos are incomplete.”

…This has another implication; each and every black hole in our universe could be the doorway to another “baby universe.” These universes would be unobservable to us because they are also behind an event horizon, a one-way light-trapping point of no return from which light cannot escape, meaning information can never travel from the interior of a black hole to an external observer.

Here is the full story. Solve for the Darwinian equilibrium! Of course Julian Gough has been pushing related ideas for some while now…

Have humans passed peak brain power?

In one particularly eye-opening statistic, the share of adults who are unable to “use mathematical reasoning when reviewing and evaluating the validity of statements” has climbed to 25 per cent on average in high-income countries, and 35 per cent in the US.

Saturday assorted links

1. System override.

3. Politicians have to learn how to use AI advisors.

4. Estimating local GDP everywhere.

5. What the Trump administration demands from Columbia University. And more from the NYT.

6. Daniel Kahneman’s assisted suicide (WSJ).

7. Police officer steps in when alligator stops pizza delivery in Florida.

8. Outrage in Australia after an American woman grabs a baby wombat (NYT).