Category: Data Source

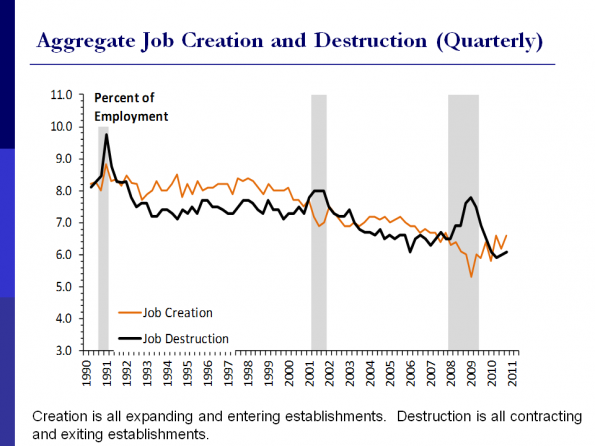

Aggregate job creation and destruction (quarterly), or is creative destruction slowing down?

Is the U.S. labor market becoming less dynamic? Here is much more (hat tip to Mark Thoma, but from John Haltiwanger, paper here, slides here), and an excerpt from the FT:

Yet there’s obviously a meaningful secular story as well, but it’s more complicated and, indeed, remains something of a mystery, as Haltiwanger can only posit a few educated guesses.

Among them are the increasing share of US employment moving to businesses six years or older; the shift to large-scale retail chains; the aging of the US labour force (and therefore less willingness to experiment); declining job creation rates for startups; economic uncertainty; policy uncertainty.
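Gross job creation and destruction series like Haltiwanger's are built up from establishment-level employment changes. The sketch below assumes the common Davis-Haltiwanger-Schuh convention, in which gains and losses are divided by average employment over the two periods. The function name and the establishment data are hypothetical.

```python
def job_flows(prev, curr):
    """Return (creation_rate, destruction_rate, reallocation_rate).

    prev, curr: dicts mapping a hypothetical establishment id to employment in
    the previous and current quarter. Entrants appear only in curr, exits only in prev.
    """
    creation = destruction = 0.0
    for est in set(prev) | set(curr):
        change = curr.get(est, 0) - prev.get(est, 0)
        if change > 0:
            creation += change        # gains at expanding or newly opened establishments
        else:
            destruction += -change    # losses at shrinking or closing establishments
    # Denominator: average of aggregate employment across the two quarters.
    avg_emp = (sum(prev.values()) + sum(curr.values())) / 2
    jc = creation / avg_emp
    jd = destruction / avg_emp
    return jc, jd, jc + jd


prev = {"a": 100, "b": 50, "c": 30}   # "c" closes
curr = {"a": 110, "b": 40, "d": 20}   # "d" opens
jc, jd, realloc = job_flows(prev, curr)
print(f"JC={jc:.3f} JD={jd:.3f} reallocation={realloc:.3f}")
```

Under this convention, net growth is JC minus JD. A slowdown in creative destruction would therefore show up as falling JC and JD (lower reallocation), even in periods when net growth is unchanged.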

We are not as wealthy as we thought we were, installment #1637

In dollar terms, median household income is now $49,909, down $3,609 — or 6.7% — in the two years since the recession ended.

That is from Felix Salmon; read the rest of the post and see the graph. Further comment here.
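The 6.7% figure is measured against the income level of two years earlier, not against today's level. Here is a quick check that uses only the two numbers in the quote:

```python
current = 49_909   # median household income quoted above (dollars)
decline = 3_609    # two-year drop quoted in the same passage
previous = current + decline
pct = decline / previous * 100
print(f"previous = ${previous:,}, decline = {pct:.1f}%")
```

If you divide by the current $49,909 instead, you get about 7.2%. The base matters when comparing reported declines.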

Not a CLASS Act

When President Obama’s health care proposal was being debated, we were repeatedly told that “The president’s plan represents an important step toward long-term fiscal sustainability.” Indeed, a key turning point in the bill’s progress was when the CBO scored it as reducing the deficit by $130 billion over 10 years, making the bill’s proponents positively giddy, as Peter Suderman put it at the time. Of course, many critics claimed that the cost savings were gimmicks, but their objections were overruled.

One of the budget savings that the critics claimed was a gimmick was that a new long-term care insurance program, the Community Living Assistance Services and Supports program, or CLASS for short, was counted as reducing the deficit. How can a spending program reduce the deficit? Well, enrollees had to pay in for at least five years before collecting benefits, so over the first 10 years the program was estimated to reduce the deficit by some $70-80 billion. Indeed, these “savings” from the CLASS Act were a big chunk of the $130 billion in 10-year deficit reduction for the health care bill.
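A toy cash-flow schedule makes the timing trick easy to see. Only the five-year vesting period comes from the post. The dollar amounts are made-up round numbers, not CBO estimates. The sketch also ignores interest, discounting and enrollment growth, and it assumes that benefits eventually exceed premiums, as the CMS actuary feared.

```python
# Hypothetical round numbers in billions of dollars per year; not CBO estimates.
PREMIUMS_PER_YEAR = 12.0
BENEFITS_PER_YEAR_AFTER_VESTING = 18.0
VESTING_YEARS = 5  # enrollees pay in for five years before collecting


def net_flow(year):
    """Premiums collected minus benefits paid in program year `year` (1-indexed)."""
    benefits = BENEFITS_PER_YEAR_AFTER_VESTING if year > VESTING_YEARS else 0.0
    return PREMIUMS_PER_YEAR - benefits


def cumulative_net(years):
    """Cumulative effect on the deficit over the first `years` years (positive = reduction)."""
    return sum(net_flow(y) for y in range(1, years + 1))


print(cumulative_net(10))  # 30.0: the 10-year scoring window looks like savings
print(cumulative_net(30))  # -90.0: a longer horizon tells a different story
```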

The critics of the plan, however, were quite wrong, for it wasn’t a gimmick; it was a gimmick squared, a phantom gimmick, a zombie gimmick:

They’re calling it the zombie in the budget.

It’s a long-term care plan the Obama administration has put on hold, fearing it could go bust if actually implemented. Yet while the program exists on paper, monthly premiums the government may never collect count as reducing federal deficits.

Real or not, that’s $80 billion over the next 10 years….

“It’s a gimmick that produces phantom savings,” said Robert Bixby, executive director of the Concord Coalition, a nonpartisan group that advocates deficit control.

“That money should have never been counted as deficit reduction because it was supposed to be set aside to pay for benefits,” Bixby added. “The fact that they’re not actually doing anything with the program sort of compounds the gimmick.”

Moreover, there were many people inside the administration who thought that the program could not possibly work and who said so at the time. Here is Rick Foster, Chief Actuary of HHS’ Centers for Medicare and Medicaid Services, on an earlier (2009) draft of the proposal:

The program is intended to be “actuarially sound,” but at first glance this goal may be impossible. Due to the limited scope of the insurance coverage, the voluntary CLASS plan would probably not attract many participants other than individuals who already meet the criteria to qualify as beneficiaries. While the 5-year “vesting period” would allow the fund to accumulate a modest level of assets, all such assets could be used just to meet benefit payments due in the first few months of the 6th year. (italics added)
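Foster's warning can be turned into back-of-the-envelope arithmetic. Suppose, hypothetically, that every enrollee already qualifies for benefits once vesting ends and keeps paying the same premium, and that the fund earns no interest. Enrollment then cancels out, and the fund's life depends only on the premium and benefit amounts. The dollar figures below are illustrative, not CLASS parameters.

```python
def months_fund_lasts(monthly_premium, monthly_benefit, vesting_years=5):
    """Months of benefits the vesting-period assets can cover, per enrollee.

    Hypothetical assumptions: everyone claims as soon as vesting ends,
    premiums continue unchanged, and no interest is earned.
    """
    assets = vesting_years * 12 * monthly_premium   # accumulated during vesting
    net_drain = monthly_benefit - monthly_premium   # monthly outflow once claims start
    if net_drain <= 0:
        return float("inf")
    return assets / net_drain


print(months_fund_lasts(100, 1_600))  # 4.0
```

With a benefit many times the premium, five years of accumulation is used up within the first few months of the sixth year. That is the pattern the italicized sentence describes.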

So we have phantom savings from a zombie program, and many people knew at the time that the program was a recipe for disaster.

Now some people may argue that I am biased, that I am just another free market economist who doesn’t want to see a new government program implemented no matter what, but let me be clear: this isn’t CLASS warfare; this is math.

Hat tip: Andrew S.

Google and the Power of Positive Feedback

Just about everything Google does allows Google to do just about everything it does better. Here’s an example:

By 2007, Google knew enough about the structure of queries to be able to release a US-only directory inquiry service called GOOG-411. You dialled 1-800-4664-411 and spoke your question to the robot operator, which parsed it and spoke you back the top eight results, while offering to connect your call. It was free, nifty and widely used, especially because – unprecedentedly for a company that had never spent much on marketing – Google chose to promote it on billboards across California and New York State. People thought it was weird that Google was paying to advertise a product it couldn’t possibly make money from, but by then Google had become known for doing weird and pleasing things.

…What was it getting with GOOG-411? It soon became clear that what it was getting were demands for pizza spoken in every accent in the continental United States, along with questions about plumbers in Detroit and countless variations on the pronunciations of ‘Schenectady’, ‘Okefenokee’ and ‘Boca Raton’. GOOG-411, a Google researcher later wrote, was a phoneme-gathering operation, a way of improving voice recognition technology through massive data collection.

Three years later, the service was dropped, but by then Google had launched its Android operating system and had released into the wild an improved search-by-voice service that didn’t require a phone call. You tapped the little microphone icon on your phone’s screen – it was later extended to Blackberries and iPhones – and your speech was transmitted via the mobile internet to Google servers, where it was interpreted using the advanced techniques the GOOG-411 exercise had enabled. The baby had learned to talk.

…If, however, Google is able to deploy its newly capable voice recognition system to transcribe the spoken words in the two days’ worth of video uploaded to YouTube every minute, there would be an explosion in the amount of searchable material. Since there’s no reason Google can’t do it, it will. A thought experiment: if Google launched satellites into orbit it could record all terrestrial broadcasts and transcribe those too. That may sound exorbitant, but it’s not obviously crazier than some of the ideas that Google’s founders have dreamed up and found a way of implementing: the idea of photographing all the world’s streets, of scanning all the world’s books, of building cars that drive themselves. It’s the sort of thing that crosses Google’s mind.

More of interest.

TGS for anarchists

John Mauldin writes (pdf):

Few would argue that a healthy economy can grow without the private sector leading the way. The real per capita “Private Sector GDP” is another powerful measure that is easy to calculate. It nets out government spending—federal, state, and local. Very like our Structural GDP, Private Sector GDP is bottom-bouncing, 11% below the 2007 peak, 6% below the 2000–2003 plateau, and has reverted to roughly match 1998 levels. Figure 1 illustrates the situation. Absent debt-financed consumption, we have gone nowhere since the late 1990s.

There are some good diagrams at the link. For the pointer I thank Shiraz Allidina.
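Mauldin's measure is simple to compute: take real GDP, subtract real government spending at all levels, and divide by population. Below is a minimal sketch with made-up observations in arbitrary units, not national-accounts data. It also includes a helper for the "percent below peak" comparisons in the quote. The function names are ours.

```python
def private_gdp_per_capita(real_gdp, real_gov_spending, population):
    """Real GDP net of federal, state and local government spending, per person."""
    return (real_gdp - real_gov_spending) / population


def pct_below_peak(series):
    """Percent by which the latest value sits below the series maximum."""
    peak = max(series)
    return (peak - series[-1]) / peak * 100


# Hypothetical yearly observations: (real GDP, real government spending, population).
obs = [(100.0, 20.0, 1.00), (110.0, 21.0, 1.02), (108.0, 26.0, 1.05)]
series = [private_gdp_per_capita(*o) for o in obs]
print([round(x, 1) for x in series])
print(f"{pct_below_peak(series):.1f}% below peak")
```

In this toy series, headline GDP falls only about 2% from its peak. Per capita private-sector GDP falls much further because government spending and population both rose.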

The excuses have run out

John Quiggin writes:

- Average US household size has been increasing since 2005 and is now back to the 1990 level

- Changes following the Boskin Commission report of 1996 have mostly accounted for product quality improvements and substitution effects

- The EITC was last changed in 2001, and the effects were modest. It seems likely to be cut as part of the current move to austerity

- Access to health insurance has generally declined. Obama’s reforms will change this if they survive to 2014, but that’s far from being a certainty

Work backwards to figure out the context.

U.S. fact of the day

From Justin Wolfers, based on the new census data:

Since 2007, real median household income has declined 6.4% and is 7.1% below the median household income peak prior to the 2001 recession.

I am increasingly puzzled by those who think this is fundamentally a story of rising consumer surplus from the internet.

The Importance of Selection Effects

I love this example of the importance of selection effects:

During WWII, statistician Abraham Wald was asked to help the British decide where to add armor to their bombers. After analyzing the records, he recommended adding more armor to the places where there was no damage!

The RAF was initially confused. Can you explain?

You can find the answer below or at the link.

Wald had data only on the planes that returned to Britain, so the bullet holes that Wald saw were all in places where a plane could be hit and still survive. The planes that were shot down were probably hit in different places than those that returned, so Wald recommended adding armor to the places where the surviving planes were lucky enough not to have been hit.
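A small simulation makes Wald's logic concrete. It assumes, hypothetically, that each plane takes one hit in a uniformly random section and that hits to the engine or cockpit are far more likely to bring a plane down. The section names and lethality numbers are invented for illustration.

```python
import random

SECTIONS = ["engine", "cockpit", "fuselage", "wings", "tail"]
# Hypothetical probability that a single hit in each section downs the plane.
LETHALITY = {"engine": 0.6, "cockpit": 0.5, "fuselage": 0.05, "wings": 0.1, "tail": 0.1}


def simulate(n_planes, seed=0):
    """Count hits by section for all planes and for the planes that return."""
    rng = random.Random(seed)
    hits_all = {s: 0 for s in SECTIONS}
    hits_returned = {s: 0 for s in SECTIONS}
    for _ in range(n_planes):
        section = rng.choice(SECTIONS)            # where this plane was hit
        hits_all[section] += 1
        if rng.random() >= LETHALITY[section]:    # plane survives and is inspected
            hits_returned[section] += 1
    return hits_all, hits_returned


all_hits, seen = simulate(100_000)
total_all, total_seen = sum(all_hits.values()), sum(seen.values())
for s in SECTIONS:
    print(f"{s:9s} share of all hits {all_hits[s] / total_all:.2f}   "
          f"share among returning planes {seen[s] / total_seen:.2f}")
```

Across the whole fleet, hits are spread evenly over the sections. Among the planes that come back, engine and cockpit hits are underrepresented, which is the pattern a damage survey of returning bombers would show.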

When did the U.S. labor market slow down?

Scott Winship, at his new Brookings gig, reports:

The bleak outlook for jobseekers has three immediate sources. The sharp deterioration beginning in early 2007 is the most dramatic feature of the above chart (the rise in job scarcity after point C in the chart, the steepness of which depends on the data source used). But two less obvious factors predated the recession. The first is the steepness of the rise in job scarcity during the previous recession in 2001 (from point A to point B), which rivaled that during the deep downturn of the early 1980s. The second is the failure between 2003 and 2007 of jobs per jobseeker to recover from the 2001 recession (the failure of point C to fall back to point A).

Unemployment increased during the 2001 recession, but it subsequently fell almost to its previous low (from point A to B and then back to C). In contrast, job openings plummeted—much more sharply than unemployment rose—and then failed to recover. In previous recoveries, openings eventually outnumbered job seekers (where a rising blue line crosses a falling green line), but during the last recovery a labor shortage never emerged.

…Whatever the causes, the evidence is clear that the health of labor markets were compromised well before the recession.

There is much more at the link, including some very good graphs.
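At its simplest, Winship's "jobs per jobseeker" idea is job openings divided by unemployed jobseekers, and a labor shortage is any period where that ratio exceeds one. The exact series he uses are at the link. The values and period labels below are hypothetical, in millions.

```python
def openings_per_seeker(openings, unemployed):
    """Job openings per unemployed jobseeker, period by period."""
    return [o / u for o, u in zip(openings, unemployed)]


def shortage_periods(labels, openings, unemployed):
    """Labels of periods in which openings outnumber jobseekers (ratio above 1)."""
    ratios = openings_per_seeker(openings, unemployed)
    return [lab for lab, r in zip(labels, ratios) if r > 1]


# Hypothetical values in millions, not the data behind Winship's chart.
labels = ["boom", "recession", "recovery", "slump"]
openings = [5.0, 3.0, 3.5, 2.0]
unemployed = [4.5, 7.0, 6.0, 12.0]

print([round(r, 2) for r in openings_per_seeker(openings, unemployed)])
print(shortage_periods(labels, openings, unemployed))  # ['boom']
```

In this toy series the "recovery" period improves on the recession but never gets back above one. That resembles the failure of the 2003-2007 recovery to produce a labor shortage.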

How good is published academic research?

Bayer halts nearly two-thirds of its target-validation projects because in-house experimental findings fail to match up with published literature claims, finds a first-of-a-kind analysis on data irreproducibility.

An unspoken industry rule alleges that at least 50% of published studies from academic laboratories cannot be repeated in an industrial setting, wrote venture capitalist Bruce Booth in a recent blog post. A first-of-a-kind analysis of Bayer’s internal efforts to validate ‘new drug target’ claims now not only supports this view but suggests that 50% may be an underestimate; the company’s in-house experimental data do not match literature claims in 65% of target-validation projects, leading to project discontinuation.

“People take for granted what they see published,” says John Ioannidis, an expert on data reproducibility at Stanford University School of Medicine in California, USA. “But this and other studies are raising deep questions about whether we can really believe the literature, or whether we have to go back and do everything on our own.”

For the non-peer-reviewed analysis, Khusru Asadullah, Head of Target Discovery at Bayer, and his colleagues looked back at 67 target-validation projects, covering the majority of Bayer’s work in oncology, women’s health and cardiovascular medicine over the past 4 years. Of these, results from internal experiments matched up with the published findings in only 14 projects, but were highly inconsistent in 43 (in a further 10 projects, claims were rated as mostly reproducible, partially reproducible or not applicable; see article online here). “We came up with some shocking examples of discrepancies between published data and our own data,” says Asadullah. These included inabilities to reproduce: over-expression of certain genes in specific tumour types; and decreased cell proliferation via functional inhibition of a target using RNA interference.

The unspoken rule is that at least 50% of the studies published even in top tier academic journals – Science, Nature, Cell, PNAS, etc… – can’t be repeated with the same conclusions by an industrial lab. In particular, key animal models often don’t reproduce. This 50% failure rate isn’t a data free assertion: it’s backed up by dozens of experienced R&D professionals who’ve participated in the (re)testing of academic findings.

For the pointer I thank Michelle Dawson.
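The project counts in the Bayer excerpt add up to 67. Converting them to shares shows where the "nearly two-thirds" headline comes from: 43 of 67 is about 64%, which the excerpt rounds to 65%.

```python
# Project outcomes from the Bayer analysis quoted above.
outcomes = {
    "matched published findings": 14,
    "highly inconsistent": 43,
    "mostly/partially reproducible or not applicable": 10,
}
total = sum(outcomes.values())

for label, n in outcomes.items():
    print(f"{label}: {n}/{total} = {n / total:.0%}")
```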

More evidence on the research impact of blogs

In a new paper, Otto Kassi and Tatu Westling write (pdf):

This study explores the short-run spillover effects of popular research papers. We consider the publicity of ‘Male Organ and Economic Growth: Does Size Matter?’ as an exogenous shock to economics discussion paper demand, a natural experiment of a sort. In particular, we analyze how the very substantial visibility influenced the downloads of Helsinki Center of Economic Research discussion papers. Difference in differences and regression discontinuity analysis are conducted to elicit the spillover patterns. This study finds that the spillover effect to average economics paper demand is positive and statistically significant. It seems that hit papers increase the exposure of previously less downloaded papers. We find that part of the spillover effect could be attributable to Internet search engines’ influence on browsing behavior. Conforming to expected patterns, papers residing on the same web page as the hit paper evidence very significant increases in downloads which also supports the spillover thesis.
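The difference-in-differences idea in the abstract can be shown in its simplest 2x2 form: the change in downloads for papers exposed to the shock, minus the change for a comparison group. The numbers below are hypothetical, not figures from the Kassi and Westling paper. The paper's actual specification, including its regression discontinuity analysis, is richer than this.

```python
def diff_in_diff(treated_pre, treated_post, control_pre, control_post):
    """2x2 difference-in-differences: treated group's change minus control group's change."""
    return (treated_post - treated_pre) - (control_post - control_pre)


# Hypothetical average monthly downloads per paper, before and after the hit paper's publicity.
effect = diff_in_diff(treated_pre=40, treated_post=70, control_pre=35, control_post=45)
print(effect)  # 20
```

The control group's change (+10 here) stands in for what would have happened to the exposed papers without the hit paper. The estimate is only as good as that parallel-trends assumption.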

U.S. fact of the day

The US has roughly the same number of jobs today as it had in 2000, but the population is well over 30,000,000 larger. To get to a civilian employment-to-population ratio equal to that in 2000, we would have to gain some 18 MILLION jobs.

Here is more. People will differ, of course, in terms of how much they see this as growing leisure or stagnation.
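The "jobs needed" arithmetic is the target employment-to-population ratio times today's population, minus today's employment. The sketch below uses hypothetical round numbers in millions, not BLS data. The function name is ours.

```python
def jobs_needed(target_ratio, population, employed):
    """Extra jobs required for employment/population to reach target_ratio."""
    return target_ratio * population - employed


# Hypothetical round numbers in millions, not BLS data.
gap = jobs_needed(target_ratio=0.64, population=240.0, employed=140.0)
print(round(gap, 1))  # 13.6

# With employment flat, each extra person adds target_ratio jobs to the gap.
extra = jobs_needed(0.64, 250.0, 140.0) - gap
print(round(extra, 1))  # 6.4
```

This is why flat employment combined with a growing population produces a widening jobs gap year after year.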

By the way, the current unemployment rate for those with a Bachelor’s degree or higher is about five percent. In which direction does that auxiliary fact push you?

Hat tip goes to Richard Harper on Twitter.

Make Loans, Not War


Intercontinental Ballistic Microfinance from Kiva Microfunds on Vimeo.

Why didn’t the stimulus create more jobs?

There are many studies of the stimulus, but finally there is one which goes behind the numbers to see what really happened. And it’s not an entirely pretty story. My colleagues Garett Jones and Daniel Rothschild conducted extensive field research (interviewing 85 organizations receiving stimulus funds, in five regions), asking simple questions such as whether the hired project workers already had jobs. There are lots of relevant details in the paper, but here is one punchline:

…hiring people from unemployment was more the exception than the rule in our interviews.

In a related paper by the same authors (read them both), here is more:

Hiring isn’t the same as net job creation. In our survey, just 42.1 percent of the workers hired at ARRA-receiving organizations after January 31, 2009, were unemployed at the time they were hired (Appendix C). More were hired directly from other organizations (47.3 percent of post-ARRA workers), while a handful came from school (6.5%) or from outside the labor force (4.1%)(Figure 2).
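The survey shares in the excerpt sum to 100%, which makes the gap between hiring and net job creation easy to quantify. In this small sketch, the 1,000-hire scenario is hypothetical and the function name is ours.

```python
# Prior status of workers hired after January 31, 2009 at ARRA-receiving
# organizations, in percent (figures from the Jones and Rothschild excerpt).
hire_sources = {
    "unemployed": 42.1,
    "other organizations": 47.3,
    "school": 6.5,
    "outside the labor force": 4.1,
}


def direct_from_unemployment(total_hires, shares=hire_sources):
    """Hires that came straight out of unemployment, for a given number of hires."""
    return total_hires * shares["unemployed"] / 100


print(round(sum(hire_sources.values()), 1))    # 100.0, so the categories are exhaustive
print(round(direct_from_unemployment(1_000)))  # 421 of a hypothetical 1,000 hires
```

Of those 1,000 hires, only the 473 poached from other organizations leave vacancies behind. Whether those vacancies are refilled from unemployment is the open question the post raises.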

One major problem with ARRA was not the crowding out of financial capital but rather the crowding out of labor. In the first paper there is also a discussion of how the stimulus job numbers were generated, how unreliable they are, and how stimulus recipients sometimes had an incentive to claim job creation where none was present. Many of the created jobs involved hiring people back from retirement. You can tell a story about how hiring the already employed opened up other jobs for the unemployed, but it’s just that: a story. I don’t think that is what happened in most cases; rather, firms ended up getting by with fewer workers.

There’s also evidence of government funds chasing after the same set of skilled and already busy firms. For at least a third of the surveyed firms receiving stimulus funds, their experience failed to fit important aspects of the Keynesian model.

This paper goes a long way toward explaining why fiscal stimulus usually doesn’t have such a great “bang for the buck.” It raises the question of whether a “twice as big” stimulus really would have been enough. Must it now be four times as big? The paper also sets a new standard for disaggregated data on this macro question; the data are in a zip file here.

More rooftop-ready results on reservation wages

These are from Alan Krueger and Andreas Mueller (pdf):

This paper presents findings from a survey of 6,025 unemployed workers who were interviewed every week for up to 24 weeks in the fall of 2009 and spring of 2010. Our main findings are: (1) the amount of time devoted to job search declines sharply over the spell of unemployment; (2) the self-reported reservation wage predicts whether a job offer is accepted or rejected; (3) the reservation wage is remarkably stable over the course of unemployment for most workers, with the notable exception of workers who are over age 50 and those who had nontrivial savings at the start of the study; (4) many workers who seek full-time work will accept a part-time job that offers a wage below their reservation wage; and (5) the amount of time devoted to job search and the reservation wage help predict early exits from Unemployment Insurance (UI).

Here is a popular summary of some of the results, including the recommended Figure 4.1 (p. 47 in the paper):

… today’s job seekers seem more picky. According to an analysis of surveys of 6,000 job seekers, the minimum wages that the unemployed are willing to accept are very close to their previous salary and drop little over time, says Mr. Mueller. That could help explain in part why they have so much trouble finding work, he says.
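A textbook sequential-search model illustrates the mechanism Mueller describes: offers arrive one at a time, and the worker accepts the first offer that meets the reservation wage. This is a minimal sketch that assumes a hypothetical uniform offer distribution, expressed as a fraction of the previous wage. It treats the reservation wage as fixed, which the paper reports is roughly true for most workers.

```python
def acceptance_prob(reservation, low=0.6, high=1.1):
    """Probability that one offer meets the reservation wage.

    Offers are assumed (hypothetically) to be uniform on [low, high], with all
    wages expressed as a fraction of the worker's previous wage.
    """
    if reservation <= low:
        return 1.0
    if reservation >= high:
        return 0.0
    return (high - reservation) / (high - low)


def expected_offers_until_accept(reservation, **bounds):
    """Mean number of offers up to and including the first acceptable one (geometric)."""
    p = acceptance_prob(reservation, **bounds)
    return float("inf") if p == 0 else 1 / p


# A reservation wage that stays near the old salary versus ones that fall.
for r in (0.95, 0.85, 0.75):
    print(f"reservation {r:.2f}: about {expected_offers_until_accept(r):.2f} offers")
```

In this toy setup, holding out for 95% of the old wage means waiting for about 3.3 offers on average, compared with about 1.4 at 75%. The sketch ignores the declining search effort and the part-time margin that the paper also documents.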

I conclude that some people aren’t very good at looking for jobs and further some people are not very good at accepting job offers.

This paper has many other excellent points and results; see, for instance, pp. 27-29.

For the pointer I thank the excellent Andrew Sweeney.