Category: Law

Jeff Asher on manipulating crime data

Jeff Asher: Within a city, within a district, there are times, either by mistake or by intention, that an agency will manipulate a certain type of crime. There are times where things will get underreported. There will be mistakes. There are times where things will get overreported and there will be mistakes. But because there are 18,000 individual agencies reporting data, usually when the data is wrong, it’s obviously wrong.

It’s like Chicago reporting, you know, six murders in a month when the city averages over 20, and in some years more than that. Way more than that. It’s when you’ve seen these sharp drops in crime all of a sudden when a data reporting system changed. But to manipulate national crime data would be virtually impossible. I think that’s the value of being able to go and get it from each individual city. You can draw your conclusions and audit agencies that look wrong and still come to the correct conclusion. And I’ll note the FBI has seven major categories of crime that they collect. And there are ten different population groups. Every one of those population groups in every category of crime reported a decline in 2024, per the FBI. So it’s not a big blue city thing. It’s a small city thing. It’s a suburb thing. It’s a rural county thing. It’s a big city thing. It’s everywhere…

But of the 30 cities that reported the most murders to the FBI in 2023, murders are down in 26 of them. We’re seeing a 20% drop in murder, a 10% drop in violent crime, a 13% drop in property crime. Whereas in 2024 murder fell a lot and auto theft fell a lot, now it’s pretty much that everything is falling a considerable amount.

Here is his full dialogue with Paul Krugman. And here is Jeff’s YouTube channel on the same topics. Yes, I know public disorderliness is up in some regards. But do not use that as an excuse to mood affiliate with an extremely negative view of the trends!

Free the Patient: A Competitive-Federalism Fix for Telemedicine

During the pandemic, many restrictions on telemedicine were lifted, making it far easier for physicians to treat patients across state lines. That window has largely closed. Today, unless a doctor is separately licensed in a patient’s state—or the states have a formal agreement—remote care is often illegal. So if you live in Virginia and want a second opinion from a Mayo Clinic physician in Florida, you may have to fly to Florida, unless that Florida physician happens to hold a Virginia license.

The standard framing says this is a problem of physician licensing. That leads directly to calls for interstate compacts or federalizing medical licensure. Mutual recognition is good. Driver’s licenses are issued by states but are valid in every state. No one complains that Florida’s regime endangers Virginians. But mutual recognition or federal licensing is not the only solution nor the only way to think about this issue.

The real issue isn’t who licenses doctors. It’s that patients are forbidden from choosing a licensed doctor in another state. We can keep state-level licensing, but free the patient. Let any American consult any physician licensed in any state. That’s competitive federalism—no compacts, no federal agency, just patient choice.

A close parallel comes from credit markets. After Marquette Nat. Bank v. First of Omaha (1978), host states could no longer block their residents from using credit cards issued by national banks chartered elsewhere. A Virginian can legally borrow on a South Dakota credit card at South Dakota’s rates. Nothing changed about South Dakota’s licensing; what changed was the prohibition on choice.

Consider Justice Brennan’s argument in this case:

“Minnesota residents were always free to visit Nebraska and receive loans in that state.” It hadn’t been suggested that Minnesota’s laws would apply in that instance, he added. Therefore, they shouldn’t be applied just because “the convenience of modern mail” allowed Minnesotans to get credit without having to visit Nebraska.

Exactly analogously, everyone agrees that Virginia residents are free to visit Florida and be treated by Florida physicians. No one suggests that Virginia’s laws should follow VA residents to Florida. Therefore, VA’s laws shouldn’t be applied just because the convenience of modern online tools allows Virginians to get medical advice and consultation without having to visit Florida.

In short, patients should be allowed to choose physicians as easily as borrowers choose banks.

*Saving Can-Do*

The author is Philip K. Howard and the subtitle is How to Revive the Spirit of America. The book is short, to the point, in the “abundance + state capacity” genre. Excerpt, noting I will not double indent:

“Three major changes are needed to restore the authority to achieve results: a new legal framework, a new institution that can inspire trust in ongoing decisions, and a special commission to design the details of these changes.

New legal framework defining official authority.

Here’s a sketch of what a new infrastructure decision-making framework might look like:

- Separate agencies should be designated as decision-makers for each category of infrastructure. The head of that agency should have authority to approve permits. For federal approvals, all decisions should be subject to White House oversight. For projects with national or regional significance, federal decisions should preempt state and local approvals.

- Fifty years of accumulated mandates from multiple agencies should be restated as public goals that can be balanced against other public goals….a recodification commission is needed to reframe thousands of pages of detailed regulatory prescriptions into codes that are goal-oriented and honor public tradeoffs. But until this can happen, Congress should authorize the executive branch to approve permits “notwithstanding provisions of law to the contrary” — provided the executive branch identifies the relevant provisions and provides a short statement of why the approvals are in the public interest.

- Processes should be mainly tools for transparency and should be understood by courts as general principles reviewed for abuse of discretion, not as rules requiring strict compliance. NEPA has been effectively rewritten by judicial fiat, so it should be amended to return to its original goals — to provide environmental transparency, public comment, and a political judgment.

- The jurisdiction of courts must be sharply limited. Lawsuits should be allowed for approvals that transgress boundaries of executive responsibility, not inadequate review of process, unless these are so deficient as to be arbitrary.

Changing law is always politically difficult, but the second challenge is perhaps even harder: creating new institutions that can inspire trust.”

TC again: All worth a ponder.

Are Juries Racially Discriminatory?

We implement five different tests of whether grand juries, which are drawn from a representative cross-section of the public, discriminate against Black defendants when deciding to prosecute felony cases. Three tests exploit that while jurors do not directly observe defendant race, jurors do observe the “Blackness” of defendants’ names. All three tests—an audit-study-style test, a traditional outcome-based test, and a test that estimates racial bias using blinded/unblinded comparisons after purging omitted variable bias—indicate juries do not discriminate based on race. Two additional tests indicate racial bias explains at most 0.3 percent of the Black-White felony conviction gap.

That is from a new NBER working paper by

Did the Minnesota housing reform lower housing costs?

Yes:

In December 2018, Minneapolis became the first U.S. city to eliminate single-family zoning through the Minneapolis 2040 Plan, a landmark reform with a central focus on improving housing affordability. This paper estimates the effect of the Minneapolis 2040 Plan on home values and rental prices. Using a synthetic control approach we find that the reform lowered housing cost growth in the five years following implementation: home prices were 16% to 34% lower, while rents were 17.5% to 34% lower relative to a counterfactual Minneapolis constructed from similar metro areas. Placebo tests document these housing cost trajectories were the lowest of 83 donor cities (p=0.012). The results remain consistent and robust to a series of subset analyses and controls. We explore the possible mechanism of these impacts and find that the reform did not trigger a construction boom or an immediate increase in the housing supply. Instead, the observed price reductions appear to stem from a softening of housing demand, likely driven by altered expectations about the housing market.

That is from a new paper by Helena Gu and David Munro. Via the excellent Kevin Lewis.
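The reported p-value is consistent with the usual placebo (permutation) logic for synthetic controls: rerun the method on every untreated unit, rank all the trajectories, and divide the treated unit's rank by the number of units compared. A minimal sketch of that arithmetic, assuming a simple rank-based definition (the paper may instead rank something else, such as post/pre-period fit ratios, and whether Minneapolis itself is counted in the denominator is also an assumption):

```python
def placebo_rank_p_value(rank: int, n_units: int) -> float:
    """Hypothetical helper: rank-based placebo p-value.

    rank = 1 means the treated unit's trajectory is the most extreme of all
    n_units trajectories (treated unit plus placebo runs).
    """
    if not 1 <= rank <= n_units:
        raise ValueError("rank must be between 1 and n_units")
    return rank / n_units


# Minneapolis had the lowest housing-cost trajectory. Counting 83 units or
# 84 (83 donors plus Minneapolis) is an assumption; both round to 0.012.
for n in (83, 84):
    print(n, round(placebo_rank_p_value(1, n), 3))
```

Being the single most extreme unit is the smallest p-value this design can produce (1 divided by the number of units), which is why placebo inference in synthetic control studies is fairly coarse.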

Why the tariffs are bad

I am delighted to see this excellent analysis in the NYT:

Mr. Tedeschi said that future leaders in Washington, whether Republican or Democrat, may be hesitant to roll back the tariffs if that would mean a further addition to the federal debt load, which is already raising alarms on Wall Street. And replacing the tariff revenue with another type of tax increase would require Congress to act, while the tariffs would be a legacy decision made by a previous president.

“Congress may not be excited about taking such a politically risky vote when they didn’t have to vote on tariffs in the first place,” Mr. Tedeschi said.

Some in Washington are already starting to think about how they could spend the tariff revenue. Mr. Trump recently floated the possibility of sending Americans a cash rebate for the tariffs, and Senator Josh Hawley, Republican of Missouri, recently introduced legislation to send $600 to many Americans. “We have so much money coming in, we’re thinking about a little rebate, but the big thing we want to do is pay down debt,” Mr. Trump said last month of the tariffs.

Democrats, once they return to power, may face a similar temptation to use the tariff revenue to fund a new social program, especially if raising taxes in Congress proves as challenging as it has in the past. As it is, Democrats have been divided over tariffs. Maintaining the status quo may be an easier political option than changing trade policy.

“That’s a hefty chunk of change,” Tyson Brody, a Democratic strategist, said of the tariffs. “The way that Democrats are starting to think about it is not that ‘these will be impossible to withdraw.’ It’s: ‘Oh look, there’s now going to be a large pot of money to use and reprogram.’”

That is from Andrew Duehren, bravo.

The Tragedy of India’s Government-Job Prep Towns

In Massive Rent-Seeking in India’s Government Job Examination System I argued that the high value of government jobs has distorted India’s entire labor market and educational system.

India’s most educated young people—precisely those it needs in the workforce—are devoting years of their life cramming for government exams instead of working productively. These exams cultivate no real-world skills; they are pure sorting mechanisms, not tools of human capital development. But beyond the staggering economic waste, there is a deeper, more corrosive human cost. As Rajagopalan and I have argued, India suffers from premature imitation: In this case, India is producing Western-educated youth without the economic structure to employ them. In one survey, 88% of grade 12 students preferred a government job to a private sector job. But these jobs do not and cannot exist. The result is disillusioned cohorts trained to expect a middle-class, white-collar lifestyle, convinced that only a government job can deliver it. India is thus creating large numbers of educated young people who are inevitably disillusioned–that is not a sustainable equilibrium.

The Economist has an excellent piece on the lives of the students, including Kumar, who is studying in “Musallahpur Haat, a suburb of Patna where dozens of coaching centers were concentrated, and the rent was cheap.”

…About half a million students are currently preparing for government exams in Musallahpur….For most government departments the initial tests are similar, and have little direct bearing on the job in question. Would-be ticket inspectors and train-drivers must answer multiple-choice questions on current affairs, logic, maths and science. They might be asked who invented JavaScript, or which element is most abundant in the Earth’s crust, or the smallest whole number for a if a456 is divisible by 11. Students have no idea when their preparations might be put to use; exams are not held on a fixed schedule.

…Kumar made his way to the bare, windowless room his friend had arranged for him to rent and started working. Every few days, he’d check the Ministry of Railways website to see if a date had been set for the exams. The days turned into weeks, then months. When the covid pandemic erupted he adjusted his expectations – obviously there would be delays. The syllabus felt infinite and he kept studying, shuttling between libraries, revision tutorials and mock test sessions. Before he knew it he’d been in Musallahpur nearly six years.

As his 30s approached, Kumar began to worry about running out of time. There is an upper age limit for the railway exams – for the ones Kumar was doing it was set at 30. As a lower-caste applicant he was allowed to extend this deadline by three years. His parents urged him to start thinking about alternative careers, but he convinced them to be patient. His father, who was struggling to keep up the allowance, reluctantly sold some of the family’s land to help support him, and Kumar studied harder and longer.

In my post, I emphasized the above-average wages and privileges, which is true enough, but even by Indian standards many of the jobs aren’t great and The Economist puts more focus on respectability and prestige (the sad premature imitation I discussed):

Indian society accords public-sector jobs a special respect. Grooms who have them are able to ask for higher dowries from their brides’ families. “If you are at a wedding and say you have a government job, people will look at you differently,” said Abhishek Singh, an exam tutor in Musallahpur.

Railway jobs in particular still have a vestigial glow of prestige.

…[Kumar] had been preparing for junior engineer and assistant train-driver jobs, but decided to apply for the lowest rung of positions too, the Group D roles, to increase his chance of getting something. An undergraduate degree and six years studying in Patna could lead to him becoming a track-maintenance worker. “I never imagined it would come to this,” he said sadly.

And yet he wouldn’t trade it. A short drive from his room in Musallahpur, a glitzy mall has just been built. There are jobs going there which pay close to what he might earn in a Group D role. But Kumar baulked at the suggestion he might become a barista. “I am educated with a technical degree,” he said. “My family hasn’t sacrificed so much for me to work in a coffee shop. People only work there if they have no other choice.” No one from his parents’ generation would respect a barista. But they admired, or at least understood, a job on the railways.

India’s government job system squanders talent, feeds on obsolete and socially-inefficient prestige hierarchies, and rewards years of sterile preparation with diminishing returns. It’s inefficient, of course, but behind the scenes it’s devastating to the young.

Hat tip: Samir Varma.

Say it ain’t so, Cecil…

New British cars may have to be fitted with breathalyser technology and black box-style recorders under Labour plans to align with EU vehicle safety laws.

The Government said copying European rules would drive down costs…

Here is the full Telegraph article. It seems more complicated than that: the car has to allow for the possible installation of such a device, without the device itself, or its use, being required per se. So the black box is more concerning to me. It would mean that complete monitoring of your whereabouts and driving behavior could become possible. There are Event Data Recorders in most newer US cars, but to date they are not used for very much. Perhaps the American ethos prevents a slippery slope on this one?

These are not just extreme paranoid fears. When I was driving a Spanish rental car this summer, the car issued an annoying, recurring beep every time it was driven over the speed limit, even by small amounts. For one thing, the speed limits the device syncs from the road do not always match the posted limits. For another, the speed limit would often suddenly fall by 20 km/h, but of course you should decelerate gradually rather than slam on the brakes. And it can be dangerous to always drive at or below the speed limit, especially when overtaking, and I do mean sane rather than crazy overtaking.

So on these issues matters could indeed get much worse.

Red Flags for Waymo in Boston

The British Locomotives Act of 1865 contained a red flag provision for cars:

…while any Locomotive is in Motion, [one of the three required attendants] shall precede such Locomotive on Foot by not less than Sixty Yards, and shall carry a Red Flag constantly displayed, and shall warn the Riders and Drivers of Horses of the Approach of such Locomotives, and shall signal the Driver thereof when it shall be necessary to stop, and shall assist Horses, and Carriages drawn by Horses, passing the same.

Not to be outdone, the Boston City Council is debating a law on self-driving cars that includes:

Section 6. Human Safety Operator

Any permit process must include the following requirements: (a) an Autonomous Vehicle operating in the City of Boston shall not transport passengers or goods unless a human safety operator is physically present in the vehicle and has the ability to monitor the performance of the vehicle and intervene if necessary, including but not limited to taking over immediate manual control of the vehicle or shutting off the vehicle; and (b) that Autonomous Vehicles and human safety operators must meet all applicable local, state and federal requirements.

The EU-USA trade deal

Sorry people, but you can fill in the links with Perplexity and Grok, both great for this purpose.

Olivier Blanchard is upset that Europe got such a raw deal, various people in the FT agree. I would say that is itself data about broader European economic and security policies, and needs to be taken very seriously. The Europeans are not stupid negotiators by any means, rather they are in a weak negotiating position for reasons that are largely their own fault and reflect underlying weaknesses of their basic economic and political model.

You can hate what Trump did, but for a “stupid” administration they, by their own standards at least, did a remarkably good job of it.

Justin Wolfers seems upset that Trump is raising taxes on Americans. (I am too!) But that feels kind of weird to me. And it is nice to see that Europeans get somewhat lower taxes, though many European leaders are upset about that. They should in fact buy more from the United States, and their non-tariff barriers are significant.

Conor Sen notes that the USA has come up with a multi-trillion-dollar revenue source that does not seem to diminish corporate profitability, https://x.com/conorsen/status/1949785522567549283?s=61, and he is wondering how exactly people will react to that.

We can all agree that negative externalities are what should be taxed!

But those policies typically are unpopular, so in some instances you will understand public affairs more clearly by switching to the “what will be done?” perspective, rather than the “what should be done?” stance.

My best guess is that these tariffs will stick for the most part, and that you are seeing some early major steps for how the U.S. will resolve its fiscal position. Higher inflation will come too, and fiscally we will muddle through, albeit with notably lower real wages.

(To be clear, for a long time I have stated that I prefer to cut back on government-subsidized health care, rather than to lower real wages through these other means. You can always use the extra money and try to buy back some health! But I also never have thought I was going to get my way. When Matt Yglesias tells you that “health care polls well,” you should take that seriously, and Matt also should realize that this puts him more in the pro-Trump, pro-tariff camp than he might like to think.)

I think a Democratic administration, whenever we get one next, would rather spend the revenue from the tariffs than repeal them. By then the tariffs also will be what I call “emotionally internalized.” And the Democrats have not loved free trade for a long time anyway, despite their current rhetorical moves toward criticizing the Trumpers.

So most of all we need to revise our estimates of what the political equilibrium looks like here. We are receiving major pieces of information, and we must update our vision of the world to come.

The Rising Cost of Child and Pet Day Care

Everyone talks about the soaring cost of child care (e.g. here, here and here), but have you looked at the soaring cost of pet care? On a recent trip, it cost me about $82 per day to board my dog (a bit less with multi-day discounts). And no, that is not high for northern VA and that price does not include any fancy options or treats! Doggie boarding costs about the same as staying in a Motel 6.

Many explanations have been offered for rising child care costs. The Institute for Family Studies, for example, shows that prices rise with regulations like “group sizes, child-to-staff ratios, required annual training hours, and minimum educational requirements for teachers and center directors.” I don’t deny that regulation raises prices—places with more regulation have higher costs—but I don’t think that explains the slow, steady price increase over time. As with health care and education, the better explanation is the Baumol effect, as I argued in my book (with Helland) Why Are the Prices So Damn High?

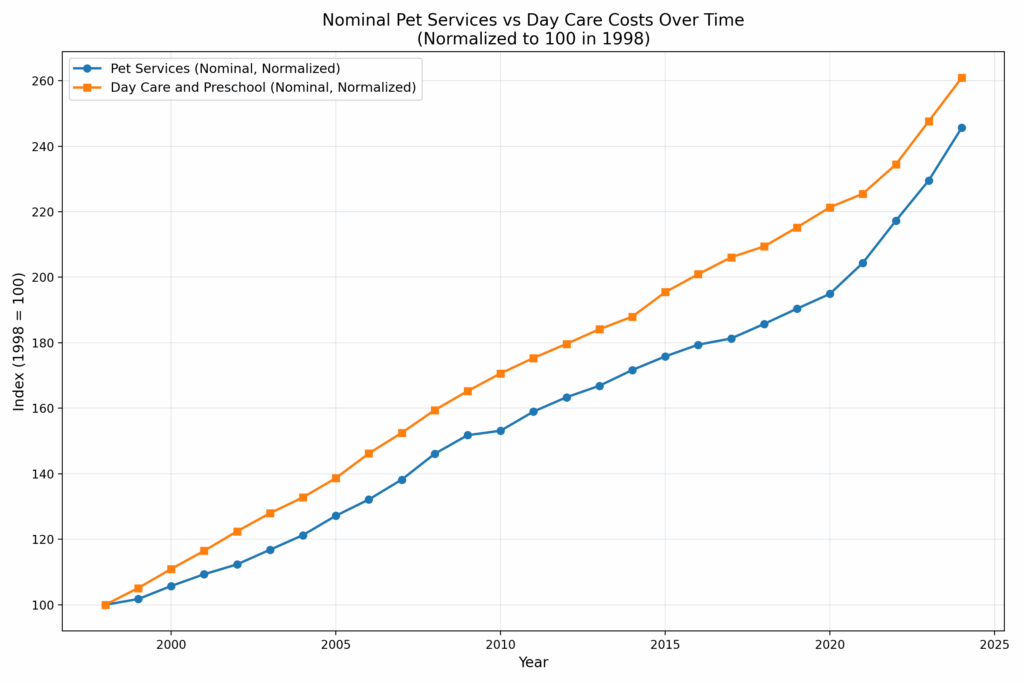

Pet care is less regulated than child care, but it too is subject to the Baumol effect. So how do price trends compare? Are they radically different or surprisingly similar? Here are the two raw price trends for pet services (CUUR0000SS62053) and for (child) Day care and preschool (CUUR0000SEEB03). Pet services covers boarding, daycare, pet sitting, walking, obedience training, and grooming, but excludes veterinary care, which makes it comparable to the child care series.

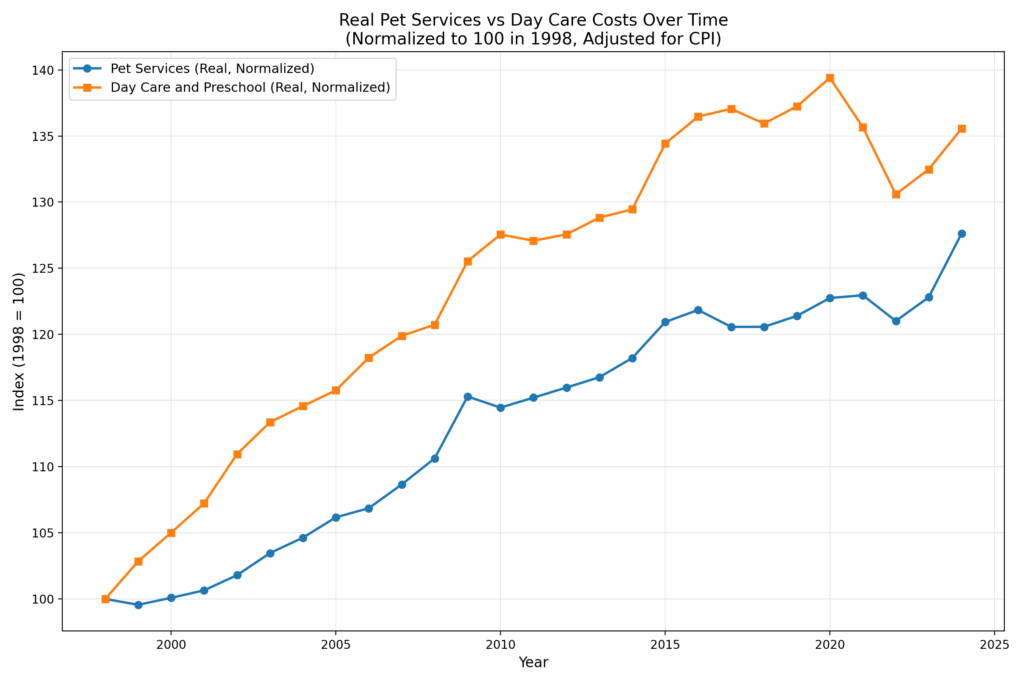

As you can see, the trends are nearly identical, with child care rising only slightly faster than pet care over the past 26 years. Of course, both trends include general inflation, which visually narrows the gap. When we normalize to the overall CPI, we get the following:

Over 26 years, the real (relative) price of Day Care and Preschool has increased 36%, while Pet Services have risen 28%. If regulation doesn’t explain the rise in pet care costs–and it probably doesn’t–then regulation probably doesn’t explain the rise in child care costs either. After all, child and pet care are very similar goods!
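Normalizing to the overall CPI means dividing each series' cumulative growth by the CPI's cumulative growth over the same window; what remains is the change in the relative price. A minimal sketch of that arithmetic, using made-up index levels rather than the actual BLS series behind the charts (the function name is hypothetical):

```python
def real_relative_change(nominal_start: float, nominal_end: float,
                         cpi_start: float, cpi_end: float) -> float:
    """Hypothetical helper: percent change in a price index after
    deflating by the overall CPI over the same period."""
    nominal_growth = nominal_end / nominal_start
    cpi_growth = cpi_end / cpi_start
    return nominal_growth / cpi_growth - 1.0


# Illustrative numbers only, NOT the BLS values: a series that doubles
# while the overall CPI rises 60% gains 25% in real (relative) terms.
change = real_relative_change(100.0, 200.0, 100.0, 160.0)
print(f"{change:.0%}")  # 25%
```

Applied to the two BLS series, this same calculation is what turns the nearly identical nominal lines into the 36% versus 28% real increases.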

The similar rise in the price of child day care and pet day care/boarding is consistent with Is American Pet Health Care (Also) Uniquely Inefficient? by Einav, Finkelstein and Gupta, who find that spending on veterinary care is rising at about the same rate as spending on human health care. Since the regulatory systems of pet and human health care are very different, this suggests that the fundamental reason for rising health care spending isn’t regulation but rising relative prices and increasing incomes (fyi this is also an important reason why Americans spend more on health care than Europeans).

Thus, my explanation for rising prices in child care and pet care is that productivity is increasing in other industries more than in the care industries, which means that over time we must give up more of other goods to get child and pet care. In short, if productivity in other sectors rises while child/pet care productivity stays flat, relative prices must rise. Another way to put this is that to retain workers, wages in stagnant-productivity sectors must rise to match those in (equally labor-skilled) high-productivity sectors. That means paying more for the same level of care, simply to keep the labor force from leaving.
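The productivity argument can be made concrete with a toy two-sector model. A minimal sketch, assuming a single common wage that tracks goods-sector productivity and prices equal to unit labor cost (wage divided by output per worker); the function name and the 2 percent growth rate are illustrative assumptions, not estimates from the book or the BLS data:

```python
def baumol_relative_price(g_goods: float, g_care: float, years: int) -> float:
    """Hypothetical toy model: price of care relative to goods after `years`.

    Assumptions (illustrative only): both productivity levels start at 1,
    one common wage is paid in both sectors and tracks goods productivity,
    and each sector's price equals its unit labor cost.
    """
    a_goods = (1 + g_goods) ** years   # output per worker, goods sector
    a_care = (1 + g_care) ** years     # output per worker, care sector
    wage = a_goods                     # care must match the productive sector's wage
    price_goods = wage / a_goods       # stays at 1
    price_care = wage / a_care         # rises with the productivity gap
    return price_care / price_goods


# 2% annual productivity growth outside care, none in care, for 26 years.
print(round(baumol_relative_price(0.02, 0.0, 26), 3))
```

Because the wage cancels out, the care-to-goods price ratio is just the ratio of the two productivity levels, so it rises whenever productivity grows faster outside care, even though nothing about care itself has changed.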

But rising productivity in other sectors is good! Thus, I always refer to the Baumol effect rather than the “cost disease” because higher prices are not bad when they reflect changes in relative prices. As with education and health care the rising price of child and pet care isn’t a problem for society as a whole. We are richer and can afford more of all goods. It can be a problem, however, for people who consume more than the average quantities of the service-sector goods and people who have lower than average wage gains. So what can we do? Redistribution is one possibility.

If we focus on the prices, the core problem is that care work is labor-intensive and labor has a high opportunity cost. One solution is to lower the opportunity cost of that labor. Low-skill immigration helps: when lower-wage workers take on support roles, higher-wage workers can focus on higher-value tasks. As I’ve put it, “The immigrant who mows the lawn of the nuclear physicist indirectly helps to unlock the secrets of the universe.” Same for the immigrant who provides boarding for the pets of the nuclear physicist.

Another solution is capital substitution—automation, AI, better tools. But care jobs resist mechanization; that’s part of why productivity growth is so slow in these sectors. Still, the basic truth remains: if we want more affordable day care—for kids or pets—we need to use less of what’s expensive: skilled labor. That means either importing more people to do the work, or investing harder in ways to do it with fewer hands.

Claims about DOGE and AI

The tool has already been used to complete “decisions on 1,083 regulatory sections” at the Department of Housing and Urban Development in under two weeks, according to the PowerPoint, and to write “100% of deregulations” at the Consumer Financial Protection Bureau (CFPB). Three HUD employees — as well as documents obtained by The Post — confirmed that an AI tool was recently used to review hundreds, if not more than 1,000, lines of regulations at that agency and suggest edits or deletions.

Here is the full story, I will keep you all posted…

Should Catalonia receive more financial independence?

Jesús details how Spain already operates one of the most decentralized fiscal systems in the world, “more latitude than most U.S. states,” he notes, yet Catalonia now seeks the bespoke privileges long enjoyed by the Basque Country and Navarra. The Regional Authority Index rates how much self‑rule and shared rule each country’s sub‑national governments actually wield. In its last update the index places Spain as the most decentralized unitary state in the sample and fourth overall among 96 countries.

Those northern provinces collect every euro on their own soil and forward a modest remittance to the central treasury, a setup that Fernández‑Villaverde brands “a Confederate relic.” Extending it to Catalonia, he argues, would hollow out Spain’s common‑pool finances, deepen inter‑regional resentment and erode the principle of equal citizenship, while turning the national revenue service into little more than a mailbox for provincial checks.

That is from the episode summary of a podcast of Rasheed Griffith with Jesús Fernández-Villaverde. On the Catalan language, matters look grim in any case:

Right now around only 55% of births in Catalonia are born from a mother that was born, actually not even Catalan, that was born in Spain. That basically tells you that only 40, 45%, perhaps even a little bit less of mothers that were born in Spain speak Catalan at home. At this moment, I will say that less than 30, 28% of kids born in Cataluña, perhaps even less, will speak Catalan at home.

It amazes me how many people ignore the reality that, not long ago, a host of leading economists led or endorsed a constitution-violating movement to separate Catalonia from the rest of Spain. The podcast will tell you more. It is also interesting throughout, including on Spanish history since the 19th century.

Horseshoe Theory: Trump and the Progressive Left

Many of Trump’s signature policies overlap with those of the American progressive left—e.g. tariffs, economic nationalism, immigration restrictions, deep distrust of elite institutions, and an eagerness to use the power of the state. Trump governs less like Reagan, more like Perón. As Ryan Bourne notes, this ideological convergence has led many on the progressive left to remain silent or even tacitly support Trump policies, particularly on trade.

“[P]rogressive Democrats like Senator Elizabeth Warren have chosen to shift blame for Trump’s tariff-driven price hikes onto large businesses. Last week, they dusted off—and expanded—their pandemic-era Price Gouging Prevention Act. While bemoaning Trump’s ‘chaotic’ on-off tariffs, their real ire remains reserved for ‘greedy corporations,’ supposedly exploiting trade policy disruption to pad prices beyond what’s needed to ‘cover any cost increases.’

…The Democrats’ 2025 gouging bill is broader than ever, creating a standing prohibition against ‘grossly excessive’ price hikes—loosely suggested at anything 20 percent above the previous six-month average—but allowing the FTC to pick its price caps ‘using any metric it deems appropriate.’

…Instead of owning the pricing fallout from his trade wars, President Trump can now point to Democratic cries of ‘corporate greed’ and claim their proposed FTC crackdown proves that it’s businesses—not his tariffs—to blame for higher prices.

If these progressives have their way, the public debate flips from ‘tariffs raise prices’ to ‘the FTC must crack down on corporate greed exploiting trade policy reform,’ with Trump slipping off the hook.”
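To see what the bill's loose 20 percent benchmark would mean in practice, here is a minimal sketch, assuming "the previous six-month average" is a simple mean of six monthly prices and that a price must be strictly more than 20 percent above it to be flagged. Both readings, and the function name, are hypothetical; as quoted above, the bill lets the FTC use "any metric it deems appropriate."

```python
def exceeds_gouging_benchmark(current_price: float,
                              prior_six_months: list[float],
                              threshold: float = 0.20) -> bool:
    """Hypothetical check: is current_price more than `threshold` above the
    simple mean of the prior six monthly prices? One simplified reading of
    the bill's benchmark, not its actual legal test."""
    if len(prior_six_months) != 6:
        raise ValueError("expected six monthly prices")
    baseline = sum(prior_six_months) / 6
    return current_price > baseline * (1 + threshold)


prices = [10.0] * 6                              # flat $10 for six months
print(exceeds_gouging_benchmark(11.9, prices))   # False
print(exceeds_gouging_benchmark(12.5, prices))   # True
```

Note that under this reading a tariff that raised a firm's costs by more than 20 percent could push a fully cost-justified price over the line, which is exactly why the benchmark shifts attention from tariffs to "corporate greed."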

Trump’s political coalition isn’t policy-driven. It’s built on anger, grievance, and zero-sum thinking. With minor tweaks, there is no reason why such a coalition could not become even more leftist. Consider the grotesque canonization of Luigi Mangione, the (alleged) murderer of UnitedHealthcare CEO Brian Thompson. We already have a proposed CA ballot initiative named the Luigi Mangione Access to Health Care Act, a Luigi Mangione musical and comparisons of Mangione to Jesus. The anger is very Trumpian.

A substantial share of voters on the left and the right increasingly believe that markets are rigged, globalism is suspect, and corporations are the real enemy. Trump adds nationalist flavor; progressives bring the regulatory hammer. The convergence of left and right in attacking classical liberalism–open markets, limited government, pluralism and the basic rules of democratic compromise–is what worries me the most about contemporary politics.

My excellent Conversation with Helen Castor

Here is the audio, video, and transcript. Here is part of the episode summary:

Tyler and Helen explore what English government could and couldn’t do in the 14th century, why landed nobles obeyed the king, why parliament chose to fund wars with France, whether England could have won the Hundred Years’ War, the constitutional precedents set by Henry IV’s deposition of Richard II, how Shakespeare’s Richard II scandalized Elizabethan audiences, Richard’s superb artistic taste versus Henry’s lack, why Chaucer suddenly becomes possible in this period, whether Richard II’s fatal trip to Ireland was like Captain Kirk beaming down to a hostile planet, how historians continue to discover new evidence about the period, how Shakespeare’s Henriad influences our historical understanding, Castor’s most successful work habits, what she finds fascinating about Asimov’s I, Robot, the subject of her next book, and more.

Here is an excerpt from the opening sequence:

COWEN: Richard II and Henry IV — they’re born in the same year, namely 1367. Just to frame it for our listeners, could you give us a sense — back then, what was it that the English government could do and what could it not do? What is the government like then?

CASTOR: I think people might be surprised at quite how much government could do in England at this point in history because England, at this point, was the most centralized state in Europe, and there are two reasons for that. One is the Conquest of 1066 where the Normans have come in and taken the whole place over. Then, the other key formative period is the late 12th century when Henry II is ruling an empire that stretches from the Scottish border all the way down to southwestern France.

He has to have a system of government and of law that can function when he’s not there. By the late 14th century, when Richard and Henry — my two kings in this book — appear on the scene, the king has two key functions which appear on the two sides of his seal. On one side, he sits in state wearing a crown, carrying an orb and scepter as a lawgiver and a judge. That is a key function of what he does for his people. He imposes law. He gives justice. He maintains order.

On the other side of the seal, he’s wearing armor on a warhorse with a sword unsheathed in his hand. That’s his function as a defender of the realm in an intensely practical way. He has to be a soldier, a warrior to repel attacks or, indeed, to launch attacks if that’s the best form of defense. To do that, he needs money.

For that, the institution of Parliament has developed, which offers consent to taxation that he can demonstrate is in the national interest. It has also come to be a law-making forum. Whenever he needs to make new laws, he can make statute law in Parliament that therefore, in its very nature, has the consent of the representatives of the realm.

COWEN: What is it, back then, that government cannot do?

CASTOR: What a government doesn’t have in the medieval period is, it doesn’t have a monopoly of force. In other words, it doesn’t have a police force. It doesn’t have a professional police force, and it doesn’t have a standing army, or at least by the late Middle Ages, England does have a permanent garrison in Calais, which is its outpost on the northern coast of France, but that’s not a garrison that can be recalled to England with any ease.

So, enforcement is the government’s key problem. To enforce the king’s edicts, it therefore relies on a hierarchy of private power: on the landed, the great landowners of the kingdom, who are wealthy because of their possession of land, but crucially, also have control over people, the men who live and work on their land. If you need to get an enforcement posse — this is medieval English language that we use when we talk of sheriffs and posses — the county posse, the power of the county.

If you need to get men out quickly, you need to tap into those local power structures. You don’t have modern communications. You don’t have modern transport. The whole hierarchy of the king’s theoretical authority has to tap into and work through the private hierarchy of landed power.

COWEN: Why do those landed nobles obey the king? They’re afraid of the future raising of an army? Or they’re handed out some other benefit? What keeps the incentives all working together to the extent they stay working together?

CASTOR: They have a very important pragmatic interest in obeying the king because the king is the keystone of the hierarchy within which they are powerful and wealthy. Of course, they want more power and more wealth for themselves and for their dynasty, but importantly, they don’t want to risk everything to acquire more if it means serious danger that they might lose what they already have.

They have every interest in maintaining the hierarchy as it already is, within which they can then . . . It’s like having a referee…

A very good episode, definitely recommended. I enjoyed all of Helen’s books, most notably the recent